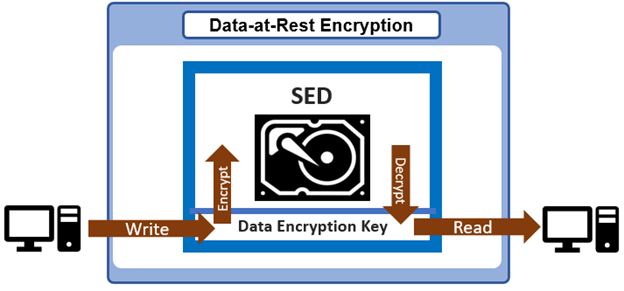

The new OneFS 9.10 release delivers an important data-at-rest encryption (DARE) security enhancement for the PowerScale F-series platforms. Specifically, the OneFS software-defined persistent memory (SDPM) journal now supports self-encrypting drives, or SEDs, satisfying the criteria for FIPS 140-3 compliance.

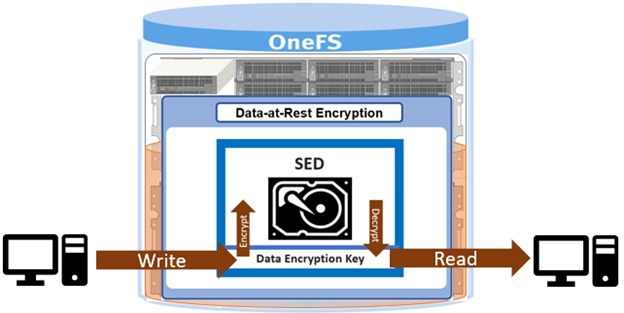

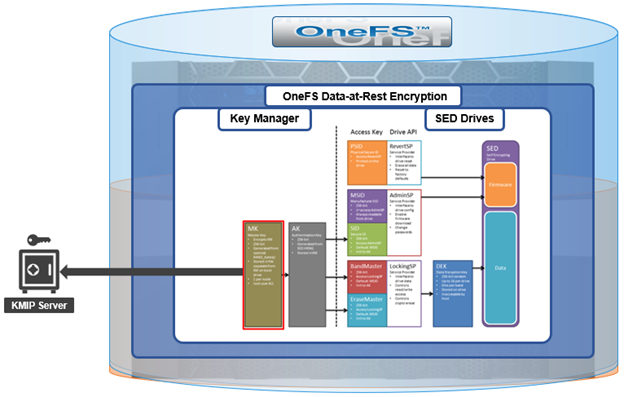

SEDs are secure storage devices that transparently encrypt all on-disk data using an internal key and a drive access password. OneFS uses nodes populated with SEDs to provide data-at-rest encryption, thereby preventing unauthorized data access.

All data that is written to a DARE PowerScale cluster is automatically encrypted the moment it is written and decrypted when it is read. Securing on-disk data with cryptography ensures that the data is protected from theft, or other malicious activity, in the event drives or nodes are removed from a cluster.

The OneFS journal is among the most critical components of a PowerScale node. When OneFS writes to a drive, the data goes straight to the journal, allowing for a fast reply. OneFS uses journaling to ensure consistency both across the disks within a node and across nodes.

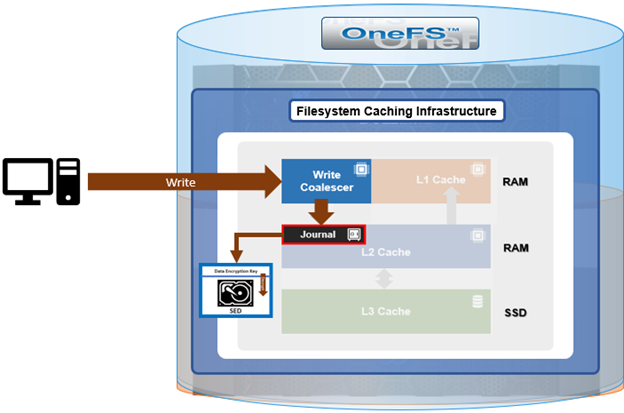

Here’s how the journal fits into the general OneFS caching hierarchy:

Block writes go to the journal before being written to disk, and a transaction must be marked as ‘committed’ in the journal before success is returned to the file system operation. Once the transaction is committed, the change is guaranteed to be stable: if the node crashes or loses power, the changes are still applied from the journal at mount time via a ‘replay’ process. As such, the journal is battery-backed so that it remains available after a catastrophic node event such as a data center power outage.

Operating primarily at the physical level, the journal stores changes to physical blocks on the local node. This is necessary because all initiators in OneFS have a physical view of the file system, and therefore issue physical read and write requests to remote nodes. The OneFS journal supports both 512-byte and 8 KiB block sizes, for storing written inodes and blocks respectively. By design, the contents of a node’s journal are only needed in a catastrophe, such as when memory state is lost.
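To make the commit-then-replay behavior concrete, here is a minimal toy model of a physical-block write-ahead journal. It is a sketch under stated assumptions, not OneFS code: the class and method names (`ToyJournal`, `begin`, `write`, `commit`, `replay`) are hypothetical, an in-memory list stands in for the battery-backed persistent region, and a dictionary stands in for the node's drives. Only the 512-byte inode and 8 KiB block record sizes and the "replay only committed transactions" rule come from the text above.

```python
from dataclasses import dataclass, field

INODE_BLOCK_SIZE = 512       # bytes, journal record size for written inodes
DATA_BLOCK_SIZE = 8 * 1024   # bytes (8 KiB), journal record size for written blocks


@dataclass
class Transaction:
    txn_id: int
    writes: dict = field(default_factory=dict)  # physical block address -> padded bytes
    committed: bool = False


class ToyJournal:
    """Hypothetical, simplified physical-block journal (illustration only)."""

    def __init__(self):
        self.transactions = []  # stands in for the battery-backed persistent region
        self.disk = {}          # stands in for the node's drives
        self._next_id = 0

    def begin(self):
        txn = Transaction(self._next_id)
        self._next_id += 1
        self.transactions.append(txn)
        return txn

    def write(self, txn, block_addr, data, is_inode=False):
        size = INODE_BLOCK_SIZE if is_inode else DATA_BLOCK_SIZE
        if len(data) > size:
            raise ValueError(f"write exceeds {size}-byte journal record")
        # Pad each record to its fixed journal block size.
        txn.writes[block_addr] = data.ljust(size, b"\0")

    def commit(self, txn):
        # Only after the commit is recorded may success be reported to the caller.
        txn.committed = True
        return True

    def replay(self):
        """Apply committed transactions to disk, as after a crash at mount time."""
        applied = 0
        for txn in self.transactions:
            if txn.committed:
                self.disk.update(txn.writes)
                applied += 1
        # Uncommitted transactions were never acknowledged, so they are dropped.
        self.transactions = []
        return applied
```

In this model, a transaction that was written but never committed simply disappears on replay, which is safe because its caller never received a success reply.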

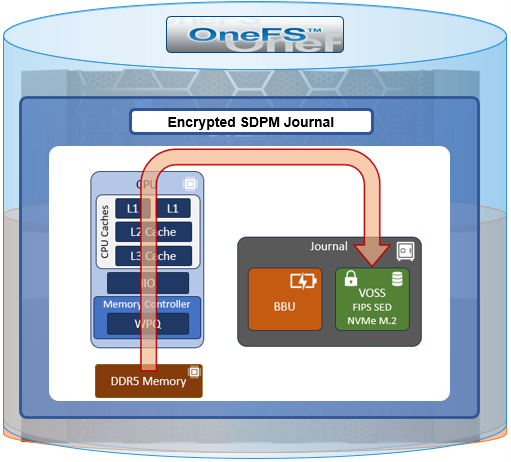

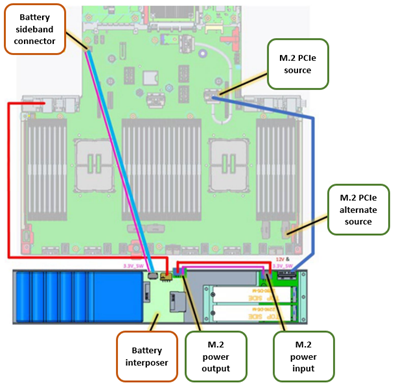

Under the hood, the current PowerScale F-series nodes use an M.2 SSD in conjunction with OneFS’ SDPM solution to provide persistent storage for the file system journal.

This is in contrast to previous-generation platforms, which used NVDIMMs.

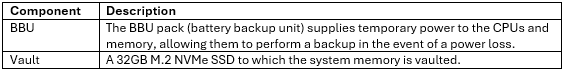

The SDPM itself comprises two main elements: a battery backup unit (BBU) and an M.2 NVMe vault drive.

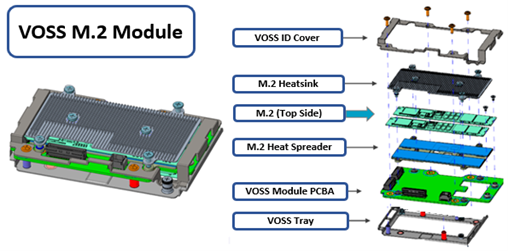

While the BBU is self-contained, the M.2 NVMe vault is housed within a VOSS module, and both components are easily replaced if necessary.

This new OneFS 9.10 functionality enables an encrypted, FIPS-compliant M.2 SSD to be used as the back-end storage for the journal’s persistent memory region, allowing the journal in an F-series node to be protected by data-at-rest encryption. This M.2 drive is also referred to as the ‘vault’ drive, and it sits atop the ‘vault optimized storage subsystem’, or VOSS, module, along with the journal battery.

This new functionality enables the transparent use of the M.2 FIPS drive, securing it in tandem with the node’s other FIPS PCIe data drives. It also paves the way for requiring FIPS 140-3 drives specifically, across the board.

So, looking a bit deeper, this new SDPM enhancement for SED nodes uses a FIPS 140-3 certified M.2 SSD within the VOSS module to provide the persistent memory for the PowerScale all-flash F-series platforms.

It also builds upon the coordination of BIOS features and functions with iDRAC, as well as OneFS’ own coordination with iDRAC through the host interface, or HOSA.

Secondly, this FIPS SDPM feature is instrumental in delivering the SED-3 security level for the F-series nodes. The redefined OneFS SED FIPS framework was discussed at length in the previous article in this series, and the SED-3 level requires FIPS 140-3 compliant drives across the board (i.e., for both data storage and the journal).

Under the hood, the VOSS drive is secured by iDRAC. iDRAC itself has both a local key manager (iLKM) function and the Secure Enterprise Key Manager (SEKM). OneFS communicates with these key managers via the HOSA and Redfish passthrough interfaces, and configures the VOSS drive at node configuration time. Beyond that, OneFS also tears down the VOSS drive in the same way it tears down the storage drives during a node reformat operation.

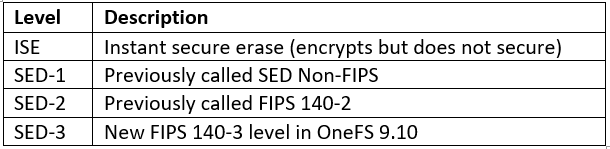

As we saw in the previous blog article, there are now three levels of self-encrypting drives or SEDs in OneFS 9.10, in addition to the standard ISE (instant secure erase) drives:

- SED level 1, previously known as SED non-FIPS.

- SED level 2, which was formerly FIPS 140-2.

- SED level 3, which denotes FIPS 140-3 compliance.

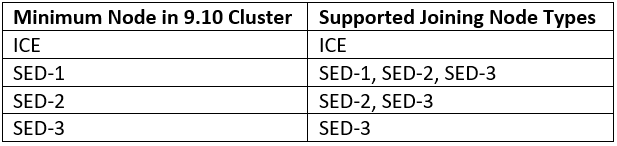

Beyond that, the existing node security behavior with regard to drive capability is retained, including the OneFS logic that prevents lower-security nodes from joining higher-security clusters. This basic restriction is not materially changed in 9.10; a new, higher tier of security, SED-3, is simply added. So a cluster comprising SED-3 nodes running OneFS 9.10 will not allow any lower-security nodes to join.
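The tiering and join restriction can be pictured as an ordered set of levels. The sketch below is illustrative only: `SecurityLevel` and `may_join` are hypothetical names, placing ISE below SED-1 is an assumption, and the only rule encoded is the one stated above (a node below the cluster's tier is rejected). Any other mixing restrictions OneFS enforces are deliberately not modeled.

```python
from enum import IntEnum


class SecurityLevel(IntEnum):
    """Drive security tiers from the article, ordered low to high.

    Ranking ISE below SED-1 is an assumption made for this sketch.
    """
    ISE = 0     # instant secure erase drives (not self-encrypting)
    SED_1 = 1   # previously "SED non-FIPS"
    SED_2 = 2   # formerly FIPS 140-2
    SED_3 = 3   # FIPS 140-3 data drives plus a FIPS 140-3 VOSS drive (OneFS 9.10)


def may_join(cluster_level: SecurityLevel, node_level: SecurityLevel) -> bool:
    """Hypothetical check: reject any node whose tier is below the cluster's tier."""
    return node_level >= cluster_level
```

For example, `may_join(SecurityLevel.SED_3, SecurityLevel.SED_2)` is `False`, mirroring a SED-2 node being refused by a SED-3 cluster. Using `IntEnum` makes the tiers directly comparable, so adding SED-3 as a new top tier leaves the existing comparison logic unchanged, which matches the article's point that the restriction itself is not materially changed.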

Specifically, the SED-3 designation requires FIPS 140-3 data drives as well as a FIPS 140-3 VOSS drive within the SDPM VOSS module. The presence of incorrect drives results in ‘wrong type’ errors, the same as pre-9.10 behavior. So if a node is built with the incorrect VOSS drive, or OneFS is unable to secure it, that node will fail a journal healthcheck during node boot and be automatically blocked from joining the cluster.
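The SED-3 eligibility rules above boil down to a small set of checks. The following is a hedged sketch, not OneFS logic: `Drive`, `sed3_node_check`, and `can_join_sed3_cluster` are hypothetical names, and the problem strings are illustrative rather than actual OneFS error text. It assumes each drive can be classified simply as FIPS 140-3 or not, and that the "could OneFS secure the VOSS drive" outcome is available as a boolean.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Drive:
    name: str
    fips_140_3: bool  # True if the drive is FIPS 140-3 certified


def sed3_node_check(data_drives: List[Drive], voss: Drive,
                    voss_secured: bool) -> List[str]:
    """Hypothetical SED-3 check; returns a list of problems (empty means eligible).

    Message strings are illustrative, not real OneFS output.
    """
    problems = []
    for drive in data_drives:
        if not drive.fips_140_3:
            problems.append(f"{drive.name}: wrong type (not FIPS 140-3)")
    if not voss.fips_140_3:
        problems.append(f"{voss.name}: wrong type (not FIPS 140-3)")
    if not voss_secured:
        problems.append(f"{voss.name}: could not be secured")
    return problems


def can_join_sed3_cluster(data_drives: List[Drive], voss: Drive,
                          voss_secured: bool) -> bool:
    # Any problem blocks the node, as with a failed journal healthcheck at boot.
    return not sed3_node_check(data_drives, voss, voss_secured)
```

Collecting every problem, rather than stopping at the first, is a design choice for the sketch so that a single pass reports all incorrect drives at once.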

SED-3 compliance requires the drives not only to be secure, but also to be actively monitored. OneFS uses its ‘hardware mon’ utility to monitor a node’s drives for correct security state, as well as checking for any unexpected state transitions. If the hardware monitor detects either condition, it triggers a CELOG alert and brings the node down into a read-only state. So if a SED-3 node is in a read-write state, this indicates that it’s fully functional and all is good.
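The monitoring behavior described above can be sketched as one polling pass that compares each drive's security state against an expected value and against the previous poll. This is a hypothetical model, not the hardware monitor's implementation: the function name, the state strings such as `"secured"`, and the `raise_alert` callback (a stand-in for a CELOG alert) are all assumptions. As a deliberate simplification, it treats any state change between polls as unexpected.

```python
from typing import Callable, Dict, List


def monitor_drive_security(previous: Dict[str, str], current: Dict[str, str],
                           expected: str,
                           raise_alert: Callable[[str], None]) -> str:
    """One polling pass of a hypothetical drive-security monitor.

    Flags any drive not in the expected security state and any drive whose
    state changed since the previous poll, raises one alert per finding,
    and returns the resulting node mode.
    """
    findings: List[str] = []
    for drive, state in current.items():
        if state != expected:
            findings.append(f"{drive}: state {state!r}, expected {expected!r}")
        before = previous.get(drive)
        if before is not None and before != state:
            findings.append(f"{drive}: unexpected transition {before!r} -> {state!r}")
    for finding in findings:
        raise_alert(finding)  # stand-in for a CELOG alert
    return "read-only" if findings else "read-write"
```

Usage: passing `alerts.append` as `raise_alert` collects the findings in a list, and a return value of `"read-write"` corresponds to the healthy SED-3 node described above.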

The ‘isi status’ CLI command has a ‘SED compliance level’ parameter, which reports the node’s level, such as SED-3. Alternatively, the ‘isi_psi_tool’ CLI utility can provide more detail on the required compliance level of the data and VOSS drives themselves, as well as the node type, etc.

The OneFS hardware monitor CLI utility (isi_hwmon) can be used to check the encryption state of the VOSS drive. The encryption state values are:

- Unlocked: Safe state, properly secured. Unlocked indicates that iDRAC has authenticated/unlocked VOSS for SDPM read/write usage.

- Locked: Degraded state. Secured but not accessible. VOSS drive is not available for SDPM usage.

- Unencrypted: Degraded state. Not secured.

- Foreign: Degraded state. iLKM is unable to authenticate, due to a missing key/PIN or the drive being secured by a foreign entity.

As such, only ‘unlocked’ represents a healthy state. The other three states (locked, unencrypted, and foreign) indicate an issue, and will result in a read-only node.
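The four VOSS encryption states and their effect on the node can be summarized in a few lines. This sketch is illustrative only: `VossState`, `is_healthy`, and `node_mode` are hypothetical names, and the enum values simply reuse the state names as written in this article; they are not a claim about the exact format of isi_hwmon output.

```python
from enum import Enum


class VossState(Enum):
    """VOSS drive encryption states as described in this article."""
    UNLOCKED = "unlocked"        # healthy: iDRAC has unlocked the drive for SDPM read/write
    LOCKED = "locked"            # degraded: secured but not accessible for SDPM
    UNENCRYPTED = "unencrypted"  # degraded: not secured
    FOREIGN = "foreign"          # degraded: iLKM cannot authenticate (missing key/PIN or foreign owner)


def is_healthy(state: VossState) -> bool:
    return state is VossState.UNLOCKED


def node_mode(state: VossState) -> str:
    """Only 'unlocked' keeps the node read-write; the other three make it read-only."""
    return "read-write" if is_healthy(state) else "read-only"
```

Modeling health as "exactly one good state" rather than a list of bad states means any state not explicitly recognized as healthy defaults to read-only, which is the conservative choice for a security check.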