The previous article in this series explored building the PowerScale multipath client driver from source on Ubuntu Linux. Now we’ll turn our attention to compiling the driver on the OpenSUSE Linux platform.

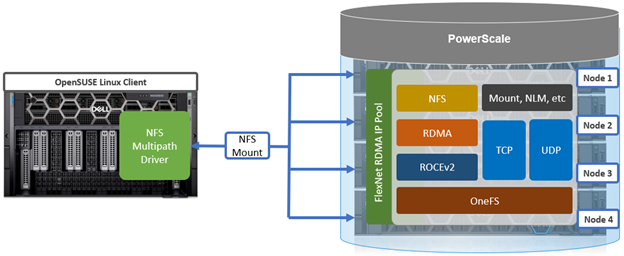

Unlike the traditional one-to-one NFS server/client mapping, this multipath client driver allows the performance of multiple PowerScale nodes to be aggregated through a single NFS mount point to one or many compute nodes.

Building the PowerScale multipath client driver from scratch, rather than just installing it from a pre-built Linux package, helps guard against minor version kernel mismatches on the Linux client that would result in the driver not installing correctly.

The driver itself is available for download on the Dell Support Site. There is no license or cost for this driver, either for the pre-built Linux package or the source code. The zipped tarfile download contains a README file that provides basic instructions.

For an OpenSUSE Linux client to successfully connect to a PowerScale cluster using the multipath driver, there are a couple of prerequisites that must be met:

- The NFS client system or virtual machine must be running the following OpenSUSE version:

| Supported Linux Distribution | Kernel Version |
| --- | --- |
| OpenSUSE 15.4 | 5.14.x |

- If RDMA is being configured, the system must contain an RDMA-capable Ethernet NIC, such as the Mellanox CX series.

- The ‘trace-cmd’ package should be installed, along with NFS client related packages.

For example:

# zypper install trace-cmd nfs-common

- Unless already installed, developer tools may also need to be added. For example:

# zypper install rpmbuild tar gzip git kernel-devel

The following CLI commands can be used to verify the kernel version and other pertinent details of the OpenSUSE client:

# uname -a
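The kernel check can also be scripted. The following is a minimal sketch, assuming the supported series is 5.14.x as listed in the table above. The function name `kernel_series_supported` is hypothetical and is not part of the driver package.

```shell
#!/bin/sh
# Hypothetical helper (not part of the driver package): succeeds when a
# kernel release string belongs to the 5.14.x series listed as supported.
kernel_series_supported() {
    case "$1" in
        5.14.*) return 0 ;;
        *)      return 1 ;;
    esac
}
```

For instance, `kernel_series_supported "$(uname -r)" || echo "kernel is outside the 5.14.x series"` could be run on each client before starting a build.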

Unless all the Linux clients are known to be running identical kernel versions, the best practice is to build and install the driver on each client individually. Otherwise, a package built against one kernel may fail to install on a client running another.

Overall, the driver source code compilation process is as follows:

- Download the driver source code from the driver download site.

- Unpack the driver source code on the Linux client

Once downloaded, this file can be extracted using the Linux ‘tar’ utility. For example:

# tar -xvf <source_tarfile>

- Build the driver source code on the Linux client

Once unpacked on the Linux client, the multipath driver package source can be built by running the following CLI command from the top level source code directory:

# ./build.sh bin

A successful build is underway when the following output appears on the console:

…<build takes about ten minutes> ------------------------------------------------------------------

When the build is complete, a package file is created in the ./dist directory, located under the top level source code directory.
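To confirm that the build produced a package, the ./dist directory can be checked from a script. This is a minimal sketch: the helper name `find_built_rpm` is hypothetical, and the `dellnfs-*.rpm` file name pattern is an assumption based on the package name used in this article.

```shell
#!/bin/sh
# Hypothetical helper: print the path of the first dellnfs RPM found in a
# dist directory (default ./dist), or fail if none exists.
# Assumption: built packages are named dellnfs-*.rpm.
find_built_rpm() {
    dist_dir="${1:-./dist}"
    for rpm in "$dist_dir"/dellnfs-*.rpm; do
        # An unmatched glob stays literal, so test that the file exists.
        if [ -f "$rpm" ]; then
            printf '%s\n' "$rpm"
            return 0
        fi
    done
    echo "no dellnfs package found in $dist_dir" >&2
    return 1
}
```

Running `find_built_rpm ./dist` from the top level source code directory would then print the package path to pass to the install step.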

- Install the driver binaries on the OpenSUSE client

# zypper in ./dist/dellnfs-4.0.22-kernel_5.14.21_150400.24.97_default.x86_64.rpm
Loading repository data...
Reading installed packages...
Resolving package dependencies...
The following NEW package is going to be installed:
dellnfs
1 new package to install.

- Check installed files

# rpm -qa | grep dell
dellnfs-4.0.22-kernel_5.14.21_150400.24.100_default.x86_64
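Because the package name embeds the kernel it was built for, it can be compared against the running kernel. The sketch below assumes a naming convention inferred from the package names shown in this article: the kernel release follows `kernel_`, with `-` written as `_` and the architecture as the final dot-separated suffix. The helper name `rpm_kernel_release` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: derive the kernel release a dellnfs package was built
# for from its name. Assumes the release follows "-kernel_", with "-" written
# as "_" and the architecture as the final dot suffix.
rpm_kernel_release() {
    name="${1##*/}"          # drop any leading directory
    name="${name%.rpm}"      # drop the .rpm extension if present
    rel="${name#*-kernel_}"  # keep what follows "-kernel_"
    rel="${rel%.*}"          # drop the architecture suffix (e.g. .x86_64)
    printf '%s\n' "$rel" | tr '_' '-'
}
```

A check such as `[ "$(rpm_kernel_release "$pkg")" = "$(uname -r)" ]`, where `$pkg` holds the package name, would flag a driver package built for a different kernel than the one currently running.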

- Reboot

# reboot

- Check services are started

# systemctl start portmap
# systemctl start nfs
# systemctl status nfs
nfs.service - Alias for NFS client
Loaded: loaded (/usr/lib/systemd/system/nfs.service; disabled; vendor preset: disabled)
Active: active (exited) since Tues 2024-10-15 15:11:09 PST; 2s ago
Process: 15577 ExecStart=/bin/true (code=exited, status=0/SUCCESS)
Main PID: 15577 (code=exited, status=0/SUCCESS)
Oct 15 15:11:09 CLI22 systemd[1]: Starting Alias for NFS client...
Oct 15 15:11:09 CLI22 systemd[1]: Finished Alias for NFS client.

- Check client driver is loaded with dellnfs-ctl script

# dellnfs-ctl status
version: 4.0.22
kernel modules: sunrpc
services: rpcbind.socket rpcbind
rpc_pipefs: /var/lib/nfs/rpc_pipefs
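For scripted checks across many clients, the driver version can be pulled out of the status output. This is a minimal sketch, assuming the status output places each field on its own line in `key: value` form. The helper name `dellnfs_status_version` is hypothetical and is not provided by the driver.

```shell
#!/bin/sh
# Hypothetical helper: read `dellnfs-ctl status` output on stdin and print
# the value of the "version:" line; fails if no such line is present.
# Assumption: one "key: value" field per line.
dellnfs_status_version() {
    awk -F': *' '$1 == "version" { print $2; found = 1 } END { exit !found }'
}
```

It could be used as `dellnfs-ctl status | dellnfs_status_version`, with a non-zero exit status indicating that no version line was reported.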

Note, however, that when building and installing on an OpenSUSE virtual machine (VM), additional steps are required.

Since OpenSUSE does not reliably install a kernel-devel kit that matches the running kernel version, this must be forced to happen as follows:

- Install dependencies

# zypper install rpmbuild tar gzip git

- Install CORRECT kernel-devel package

According to the OpenSUSE documentation, the recommended way to install the kernel-devel package is to use:

# zypper install kernel-default-devel

Beware that the ‘zypper install kernel-default-devel’ command occasionally fails to install the correct kernel-devel package. This can be verified by looking at the following paths:

# ls /lib/modules/
5.14.21-150500.55.39-default
# uname -a
Linux 6f8edb8b881a 5.15.0-91-generic #101~20.04.1-Ubuntu SMP Thu Nov 16 14:22:28 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

Note that the contents of /lib/modules above do not match the ‘uname’ command output:

‘5.14.21-150500.55.39-default’ vs. ‘5.15.0-91-generic’
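This mismatch can be detected before attempting a build. The following is a minimal sketch: the helper name `modules_match_kernel` is hypothetical, and the optional second argument exists only so that the check can be pointed at a different modules root.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if a module tree exists for the given
# kernel release under the modules root (default /lib/modules).
modules_match_kernel() {
    release="$1"
    modules_root="${2:-/lib/modules}"
    [ -d "$modules_root/$release" ]
}
```

For example, `modules_match_kernel "$(uname -r)" || echo "no module tree for the running kernel"` would report the situation shown above.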

Another issue with installing with ‘kernel-devel’ is that sometimes the /lib/modules/$(uname -r) directory will not include the /build subdirectory.

If this occurs, the client-side driver build will fail with the following error:

# ls -alh /lib/modules/$(uname -r)/build
ls: cannot access '/lib/modules/5.14.21-150400.24.63-default/build': No such file or directory
....
Kernel root not found

The recommendation is to install the specific kernel-devel package that matches the client’s running kernel. First, identify the package that owns the running kernel’s module directory. For example:

# ls -alh /lib/modules/$(uname -r)
total 5.4M
drwxr-xr-x 1 root root 488 Dec 13 19:37 .
drwxr-xr-x 1 root root 164 Dec 13 19:42 ..
drwxr-xr-x 1 root root 94 May 3 2023 kernel
drwxr-xr-x 1 root root 60 Dec 13 19:37 mfe_aac
-rw-r--r-- 1 root root 1.2M May 9 2023 modules.alias
-rw-r--r-- 1 root root 1.2M May 9 2023 modules.alias.bin
-rw-r--r-- 1 root root 6.4K May 3 2023 modules.builtin
-rw-r--r-- 1 root root 17K May 9 2023 modules.builtin.alias.bin
-rw-r--r-- 1 root root 8.2K May 9 2023 modules.builtin.bin
-rw-r--r-- 1 root root 49K May 3 2023 modules.builtin.modinfo
-rw-r--r-- 1 root root 610K May 9 2023 modules.dep
-rw-r--r-- 1 root root 809K May 9 2023 modules.dep.bin
-rw-r--r-- 1 root root 455 May 9 2023 modules.devname
-rw-r--r-- 1 root root 802 May 3 2023 modules.fips
-rw-r--r-- 1 root root 181K May 3 2023 modules.order
-rw-r--r-- 1 root root 1.2K May 9 2023 modules.softdep
-rw-r--r-- 1 root root 610K May 9 2023 modules.symbols
-rw-r--r-- 1 root root 740K May 9 2023 modules.symbols.bin
drwxr-xr-x 1 root root 36 May 9 2023 vdso
# rpm -qf /lib/modules/$(uname -r)/
kernel-default-5.14.21-150400.24.63.1.x86_64 <---------------------
kernel-default-extra-5.14.21-150400.24.63.1.x86_64
kernel-default-optional-5.14.21-150400.24.63.1.x86_64

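Take the ‘kernel-default’ package name reported by ‘rpm -qf’ and replace its ‘kernel-default’ prefix with ‘kernel-default-devel’, keeping the version string unchanged: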

# zypper install kernel-default-devel-5.14.21-150400.24.63.1.x86_64
Loading repository data...
Reading installed packages...
The selected package 'kernel-default-devel-5.14.21-150400.24.63.1.x86_64' from repository 'Update repository with updates from SUSE Linux Enterprise 15' has lower version than the installed one. Use 'zypper install --oldpackage kernel-default-devel-5.14.21-150400.24.63.1.x86_64' to force installation of the package.
Resolving package dependencies...
Nothing to do.
# zypper install --oldpackage kernel-default-devel-5.14.21-150400.24.63.1.x86_64
Loading repository data...
Reading installed packages...
Resolving package dependencies...
The following 2 NEW packages are going to be installed:
kernel-default-devel-5.14.21-150400.24.63.1 kernel-devel-5.14.21-150400.24.63.1
2 new packages to install.
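The package name derivation described above can be sketched as a small filter. The helper name `devel_package_name` is hypothetical, and the sketch assumes the ‘rpm -qf’ output lists one package per line with the base kernel package named `kernel-default-<version>`.

```shell
#!/bin/sh
# Hypothetical helper: read `rpm -qf /lib/modules/$(uname -r)/` output on
# stdin and print the matching kernel-default-devel package name.
# Only the base package (kernel-default-<digit>...) is rewritten; the
# -extra and -optional packages are skipped.
devel_package_name() {
    sed -n 's/^kernel-default-\([0-9]\)/kernel-default-devel-\1/p'
}
```

Used as `rpm -qf /lib/modules/$(uname -r)/ | devel_package_name`, this would print the name to pass to ‘zypper install --oldpackage’.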

Now the build directory exists:

# ls -alh /lib/modules/$(uname -r)
total 5.4M
drwxr-xr-x 1 root root 510 Dec 13 19:46 .
drwxr-xr-x 1 root root 164 Dec 13 19:42 ..
lrwxrwxrwx 1 root root 54 May 3 2023 build -> /usr/src/linux-5.14.21-150400.24.63-obj/x86_64/default
drwxr-xr-x 1 root root 94 May 3 2023 kernel
drwxr-xr-x 1 root root 60 Dec 13 19:37 mfe_aac
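The presence of the build directory can also be verified from a script before starting the driver build. This is a minimal sketch with a hypothetical helper name, `kernel_build_dir_present`. Because the ‘build’ entry is a symbolic link, the test follows the link and fails if its target is missing.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the "build" entry for a kernel release
# exists under the modules root (default /lib/modules) and resolves to a
# directory. A dangling symlink counts as missing.
kernel_build_dir_present() {
    release="$1"
    modules_root="${2:-/lib/modules}"
    [ -d "$modules_root/$release/build" ]
}
```

For example, `kernel_build_dir_present "$(uname -r)" || echo "kernel-devel files for the running kernel are missing"`.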

This issue is less likely to occur if ‘zypper update’ is run first, although the update can take more than fifteen minutes to complete.

Next, reboot the Linux client and then run:

# zypper install kernel-default-devel

In the next and final article of this series, we’ll be looking at the configuration and management of the multipath client driver.