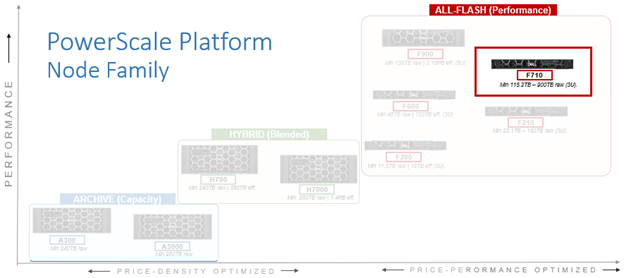

In this article, we’ll turn our focus to the new PowerScale F710 hardware node that was launched a couple of weeks back. Here’s where this new platform lives in the current hardware hierarchy:

The PowerScale F710 is a high-end all-flash platform that pairs dual-socket 4th Gen Intel Xeon processors with 512GB of memory and ten NVMe drives, all contained within a 1RU chassis. The F710 thus offers a substantial hardware evolution over previous generations, with a focus on environmental sustainability: it reduces power consumption and carbon footprint while delivering blistering performance. This makes the F710 an ideal candidate for demanding workloads such as M&E content creation and rendering, high-concurrency and low-latency workloads such as chip design (EDA) and high-frequency trading, and all phases of generative AI workflows.

An F710 cluster can comprise between 3 and 252 nodes. Inline data reduction, which incorporates compression, dedupe, and single instancing, is also included as standard to further increase the effective capacity.

The F710 is based on the 1U R660 PowerEdge server platform, with dual-socket Intel Sapphire Rapids CPUs. Front-end networking options include 10/25 GbE or 100 GbE, with 100 GbE for the back-end network. The F710’s core hardware specifications are as follows:

| Attribute | F710 Spec |
|---|---|
| Chassis | 1RU Dell PowerEdge R660 |
| CPU | Dual socket, 24 core Intel Sapphire Rapids 6442Y @2.6GHz |
| Memory | 512GB Dual rank DDR5 RDIMMs (16 x 32GB) |
| Journal | 1 x 32GB SDPM |
| Front-end network | 2 x 100GbE or 25GbE |
| Back-end network | 2 x 100GbE |
| NVMe SSD drives | 10 |

These node hardware attributes can be easily viewed from the OneFS CLI via the ‘isi_hw_status’ command. Also note that, at the current time, the F710 is only available in a 512GB memory configuration.

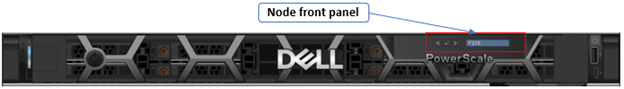

Starting at the business end of the node, the front panel allows the user to join an F710 to a cluster and displays the node’s name once it has successfully joined.

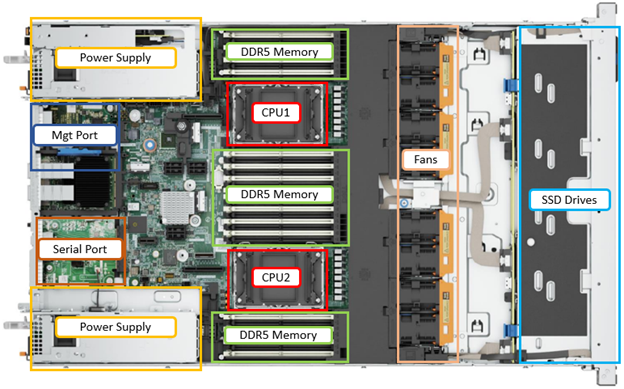

Removing the top cover, the internal layout of the F710 chassis is as follows:

The Dell ‘Smart Flow’ chassis is specifically designed for balanced airflow, with enhanced cooling driven primarily by four dual-fan modules. The redundant power supplies contain their own airflow apparatus and can be easily replaced from the rear without opening the chassis.

For storage, each PowerScale F710 node contains ten NVMe SSDs, which are currently available in the following capacities and drive styles:

| Standard drive capacity | SED-FIPS drive capacity | SED-non-FIPS drive capacity |
|---|---|---|
| 3.84 TB TLC | 3.84 TB TLC | |
| 7.68 TB TLC | 7.68 TB TLC | |
| 15.36 TB QLC | Future availability | 15.36 TB QLC |
| 30.72 TB QLC | Future availability | 30.72 TB QLC |

Note that 15.36TB and 30.72TB SED-FIPS drive options are planned for future release.
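As a rough illustration of what these drive options mean at the node and cluster level, the following sketch multiplies out raw capacity from the figures in this article (ten drives per node, 3 to 252 nodes per cluster). The function name `raw_capacity_tb` is hypothetical, and the result is raw capacity only: it ignores OneFS protection overhead, and any gains from inline data reduction.

```python
# Raw capacity arithmetic for an F710 cluster, using figures from this article.
# Assumption: "raw" means drives x capacity, before protection overhead and
# before any inline data reduction savings.

DRIVES_PER_NODE = 10
DRIVE_CAPACITIES_TB = (3.84, 7.68, 15.36, 30.72)
MIN_NODES, MAX_NODES = 3, 252


def raw_capacity_tb(nodes: int, drive_tb: float) -> float:
    """Return raw capacity in TB for `nodes` F710 nodes with `drive_tb` drives."""
    if not MIN_NODES <= nodes <= MAX_NODES:
        raise ValueError(f"node count must be between {MIN_NODES} and {MAX_NODES}")
    if drive_tb not in DRIVE_CAPACITIES_TB:
        raise ValueError(f"unsupported drive capacity: {drive_tb} TB")
    return nodes * DRIVES_PER_NODE * drive_tb


if __name__ == "__main__":
    for drive_tb in DRIVE_CAPACITIES_TB:
        smallest = raw_capacity_tb(MIN_NODES, drive_tb)
        largest = raw_capacity_tb(MAX_NODES, drive_tb)
        print(f"{drive_tb:>6} TB drives: {smallest:,.1f} TB to {largest:,.1f} TB raw")
```

Usable capacity will be lower than these figures once protection is applied, and effective capacity may be higher or lower depending on how well the data reduces.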

Drive subsystem-wise, the PowerScale F710 1RU chassis is fully populated with ten NVMe SSDs. These are housed in drive bays spread across the front of the node as follows:

This contrasts with, and provides improved density over, its predecessor, the F600, which contains eight NVMe drives per node.

The NVMe drives connect across PCIe lanes and use the NVMe and NVD drivers. NVD is a block device driver that exposes an NVMe namespace as a drive, and it is what most OneFS operations act upon. Each NVMe drive has a /dev/nvmeX, /dev/nvmeXnsX, and /dev/nvdX device entry, and drive locations are displayed as ‘bays’. Details can be queried with OneFS CLI drive utilities such as ‘isi_radish’ and ‘isi_drivenum’. For example:

    # isi_drivenum
    Bay 0 Unit 15 Lnum 9 Active SN:S61DNE0N702037 /dev/nvd5
    Bay 1 Unit 14 Lnum 10 Active SN:S61DNE0N702480 /dev/nvd4
    Bay 2 Unit 13 Lnum 11 Active SN:S61DNE0N702474 /dev/nvd3
    Bay 3 Unit 12 Lnum 12 Active SN:S61DNE0N702485 /dev/nvd2
    Bay 4 Unit 19 Lnum 5 Active SN:S61DNE0N702031 /dev/nvd9
    Bay 5 Unit 18 Lnum 6 Active SN:S61DNE0N702663 /dev/nvd8
    Bay 6 Unit 17 Lnum 7 Active SN:S61DNE0N702726 /dev/nvd7
    Bay 7 Unit 16 Lnum 8 Active SN:S61DNE0N702725 /dev/nvd6
    Bay 8 Unit 23 Lnum 1 Active SN:S61DNE0N702718 /dev/nvd1
    Bay 9 Unit 22 Lnum 2 Active SN:S61DNE0N702727 /dev/nvd10
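For scripting purposes, output in this shape can be turned into structured records. The sketch below is a minimal Python parser that assumes each entry follows the `Bay / Unit / Lnum / state / SN: / device` layout seen in the sample above; the `DriveEntry` class and `parse_drivenum` function are hypothetical names, and the field layout may differ on other OneFS releases or node types.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveEntry:
    bay: int
    unit: int
    lnum: int
    state: str
    serial: str
    device: str


# Assumed entry layout, taken from the sample output in this article:
#   Bay <n> Unit <n> Lnum <n> <state> SN:<serial> <device path>
_ENTRY = re.compile(
    r"Bay\s+(\d+)\s+Unit\s+(\d+)\s+Lnum\s+(\d+)\s+(\S+)\s+SN:(\S+)\s+(/dev/\S+)"
)


def parse_drivenum(text: str) -> list[DriveEntry]:
    """Parse isi_drivenum-style text, whether one entry per line or run together."""
    return [
        DriveEntry(int(m[1]), int(m[2]), int(m[3]), m[4], m[5], m[6])
        for m in _ENTRY.finditer(text)
    ]


# Two entries copied from the sample output above.
SAMPLE = """\
Bay 0 Unit 15 Lnum 9 Active SN:S61DNE0N702037 /dev/nvd5
Bay 1 Unit 14 Lnum 10 Active SN:S61DNE0N702480 /dev/nvd4
"""

if __name__ == "__main__":
    for drive in parse_drivenum(SAMPLE):
        print(f"bay {drive.bay}: {drive.device} ({drive.state}, SN {drive.serial})")
```

Matching with `finditer` rather than splitting on newlines means the parser tolerates output that has been pasted onto a single line.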

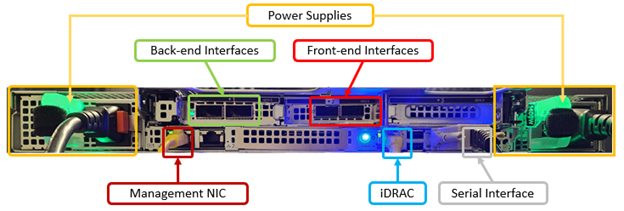

Moving to the back of the chassis, the rear of the F710 contains the power supplies, network, and management interfaces, which are arranged as follows:

The F710 nodes are available in the following networking configurations, with a 25/100Gb Ethernet front-end and 100Gb Ethernet back-end:

| Front-end NIC | Back-end NIC | F710 NIC Support |
|---|---|---|
| 100GbE | 100GbE | Yes |
| 100GbE | 25GbE | No |
| 25GbE | 100GbE | Yes |
| 25GbE | 25GbE | No |

Note that, like the F210, the F710 does not support an InfiniBand back-end at the current time.
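The support matrix above reduces to a simple lookup, which can be handy when validating a planned configuration in a script. This is only a sketch of the table as published in this article: the names `SUPPORTED_NIC_COMBOS` and `is_supported` are hypothetical, and the entries should be rechecked against current Dell documentation before being relied on.

```python
# Supported (front-end, back-end) NIC pairings for the F710, as listed in the
# table in this article. Any pairing not listed is treated as unsupported.
SUPPORTED_NIC_COMBOS = {
    ("100GbE", "100GbE"),
    ("25GbE", "100GbE"),
}


def is_supported(front_end: str, back_end: str) -> bool:
    """Return True if the front-end/back-end NIC pairing appears as supported."""
    return (front_end, back_end) in SUPPORTED_NIC_COMBOS


if __name__ == "__main__":
    for fe in ("100GbE", "25GbE"):
        for be in ("100GbE", "25GbE"):
            verdict = "Yes" if is_supported(fe, be) else "No"
            print(f"front-end {fe:>6}, back-end {be:>6}: {verdict}")
```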

Compared with its F600 predecessor, the F710 sees a number of hardware performance upgrades. These include a move to PCIe Gen5, Gen4 NVMe, DDR5 memory, Sapphire Rapids CPUs, and a new software-defined persistent memory (SDPM) file system journal. Also, the 1GbE management port has moved to LAN-on-Motherboard (LOM), whereas the DB9 serial port is now on a RIO card. Firmware-wise, the F710 and OneFS 9.7 require a minimum of NFP 12.0.

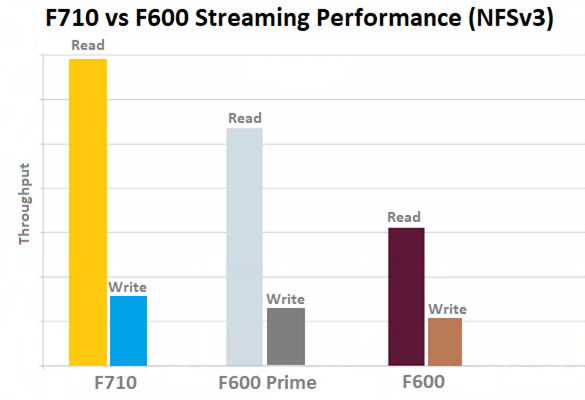

In terms of performance, the new F710 provides a considerable leg up on both the previous-generation F600 and F600 prime. This is particularly apparent with NFSv3 streaming reads, as can be seen below:

Given its additional drives (ten SSDs versus eight for the F600s) plus this performance disparity, the F710 does not currently have any other compatible node types. This means that, unlike the F210, F710 nodes cannot join an existing node pool: the minimum F710 configuration requires adding a new node pool of at least three nodes.