There have been several recent questions from the field about how a cluster manages space reservation and pre-allocation of capacity for data repair and drive rebuilds.

OneFS provides a mechanism called Virtual Hot Spare (VHS), which helps ensure that node pools maintain enough free space to successfully re-protect data in the event of drive failure.

Although globally configured, Virtual Hot Spare actually operates at the node pool level so that nodes with different size drives reserve the appropriate VHS space. To achieve this, the reservation mechanism allocates a fraction of the node pool’s VHS space in each of its constituent disk pools. This helps ensure that, while data may move from one disk pool to another during repair, it remains on the same class of storage. VHS reservations are cluster wide and configurable as either a percentage of total storage (0-20%) or as a number of virtual drives (1-4).
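
To make the arithmetic concrete, here is a minimal Python sketch of how a node pool's reservation might be derived and then divided among its disk pools. It is illustrative only and is not OneFS's actual logic. The function names are hypothetical, and it assumes two things the text above does not state: that when both limits are set the larger amount applies, and that each disk pool holds a share proportional to its capacity.

```python
def node_pool_vhs_reserve(pool_capacity_tb, drive_size_tb, limit_percent, limit_drives):
    """Return the VHS reservation, in TB, for a single node pool.

    Assumption: when both limits are set, the larger amount applies.
    """
    by_percent = pool_capacity_tb * limit_percent / 100
    by_drives = drive_size_tb * limit_drives
    return max(by_percent, by_drives)


def split_across_disk_pools(reserve_tb, disk_pool_capacities_tb):
    """Divide a node pool's reservation among its disk pools.

    Assumption: each disk pool holds a share proportional to its capacity.
    """
    total = sum(disk_pool_capacities_tb)
    return [reserve_tb * capacity / total for capacity in disk_pool_capacities_tb]


# Hypothetical node pool: 400 TB of capacity, 4 TB drives, two equal disk pools.
reserve = node_pool_vhs_reserve(400, 4, limit_percent=10, limit_drives=1)
shares = split_across_disk_pools(reserve, [200, 200])
print(reserve, shares)
```

Because the drive size is an input per node pool, a pool built from larger drives reserves more space for the same global "number of virtual drives" setting, which is the behavior described above.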

No space is reserved for VHS on SSDs unless the entire node pool consists of SSDs. This means that data from a failed SSD may be moved to HDDs during repair, but no additional configuration settings are required. It also avoids reserving an unreasonable percentage of the SSD space in a node pool.

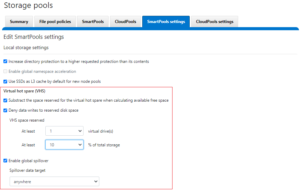

The default for new clusters is for Virtual Hot Spare to have both “subtract the space reserved for the virtual hot spare…” and “deny new data writes…” enabled with one virtual drive. On upgrade, existing settings are maintained.

It is strongly encouraged to keep Virtual Hot Spare enabled on a cluster, and a best practice is to configure 10% of total storage for VHS. If VHS is disabled and you upgrade OneFS, VHS will remain disabled. If VHS is disabled on your cluster, first check to ensure the cluster has sufficient free space to safely enable VHS, and then enable it.

VHS can be configured via the OneFS WebUI, and is always available, regardless of whether SmartPools has been licensed on a cluster. For example:

From the CLI, the cluster’s VHS configuration is part of the storage pool settings, and can be viewed with the following syntax:

    # isi storagepool settings view
    Automatically Manage Protection: files_at_default
    Automatically Manage Io Optimization: files_at_default
    Protect Directories One Level Higher: Yes
    Global Namespace Acceleration: disabled
    Virtual Hot Spare Deny Writes: Yes
    Virtual Hot Spare Hide Spare: Yes
    Virtual Hot Spare Limit Drives: 1
    Virtual Hot Spare Limit Percent: 10
    Global Spillover Target: anywhere
    Spillover Enabled: Yes
    SSD L3 Cache Default Enabled: Yes
    SSD Qab Mirrors: one
    SSD System Btree Mirrors: one
    SSD System Delta Mirrors: one
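
For scripted checks, the VHS fields can be pulled out of this output with a few lines of Python. The sketch below is a hedged example and not an official tool: `parse_settings` is a hypothetical helper, it assumes the command prints one `Name: value` pair per line, and it runs against a pasted sample rather than a live cluster.

```python
def parse_settings(text):
    """Turn 'Name: value' lines into a dict, ignoring lines without a colon."""
    settings = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


# Sample taken from the settings output shown in this article.
sample = """\
Virtual Hot Spare Deny Writes: Yes
Virtual Hot Spare Hide Spare: Yes
Virtual Hot Spare Limit Drives: 1
Virtual Hot Spare Limit Percent: 10
Spillover Enabled: Yes
"""

settings = parse_settings(sample)
vhs = {name: value for name, value in settings.items()
       if name.startswith("Virtual Hot Spare")}
print(vhs)
```

On a cluster, the same function could be fed the captured text of `isi storagepool settings view` instead of the sample string.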

Similarly, the following command will set the cluster’s VHS space reservation to 10%.

    # isi storagepool settings modify --virtual-hot-spare-limit-percent 10

Bear in mind that reservations for virtual hot sparing will affect spillover. For example, if VHS is configured to reserve 10% of a pool’s capacity, spillover will occur at 90% full.
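
The relationship between the reservation and the spillover point is simple subtraction, sketched below in Python. The function names are hypothetical, and the model deliberately ignores everything except the VHS percentage, so treat it as a back-of-the-envelope aid, not a description of how OneFS decides to spill.

```python
def spillover_threshold_percent(vhs_percent):
    """Percentage full at which a pool begins to spill over."""
    return 100 - vhs_percent


def pool_would_spill(used_tb, capacity_tb, vhs_percent):
    """True once usage reaches the part of the pool not reserved for VHS."""
    return used_tb * 100 >= capacity_tb * spillover_threshold_percent(vhs_percent)


# With a 10% reservation, a 100 TB pool spills once 90 TB is in use.
print(spillover_threshold_percent(10))
print(pool_would_spill(89, 100, 10), pool_would_spill(90, 100, 10))
```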

The VHS percentage parameter is governed by the following sysctl:

    # sysctl efs.bam.disk_pool_min_vhs_pct
    efs.bam.disk_pool_min_vhs_pct: 10

There’s also a related sysctl that constrains the upper VHS bounds to a maximum of 50% by default:

    # sysctl efs.bam.disk_pool_max_vhs_pct
    efs.bam.disk_pool_max_vhs_pct: 50

Spillover allows data that is being sent to a full pool to be diverted to an alternate pool. Spillover is enabled by default on clusters that have more than one pool. If you have a SmartPools license on the cluster, you can disable Spillover. However, it is recommended that you keep Spillover enabled. If a pool is full and Spillover is disabled, you might get a “no space available” error but still have a large amount of space left on the cluster.

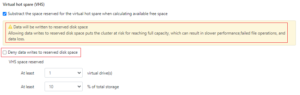

If the cluster is inadvertently configured to allow data writes to the reserved VHS space, the following informational warning will be displayed in the SmartPools WebUI:

There is also no requirement to reserve space for snapshots in OneFS. Snapshots can use as much or as little of the available file system space as necessary.

A snapshot reserve can be configured if preferred, although this will be an accounting reservation rather than a hard limit and is not a recommended best practice. If desired, the snapshot reserve can be set via the OneFS command line interface (CLI) by running the ‘isi snapshot settings modify --reserve’ command.

For example, the following command will set the snapshot reserve to 10%:

    # isi snapshot settings modify --reserve 10

It’s worth noting that the snapshot reserve does not constrain the amount of space that snapshots can use on the cluster. Snapshots can consume a greater percentage of storage capacity than that specified by the snapshot reserve.

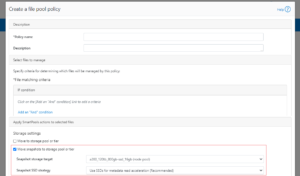

Additionally, when using SmartPools, snapshots can be stored on a different node pool or tier than the one the original data resides on.

For example, as shown above, the snapshots taken on a performance-aligned tier can be physically housed on a more cost-effective archive tier.