In this article in the series, we turn our attention to the specifics of configuring a PowerScale cluster for NFS over RDMA.

On the OneFS side, the PowerScale cluster hardware must meet certain prerequisite criteria in order to use NFS over RDMA. Specifically:

| Requirement | Details |
| --- | --- |
| Node type | F210, F200, F600, F710, F900, F910, F800, F810, H700, H7000, A300, A3000 |
| Network card (NIC) | NVIDIA Mellanox ConnectX-3 Pro, ConnectX-4, ConnectX-5, or ConnectX-6 network adapters that support 25/40/100 GigE connectivity. |
| OneFS version | OneFS 9.2 or later for NFSv3 over RDMA, and OneFS 9.8 or later for NFSv4.x over RDMA. |
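As a rough illustration of the version requirement in the table, the following shell sketch maps a OneFS release string to the NFS over RDMA protocol versions it can offer. The function name `rdma_support` is hypothetical, and the sketch assumes the release string begins with `major.minor` (for example `9.8.0.0`). It does not check node type, NIC model, or whether an upgrade has been committed.

```shell
# Hypothetical helper: report which NFS-over-RDMA protocol versions a OneFS
# release can offer, based only on the version thresholds in the table
# (9.2 or later for NFSv3, 9.8 or later for NFSv4.x).
rdma_support() {
  major=${1%%.*}          # text before the first dot
  rest=${1#*.}            # text after the first dot
  minor=${rest%%.*}       # second version component
  ver=$(( major * 100 + minor ))
  if [ "$ver" -ge 908 ]; then
    echo "NFSv3 NFSv4.x"
  elif [ "$ver" -ge 902 ]; then
    echo "NFSv3"
  else
    echo "none"
  fi
}

rdma_support "9.5.0.0"
```

Hardware eligibility still has to be confirmed separately against the node type and NIC rows.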

The following procedure can be used to configure the cluster for NFS over RDMA:

- First, from the OneFS CLI, verify which of the cluster’s front-end network interfaces support RoCEv2. To do so, run the following CLI command and look for interfaces that report the ‘SUPPORTS_RDMA_RRoCE’ flag. For example:

    # isi network interfaces list -v
    IP Addresses: 10.219.64.16, 10.219.64.22
    LNN: 1
    Name: 100gige-1
    NIC Name: mce3
    Owners: groupnet0.subnet0.pool0, groupnet0.subnet0.testpool1
    Status: Up
    VLAN ID: -
    Default IPv4 Gateway: 10.219.64.1
    Default IPv6 Gateway: -
    MTU: 9000
    Access Zone: zone1, System
    Flags: SUPPORTS_RDMA_RRoCE
    Negotiated Speed: 40Gbps
    --------------------------------------------------------------------------------
    <snip>

Note that there is currently no WebUI equivalent for this CLI command.
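On a cluster with many interfaces, the verbose listing can be long. The sketch below is one possible way to reduce it to the RDMA-capable interfaces only. The function name `rdma_capable_ifaces` is hypothetical, and the parsing assumes each field appears on its own line with LNN and Name ahead of Flags, as in the example output above.

```shell
# Hypothetical filter: read `isi network interfaces list -v` output on stdin
# and print LNN:name for each interface whose Flags line contains
# SUPPORTS_RDMA_RRoCE. Assumes one "Field: value" pair per line.
rdma_capable_ifaces() {
  awk '
    /^[[:space:]]*LNN:/   { lnn = $2 }    # remember the current node number
    /^[[:space:]]*Name:/  { name = $2 }   # interface name (not "NIC Name:")
    /^[[:space:]]*Flags:/ && /SUPPORTS_RDMA_RRoCE/ { print lnn ":" name }
  '
}

# Assumed usage on a cluster node:
#   isi network interfaces list -v | rdma_capable_ifaces
```

The `LNN:name` output form matches the notation used for the `--ifaces` argument when creating a network pool.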

- Next, from the OneFS CLI, create an IP pool that contains the RoCEv2-capable network interface(s). For example:

# isi network pools create --id=groupnet0.40g.40gpool1 --ifaces=1:40gige-1,1:40gige-2,2:40gige-1,2:40gige-2,3:40gige-1,3:40gige-2,4:40gige-1,4:40gige-2 --ranges=172.16.200.129-172.16.200.136 --access-zone=System --nfs-rroce-only=true

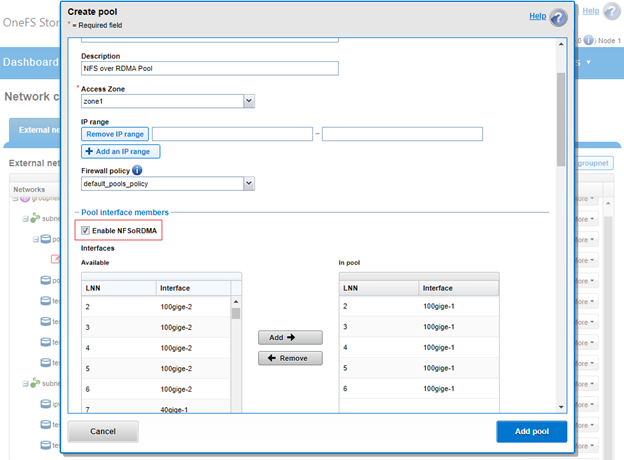
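Typing the `--ifaces` list by hand is error-prone on larger clusters. The sketch below shows one way the list could be generated for a set of nodes that all expose the same interface names. The helper `build_ifaces` is hypothetical, and the pool ID, IP range, and interface names are simply those from the example above. The command is echoed for review rather than executed.

```shell
# Hypothetical helper: build a comma-separated LNN:name list for every
# node/interface combination.
build_ifaces() {
  nodes=$1      # space-separated LNNs, e.g. "1 2 3 4"
  names=$2      # space-separated interface names, e.g. "40gige-1 40gige-2"
  list=""
  for lnn in $nodes; do
    for name in $names; do
      # Prefix a comma only when the list is already non-empty.
      list="${list:+$list,}${lnn}:${name}"
    done
  done
  printf '%s\n' "$list"
}

ifaces=$(build_ifaces "1 2 3 4" "40gige-1 40gige-2")

# Print the resulting command instead of running it.
echo isi network pools create --id=groupnet0.40g.40gpool1 \
  --ifaces="$ifaces" \
  --ranges=172.16.200.129-172.16.200.136 \
  --access-zone=System --nfs-rroce-only=true
```

This only helps when every listed node has the same RDMA-capable interface names. Otherwise, the list is better assembled from the interface listing itself.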

The pool can also be created from the OneFS WebUI by navigating to Cluster management > Network configuration:

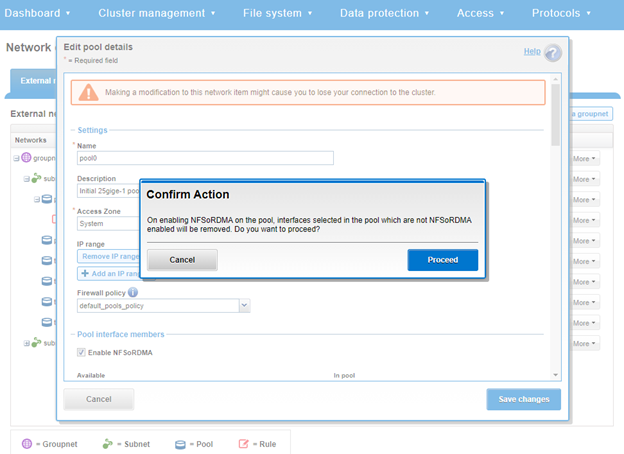

Note that when the ‘Enable NFSoRDMA’ setting is configured, a confirmation warning is displayed, stating that any non-RDMA-capable NICs will be automatically removed from the pool.

- Next, enable the cluster NFS service, the NFSoRDMA functionality (transport), and the desired protocol versions by running the following CLI commands:

# isi nfs settings global modify --nfsv3-enabled=true --nfsv4-enabled=true --nfsv4.1-enabled=true --nfsv4.2-enabled=true --nfs-rdma-enabled=true

# isi services nfs enable

In the example above, all the supported NFS protocol versions (v3, v4.0, v4.1, and v4.2) have been enabled in addition to RDMA transport.

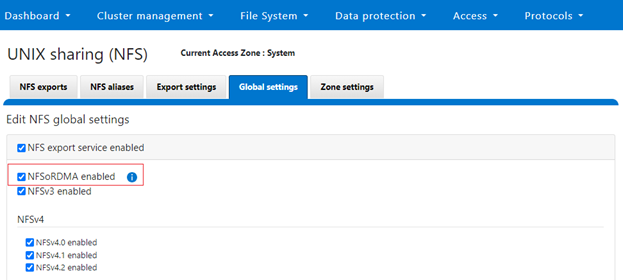

The same settings can be configured from the WebUI under Protocols > UNIX sharing (NFS) > Global settings.

Note that OneFS checks to ensure that the cluster’s NICs are RDMA-capable before allowing the NFSoRDMA setting to be enabled.

- Finally, create the NFS export via the following CLI syntax:

# isi nfs exports create --paths=/ifs/export_rdma

Or from the WebUI under Protocols > UNIX sharing (NFS) > NFS exports.

Note that NFSv4.x over RDMA only works after an upgrade to OneFS 9.8 has been committed. Also, if the NFSv3 over RDMA ‘nfsv3-rdma-enabled’ configuration option was already enabled before the upgrade to OneFS 9.8, it is automatically converted, with no client disruption, to the new ‘nfs-rdma-enabled=true’ setting, which applies to both NFSv3 and NFSv4.