Under normal conditions, OneFS typically relies on checksums, identity fields, and magic numbers to verify file system health and correctness. Within OneFS, system and data integrity can be subdivided into four distinct phases:


Here’s what each of these phases entails:

| Phase | Description |
|---|---|
| Detection | The act of scanning the file system and detecting data block instances that are not what OneFS expects to see at that logical point. Internally, OneFS stores a checksum, or IDI (Isi Data Integrity), for every allocated block under /ifs. |
| Enumeration | Notifying the cluster administrator of any file system damage uncovered in the detection phase, for example by logging to the /var/log/idi.log file. System panics may also be observed if the damage identified is not something OneFS can reasonably recover from. |
| Isolation | Cauterizing the file system, ensuring that any damage identified during the detection phase does not spread beyond the file(s) already affected. This usually involves removing all references to the affected file(s) from the file system. |
| Repair | Repairing any damage discovered and removing the “corruption” from OneFS. Typically, a DSR (Dynamic Sector Recovery) is all that is required to rebuild a block that fails IDI. |

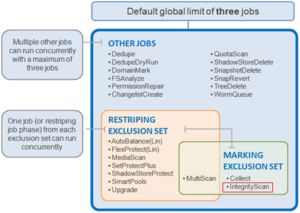

For the detection phase, the primary OneFS tool for uncovering system integrity issues is IntegrityScan. This job runs across the cluster to discover instances of damaged files and provides an estimate of the spread of the damage.

Unlike traditional fsck-style file system integrity checking tools (including OneFS’ isi_cpr utility), IntegrityScan is explicitly designed to run while the cluster is fully operational, which removes the need for any downtime. It does this by systematically reading every block and verifying its associated checksum. If IntegrityScan detects a checksum mismatch, it generates an alert, logs the error to the IDI log (/var/log/idi.log), and provides a full report upon job completion.
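To make the detection step concrete, the following minimal Python sketch rereads each block and compares a freshly computed checksum with the one stored alongside it. It is illustrative only. `zlib.crc32` stands in for OneFS's actual IDI checksum, and the `Block` type, the `scan` function, and the block-address strings are hypothetical.

```python
import zlib
from dataclasses import dataclass
from typing import List

BLOCK_SIZE = 8192  # assumption: 8 KiB blocks, as in the ":8192" baddr example


@dataclass
class Block:
    baddr: str            # hypothetical block-address label
    data: bytes           # block contents as read from disk
    stored_checksum: int  # checksum recorded when the block was written


def checksum(data: bytes) -> int:
    # Stand-in only: CRC-32, not the IDI algorithm OneFS actually uses.
    return zlib.crc32(data) & 0xFFFFFFFF


def scan(blocks: List[Block]) -> List[str]:
    """Read every block, recompute its checksum, and return mismatched addresses."""
    mismatches = []
    for blk in blocks:
        if checksum(blk.data) != blk.stored_checksum:
            # Per the text, IntegrityScan would also alert and log to /var/log/idi.log.
            mismatches.append(blk.baddr)
    return mismatches


good = bytes(BLOCK_SIZE)
damaged = b"\x01" + good[1:]  # one flipped bit, simulating on-disk damage
blocks = [
    Block("1,0,0:8192", good, checksum(good)),
    Block("1,0,8192:8192", damaged, checksum(good)),  # stored checksum predates the damage
]
print(scan(blocks))  # ['1,0,8192:8192']
```

The scan depends only on data plus stored metadata, so it can run alongside normal I/O. That is the property that lets IntegrityScan avoid the downtime an offline fsck-style check requires.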

IntegrityScan is typically run manually if the integrity of the file system is ever in doubt. By default, the job runs at an impact level of ‘Medium’ and a priority of ‘1’, and accesses the file system via a LIN scan. Although IntegrityScan itself may take several hours or days to complete, the file system remains online and fully available during this time. Additionally, like all OneFS job engine jobs, IntegrityScan can be re-prioritized, paused, or stopped, depending on its impact on cluster operations. Along with Collect and MultiScan, IntegrityScan is part of the job engine’s Marking exclusion set.
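An exclusion set behaves like a simple admission check. The Python sketch below models only that rule. The function name `may_run_now` is hypothetical. The real job engine also weighs priority, impact policy, and other exclusion sets, and it queues jobs rather than rejecting them. None of that is modeled here.

```python
from __future__ import annotations

# Members of the Marking exclusion set, as listed in the text.
MARKING_SET = {"Collect", "MultiScan", "IntegrityScan"}


def may_run_now(job_type: str, running: set[str]) -> bool:
    """Simplified admission check for the Marking exclusion set.

    A Marking job may not run while any Marking job is already running.
    Priority, impact policy, and other exclusion sets are not modeled.
    """
    if job_type in MARKING_SET:
        return not (running & MARKING_SET)
    return True


print(may_run_now("IntegrityScan", set()))                # True
print(may_run_now("IntegrityScan", {"MultiScan"}))        # False
print(may_run_now("IntegrityScan", {"HypotheticalJob"}))  # True
```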

OneFS can only accommodate a single marking job at any point in time. However, since the file system is fully journalled, IntegrityScan is only needed in exceptional situations. There are two principal use cases for IntegrityScan:

- Identifying and repairing corruption on a production cluster. Certain forms of corruption may be suggestive of a bug, in which case IntegrityScan can be used to determine the scope of the corruption and the likelihood of spreading. It can also fix some forms of corruption.

- Repairing a file system after a lost journal. This use case is much like a traditional fsck. This scenario should be treated with care, as IntegrityScan is not guaranteed to fix everything. This use case will require additional product changes to make it feasible.

IntegrityScan can be initiated manually, on demand. The following CLI syntax will kick off a manual job run:

```
# isi job start integrityscan
Started job [283]
```

```
# isi job list
ID   Type           State    Impact  Pri  Phase  Running Time
------------------------------------------------------------
283  IntegrityScan  Running  Medium  1    1/2    1s
------------------------------------------------------------
Total: 1
```

With LIN scan jobs, even though the metadata is of variable size, the job engine can fairly accurately predict how much effort will be required to scan all LINs. The IntegrityScan job’s progress can be tracked via a CLI command, as follows:

```
# isi job jobs view 283
ID: 283
Type: IntegrityScan
State: Running
Impact: Medium
Policy: MEDIUM
Pri: 1
Phase: 1/2
Start Time: 2020-12-05T22:20:58
Running Time: 31s
Participants: 1, 2, 3
Progress: Processed 947 LINs and approx. 7464 MB: 867 files, 80 directories; 0 errors
    LIN & SIN Estimate based on LIN & SIN count of 3410 done on Dec 5 22:00:10 2020 (LIN) and Dec 5 22:00:10 2020 (SIN)
    LIN & SIN Based Estimate: 1m 12s Remaining (27% Complete)
    Block Based Estimate: 10m 47s Remaining (4% Complete)
Waiting on job ID: -
Description:
```
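The LIN-based percentage is simply the number of LINs processed divided by the LIN & SIN estimate. The Python sketch below parses the progress line shown above and reproduces the reported figure. The regular expression is written against this one sample and is an assumption about the format, not a documented interface. Truncating rather than rounding is also an assumption. It was chosen because it matches the 27% displayed (947 / 3410 ≈ 27.8%).

```python
import re

# Progress line and LIN & SIN total copied from the isi job jobs view output above.
PROGRESS = "Processed 947 LINs and approx. 7464 MB: 867 files, 80 directories; 0 errors"
LIN_SIN_TOTAL = 3410

PATTERN = re.compile(
    r"Processed (?P<lins>\d+) LINs and approx\. (?P<mb>\d+) MB: "
    r"(?P<files>\d+) files, (?P<dirs>\d+) directories; (?P<errors>\d+) errors"
)


def parse_progress(line: str) -> dict:
    """Extract the counters from an IntegrityScan progress line."""
    m = PATTERN.search(line)
    if m is None:
        raise ValueError("unrecognized progress line")
    return {k: int(v) for k, v in m.groupdict().items()}


def lin_percent(processed: int, total: int) -> int:
    # Integer (truncating) division; 947/3410 is about 27.8%, shown as 27%.
    return processed * 100 // total


stats = parse_progress(PROGRESS)
print(stats["files"] + stats["dirs"] == stats["lins"])  # True: LINs = files + directories
print(lin_percent(stats["lins"], LIN_SIN_TOTAL))         # 27
```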

The LIN (logical inode) statistics above include both files and directories.

Be aware that the estimated LIN percentage can occasionally be misleading or anomalous. If concerned, verify that the stated total LIN count is roughly in line with the file count for the cluster’s dataset. Even if the LIN count is in doubt, the estimated block progress metric should always be accurate and meaningful.
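The sanity check described above amounts to comparing two numbers. The following minimal Python sketch does this with a hypothetical helper, `lin_estimate_plausible`. How you obtain the dataset's file and directory counts is left open. The 10% tolerance is an illustrative assumption, not a OneFS threshold.

```python
def lin_estimate_plausible(lin_total: int, files: int, directories: int,
                           tolerance: float = 0.10) -> bool:
    """Return True if the LIN estimate is within `tolerance` of files + directories.

    The 10% default tolerance is an arbitrary illustrative choice.
    """
    expected = files + directories
    if expected == 0:
        return lin_total == 0
    return abs(lin_total - expected) <= tolerance * expected


# Figures from this example: a LIN & SIN estimate of 3410 versus the
# 3000 files and 415 directories in the job report.
print(lin_estimate_plausible(3410, 3000, 415))  # True
print(lin_estimate_plausible(1000, 3000, 415))  # False: a stale or anomalous estimate
```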

If the job is in its early stages and no estimate is available yet, isi job will instead report its progress as ‘Started’. Note that all progress is reported per phase.

A job’s resource usage can be tracked from the CLI as follows:

```
# isi job statistics view
Job ID: 283
Phase: 1
CPU Avg.: 30.27%
Memory Avg.
    Virtual: 302.27M
    Physical: 24.04M
I/O
    Ops: 2223069
    Bytes: 16.959G
```
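The statistics output lends itself to a quick back-of-the-envelope calculation: dividing bytes by operations gives the average I/O size. In this sample it comes out at about 8 KiB, which lines up with the 8192-byte size in the baddr example at the end of this article. That suggests the scan reads roughly one block per operation, although this is an inference, not a documented property. The `to_bytes` helper is hypothetical and assumes binary units. The job report's "178641757184 bytes (166.373G)" supports that assumption.

```python
# Binary multipliers. The job report's "178641757184 bytes (166.373G)"
# implies that "G" here means 1024**3 bytes.
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}


def to_bytes(size: str) -> int:
    """Convert a size string such as '16.959G' to an approximate byte count."""
    return round(float(size[:-1]) * UNITS[size[-1]])


io_bytes = to_bytes("16.959G")
io_ops = 2223069
avg_io = io_bytes / io_ops
print(f"{avg_io:.0f} bytes per I/O")  # 8191 bytes per I/O (about 8 KiB)
```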

Finally, upon completion, the IntegrityScan job report, detailing both job phases, can be viewed by running the following CLI command with the job ID as the argument:

```
# isi job reports view 283
IntegrityScan[283] phase 1 (2020-12-05T22:34:56)
------------------------------------------------
Elapsed time       838 seconds (13m58s)
Working time       838 seconds (13m58s)
Errors             0
LINs traversed     3417
LINs processed     3417
SINs traversed     0
SINs processed     0
Files seen         3000
Directories seen   415
Total bytes        178641757184 bytes (166.373G)

IntegrityScan[283] phase 2 (2020-12-05T22:34:56)
------------------------------------------------
Elapsed time       0 seconds
Working time       0 seconds
Errors             0
LINs traversed     0
LINs processed     0
SINs traversed     0
SINs processed     0
Files seen         0
Directories seen   0
Total bytes        0 bytes
```
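Because the report is plain text, it is easy to post-process. For example, you could raise an alert whenever any phase reports a non-zero error count. The following minimal Python sketch parses the sample report above into one dictionary per phase. The field names come straight from the sample. The regular expressions are an assumption about the format and may need adjusting for other OneFS releases. Matching on `\s+` lets the same parser handle the report whether it is laid out on one line or many.

```python
import re

# Sample report text from the isi job reports view output above.
REPORT = (
    "IntegrityScan[283] phase 1 (2020-12-05T22:34:56) "
    "------------------------------------------------ "
    "Elapsed time 838 seconds (13m58s) Working time 838 seconds (13m58s) "
    "Errors 0 LINs traversed 3417 LINs processed 3417 SINs traversed 0 "
    "SINs processed 0 Files seen 3000 Directories seen 415 "
    "Total bytes 178641757184 bytes (166.373G) "
    "IntegrityScan[283] phase 2 (2020-12-05T22:34:56) "
    "------------------------------------------------ "
    "Elapsed time 0 seconds Working time 0 seconds Errors 0 LINs traversed 0 "
    "LINs processed 0 SINs traversed 0 SINs processed 0 Files seen 0 "
    "Directories seen 0 Total bytes 0 bytes"
)

FIELDS = ["Elapsed time", "Working time", "Errors", "LINs traversed",
          "LINs processed", "SINs traversed", "SINs processed",
          "Files seen", "Directories seen", "Total bytes"]

# Phase header, e.g. "IntegrityScan[283] phase 1 (2020-12-05T22:34:56)".
HEADER = re.compile(r"(\w+)\[(\d+)\] phase (\d+) \(([^)]*)\)")


def parse_report(text: str) -> dict:
    """Return {phase number: {field: value}} for each phase in the report."""
    parts = HEADER.split(text)  # ["", job, id, phase, stamp, body, job, ...]
    phases = {}
    for i in range(1, len(parts), 5):
        job, job_id, phase, stamp, body = parts[i:i + 5]
        stats = {"job": job, "job_id": int(job_id), "time": stamp}
        for name in FIELDS:
            m = re.search(re.escape(name) + r"\s+(\d+)", body)
            if m:
                stats[name] = int(m.group(1))
        phases[int(phase)] = stats
    return phases


for phase, stats in parse_report(REPORT).items():
    print(phase, stats["LINs processed"], stats["Errors"])
```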

In addition to the IntegrityScan job, OneFS also includes an ‘isi_iscan_report’ utility. This tool collates the errors from the IDI log files (/var/log/idi.log) generated on different nodes and produces a report file that can be used as input to the ‘isi_iscan_query’ tool. It also reports the number of errors seen for each file containing IDI errors. At the end of the run, the report file can be found at /ifs/.ifsvar/idi/tjob.<pid>/log.repo.
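Conceptually, the collation step merges each node's error list into a single timeline and then tallies the errors per file. The Python sketch below illustrates that idea. It does not reproduce isi_iscan_report's actual behavior or file formats. The record layout, timestamps, and /ifs paths are all hypothetical.

```python
import heapq
from collections import Counter

# Hypothetical per-node error records: (ISO-8601 UTC timestamp, node, file path).
# The real /var/log/idi.log format is not shown here; this shape is assumed.
node_logs = {
    1: [("2020-12-05T17:38:02Z", 1, "/ifs/data/a.bin"),
        ("2020-12-05T18:00:00Z", 1, "/ifs/data/b.bin")],
    2: [("2020-12-05T17:40:10Z", 2, "/ifs/data/a.bin")],
    3: [("2020-12-05T19:12:45Z", 3, "/ifs/data/a.bin")],
}


def collate(logs):
    """Merge per-node lists (each already in time order) into one timeline."""
    # Fixed-width ISO-8601 strings sort chronologically as plain strings.
    return list(heapq.merge(*logs.values()))


def errors_per_file(records):
    """Count how many IDI errors were logged against each file."""
    return Counter(path for _ts, _node, path in records)


timeline = collate(node_logs)
for path, count in errors_per_file(timeline).most_common():
    print(path, count)
# /ifs/data/a.bin 3
# /ifs/data/b.bin 1
```

`heapq.merge` is used because each node's log is already in time order, so a streaming k-way merge avoids re-sorting the combined list.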

The associated ‘isi_iscan_query’ utility can then be used to parse the log.repo report file and filter by node, time range, or block address (baddr). The syntax for the isi_iscan_query tool is:

```
/usr/sbin/isi_iscan_query filename [FILTER FIELD] [VALUE]

FILTER FIELD:
    node       <logical node number>     e.g. 1, 2, 3, ...
    timerange  <start time> <end time>   e.g. 2020-12-05T17:38:02Z 2020-12-06T17:38:56Z
    baddr      <block address>           e.g. 2,1,185114624:8192
```
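To illustrate the three filters, here is a minimal Python sketch that applies the same node, timerange, and baddr criteria to an in-memory list. Only the filter names and the example values come from the syntax above. The `IdiRecord` type, the record contents, and the inclusive time bounds are assumptions. The block address is treated as an opaque string because its component fields are not described here.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


def parse_ts(text: str) -> datetime:
    """Parse timestamps in the timerange example's form, e.g. 2020-12-05T17:38:02Z."""
    return datetime.strptime(text, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


@dataclass
class IdiRecord:  # hypothetical record shape, not the real log.repo layout
    node: int
    time: datetime
    baddr: str    # kept as an opaque string, e.g. "2,1,185114624:8192"


def query(records: List[IdiRecord], field: str, *values: str) -> List[IdiRecord]:
    """Mimic the three isi_iscan_query filters on in-memory records."""
    if field == "node":
        node = int(values[0])
        return [r for r in records if r.node == node]
    if field == "timerange":
        start, end = parse_ts(values[0]), parse_ts(values[1])
        return [r for r in records if start <= r.time <= end]  # inclusive: an assumption
    if field == "baddr":
        return [r for r in records if r.baddr == values[0]]
    raise ValueError(f"unknown filter field: {field}")


records = [
    IdiRecord(1, parse_ts("2020-12-05T17:38:02Z"), "2,1,185114624:8192"),
    IdiRecord(2, parse_ts("2020-12-06T09:15:00Z"), "3,4,52428800:8192"),
    IdiRecord(3, parse_ts("2020-12-07T01:00:00Z"), "2,1,185114624:8192"),
]
print(len(query(records, "node", "2")))  # 1
print(len(query(records, "timerange", "2020-12-05T17:38:02Z", "2020-12-06T17:38:56Z")))  # 2
print(len(query(records, "baddr", "2,1,185114624:8192")))  # 2
```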