In this second article in the series on data inlining, we’ll shift the focus to monitoring and performance.

Inode inlining can deliver significant storage efficiency gains for data sets comprising large numbers of small files. Prior to OneFS 9.3, each of these files required both a separate inode and its own data blocks.

In terms of latency, write performance for inlined files is typically comparable to, or slightly better than, that of regular files, because OneFS does not have to allocate and protect additional data blocks. The same is true for reads, since OneFS does not have to locate and retrieve any blocks beyond the inode itself. Inlining also frees up space in the OneFS read caching layers as well as on disk, and it requires fewer CPU cycles.

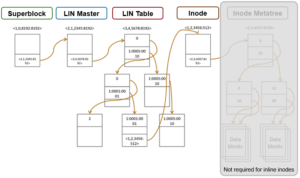

The following diagram illustrates the levels of indirection that a file access request traverses to reach its data. Unlike a standard file, an inlined file skips the later stages of the path, which involve redirection through the inode’s metatree to the remote data blocks.

Access starts with the Superblock, which is located at multiple fixed block addresses on every drive in the cluster. The Superblock contains the address of the LIN Master block, which holds the root of the LIN B+Tree (the LIN table). The LIN B+Tree maps logical inode numbers (LINs) to the actual inode addresses on disk. In the case of an inlined file, the inode also contains the file’s data, which saves the overhead of locating the file’s data blocks and retrieving data from them.
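To make the difference in indirection concrete, here is a toy Python model of the lookup path described above. It is purely illustrative: the `Inode` class, the `read_file` function, and the dictionaries standing in for the LIN tree and the disk are hypothetical simplifications, not OneFS data structures. The model only records which stages a read passes through, so an inlined file stops at the inode while a regular file continues through the metatree to its data blocks.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Inode:
    # Hypothetical model: an inlined file keeps its bytes in the inode itself.
    inlined_data: Optional[bytes] = None
    # A regular file instead points (via its metatree) at separate data blocks.
    block_addrs: List[int] = field(default_factory=list)


def read_file(lin: int,
              lin_tree: Dict[int, Inode],
              disk: Dict[int, bytes]) -> Tuple[bytes, List[str]]:
    """Return the file's data and the stages visited to reach it."""
    # Stages shared by every access: superblock -> LIN master -> LIN B+Tree -> inode.
    path = ["superblock", "LIN master block", "LIN B+Tree", "inode"]
    inode = lin_tree[lin]
    if inode.inlined_data is not None:
        # Inlined file: the data is already in hand, so the walk ends here.
        return inode.inlined_data, path
    # Regular file: follow the metatree out to each remote data block.
    path.append("metatree")
    chunks = []
    for addr in inode.block_addrs:
        path.append(f"data block {addr}")
        chunks.append(disk[addr])
    return b"".join(chunks), path


lin_tree = {1: Inode(inlined_data=b"tiny"), 2: Inode(block_addrs=[100, 101])}
disk = {100: b"not so ", 101: b"tiny"}

for lin in (1, 2):
    data, path = read_file(lin, lin_tree, disk)
    print(lin, data, len(path), "stages")
```

In this toy model the inlined file is resolved in 4 stages and the two-block regular file in 7; real OneFS metatrees and block layouts are, of course, far more involved.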

For hybrid nodes with sufficient SSD capacity, using the metadata-write SSD strategy will automatically place inlined small files on flash media. However, because the SSDs on hybrid nodes default to 512-byte formatting, SSD metadata pools that use the metadata-read or metadata-write strategies need to have the ‘--force-8k-inodes’ flag set in order for files to be inlined. This can be a useful performance configuration for small-file HPC workloads, such as EDA, whose data does not reside on an all-flash tier. Keep in mind, however, that forcing 8KB inodes on a hybrid pool’s SSDs considerably reduces the available inode capacity compared with the default 512-byte inode configuration.
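As a rough back-of-the-envelope sketch of that trade-off, the Python snippet below divides a pool’s capacity by the inode footprint. It deliberately ignores all other metadata, reserves, and protection overhead; the `inode_capacity` helper and its `mirror_count` parameter (the number of inode mirrors stored) are illustrative assumptions, not OneFS settings. Whatever the mirror count, moving from 512-byte to 8KB inodes cuts the number of inodes a given amount of SSD can hold by a factor of 16.

```python
def inode_capacity(pool_bytes: int, inode_size: int, mirror_count: int = 1) -> int:
    """Rough upper bound on how many inodes fit in pool_bytes.

    Ignores all other metadata and free-space reserves. mirror_count is an
    assumed number of inode mirrors; adjust it for your protection level.
    """
    if inode_size <= 0 or mirror_count <= 0:
        raise ValueError("inode_size and mirror_count must be positive")
    return pool_bytes // (inode_size * mirror_count)


TIB = 1 << 40  # 1 TiB of hypothetical SSD metadata capacity
small = inode_capacity(TIB, 512)    # default 512-byte inodes
large = inode_capacity(TIB, 8192)   # forced 8KB inodes
print(small, large, small // large)  # 2147483648 134217728 16
```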

The OneFS ‘isi_drivenum’ CLI command can be used to verify the drive block sizes in a node. For example, the output below is from a PowerScale Gen6 H-series node. It shows drive bay 1 containing an SSD with a 4KB physical and 512-byte logical block size, and bays A to E containing hard disks (HDDs) with both 4KB logical and physical block sizes.

    # isi_drivenum -bz
    Bay 1    Physical Block Size: 4096   Logical Block Size: 512
    Bay 2    Physical Block Size: N/A    Logical Block Size: N/A
    Bay A0   Physical Block Size: 4096   Logical Block Size: 4096
    Bay A1   Physical Block Size: 4096   Logical Block Size: 4096
    Bay A2   Physical Block Size: 4096   Logical Block Size: 4096
    Bay B0   Physical Block Size: 4096   Logical Block Size: 4096
    Bay B1   Physical Block Size: 4096   Logical Block Size: 4096
    Bay B2   Physical Block Size: 4096   Logical Block Size: 4096
    Bay C0   Physical Block Size: 4096   Logical Block Size: 4096
    Bay C1   Physical Block Size: 4096   Logical Block Size: 4096
    Bay C2   Physical Block Size: 4096   Logical Block Size: 4096
    Bay D0   Physical Block Size: 4096   Logical Block Size: 4096
    Bay D1   Physical Block Size: 4096   Logical Block Size: 4096
    Bay D2   Physical Block Size: 4096   Logical Block Size: 4096
    Bay E0   Physical Block Size: 4096   Logical Block Size: 4096
    Bay E1   Physical Block Size: 4096   Logical Block Size: 4096
    Bay E2   Physical Block Size: 4096   Logical Block Size: 4096
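When checking many nodes, a small parser can flag drives whose logical block size is 512 bytes, such as the SSD in bay 1 of the isi_drivenum output. The sketch below is a hypothetical helper written against that sample output: it assumes each record keeps the "Bay <id> Physical Block Size: <n> Logical Block Size: <n>" wording and treats ‘N/A’ (an empty bay) as unknown. Because it matches with `\s+`, it works on both column-aligned and flattened text.

```python
import re
from typing import Dict, List, Optional, Tuple

# Matches one bay record; \s+ tolerates both flattened and column-aligned output.
BAY_RE = re.compile(
    r"Bay\s+(\S+)\s+Physical Block Size:\s+(\S+)\s+Logical Block Size:\s+(\S+)"
)


def _size(value: str) -> Optional[int]:
    # 'N/A' is reported for empty bays.
    return None if value == "N/A" else int(value)


def parse_drivenum(output: str) -> Dict[str, Tuple[Optional[int], Optional[int]]]:
    """Map bay name -> (physical block size, logical block size)."""
    return {bay: (_size(phys), _size(logical))
            for bay, phys, logical in BAY_RE.findall(output)}


def bays_with_small_logical_blocks(drives, limit: int = 4096) -> List[str]:
    """Bays whose logical block size is below `limit` bytes (e.g. 512)."""
    return sorted(bay for bay, (_, logical) in drives.items()
                  if logical is not None and logical < limit)


sample = ("Bay 1 Physical Block Size: 4096 Logical Block Size: 512 "
          "Bay 2 Physical Block Size: N/A Logical Block Size: N/A "
          "Bay A0 Physical Block Size: 4096 Logical Block Size: 4096")
drives = parse_drivenum(sample)
print(bays_with_small_logical_blocks(drives))  # ['1']
```

Note that a 512-byte logical block size only hints at the 512-byte inode default; the inode size actually in use for a pool should still be confirmed with the tools discussed in this article.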

Note that the SSD disk pools in PowerScale hybrid nodes that are configured for the meta-read or meta-write SSD strategies use 512-byte inodes by default. This can save significant space on these pools, which often have limited capacity, but it also prevents data inlining from occurring. By contrast, PowerScale all-flash node pools are configured for 8KB inodes by default.

The OneFS ‘isi get’ CLI command provides a convenient way to verify which inode size is in use in a given node pool. The command’s output includes both the size of the inode mirrors and the inline status of a file.

When it comes to efficiency reporting, OneFS 9.3 provides three CLI tools for validating and reporting the presence and benefits of data inlining, namely:

- The ‘isi statistics data-reduction’ CLI command has been enhanced to report inlined data metrics, including both the capacity saved and an inlined data efficiency ratio:

    # isi statistics data-reduction
                          Recent Writes Cluster Data Reduction
                          (5 mins)
    --------------------- ------------- ----------------------
    Logical data                 90.16G                 18.05T
    Zero-removal saved                0                      -
    Deduplication saved           5.25G                624.51G
    Compression saved             2.08G                303.46G
    Inlined data saved            1.35G                  2.83T
    Preprotected physical        82.83G                 14.32T
    Protection overhead          13.92G                  2.13T
    Protected physical           96.74G                 26.28T
    Zero removal ratio         1.00 : 1                      -
    Deduplication ratio        1.06 : 1               1.03 : 1
    Compression ratio          1.03 : 1               1.02 : 1
    Data reduction ratio       1.09 : 1               1.05 : 1
    Inlined data ratio         1.02 : 1               1.20 : 1
    Efficiency ratio           0.93 : 1               0.69 : 1
    --------------------- ------------- ----------------------

Be aware that the effect of data inlining is not included in the data reduction ratio, because inlining does not actually reduce the data in any way; it simply relocates the data and protects it more efficiently. However, data inlining is included in the overall storage efficiency ratio.

The ‘Inlined data saved’ value is the number of files that have been inlined multiplied by 8KB (the inode size). This value is accounted for separately so that the compression ratio and data reduction ratio remain correct.
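As a quick sanity check of that definition, the sketch below multiplies an inlined-file count by 8KB. Feeding it the ‘Total inlined files’ count from the isi_cstats output in this article reproduces both the ‘2899 GB’ savings reported by isi_cstats and the 2.83T cluster figure in the table above, provided the GB and T units are read as binary (GiB and TiB). That unit interpretation is an inference from the numbers, not something the CLI states, and the `inlined_data_saved` helper is hypothetical.

```python
INODE_SIZE = 8 * 1024  # 8KB inode, which holds the inlined data


def inlined_data_saved(inlined_files: int, inode_size: int = INODE_SIZE) -> int:
    """Bytes credited as 'inlined data saved': file count x inode size."""
    return inlined_files * inode_size


saved = inlined_data_saved(379_948_336)  # 'Total inlined files' from isi_cstats
print(f"{saved / 2**30:.0f} GiB, {saved / 2**40:.2f} TiB")  # 2899 GiB, 2.83 TiB
```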

- The ‘isi_cstats’ CLI command now reports the number of inlined files within /ifs, which is displayed by default in its console output.

    # isi_cstats
    Total files                 : 397234451
    Total inlined files         : 379948336
    Total directories           : 32380092
    Total logical data          : 18471 GB
    Total shadowed data         : 624 GB
    Total physical data         : 26890 GB
    Total reduced data          : 14645 GB
    Total protection data       : 2181 GB
    Total inode data            : 9748 GB
    Current logical data        : 18471 GB
    Current shadowed data       : 624 GB
    Current physical data       : 26878 GB
    Snapshot logical data       : 0 B
    Snapshot shadowed data      : 0 B
    Snapshot physical data      : 32768 B
    Total inlined data savings  : 2899 GB
    Total inlined data ratio    : 1.1979 : 1
    Total compression savings   : 303 GB
    Total compression ratio     : 1.0173 : 1
    Total deduplication savings : 624 GB
    Total deduplication ratio   : 1.0350 : 1
    Total containerized data    : 0 B
    Total container efficiency  : 1.0000 : 1
    Total data reduction ratio  : 1.0529 : 1
    Total storage efficiency    : 0.6869 : 1

    Raw counts
    { type=bsin files=3889 lsize=314023936 pblk=1596633 refs=81840315 data=18449 prot=25474 ibyte=23381504 fsize=8351563907072 iblocks=0 }
    { type=csin files=0 lsize=0 pblk=0 refs=0 data=0 prot=0 ibyte=0 fsize=0 iblocks=0 }
    { type=hdir files=32380091 lsize=0 pblk=35537884 refs=0 data=0 prot=0 ibyte=1020737587200 fsize=0 iblocks=0 }
    { type=hfile files=397230562 lsize=19832702476288 pblk=2209730024 refs=81801976 data=1919481750 prot=285828971 ibyte=9446188553728 fsize=17202141701528 iblocks=379948336 }
    { type=sdir files=1 lsize=0 pblk=0 refs=0 data=0 prot=0 ibyte=32768 fsize=0 iblocks=0 }
    { type=sfile files=0 lsize=0 pblk=0 refs=0 data=0 prot=0 ibyte=0 fsize=0 iblocks=0 }
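The summary ratios in the isi_cstats output can be rebuilt from its GB totals. The formulas in this sketch are inferred from the sample output rather than taken from OneFS documentation: deduplication and compression savings are subtracted first to give the data reduction ratio, inlined savings are then subtracted separately (so they do not affect the data reduction ratio), and storage efficiency is logical data divided by total physical data. With these inputs the results agree with the reported ratios to within the rounding of the whole-GB figures, and the size remaining after all three savings equals the 14645 GB ‘Total reduced data’. The `Totals` class and `efficiency_ratios` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Totals:
    # Values in GB, copied from the isi_cstats output.
    logical: float
    dedupe_saved: float
    compression_saved: float
    inlined_saved: float
    physical: float


def efficiency_ratios(t: Totals) -> Dict[str, float]:
    after_dedupe = t.logical - t.dedupe_saved
    after_compression = after_dedupe - t.compression_saved
    # Inlining is subtracted last and kept out of the data reduction ratio.
    after_inlining = after_compression - t.inlined_saved
    return {
        "deduplication": t.logical / after_dedupe,
        "compression": after_dedupe / after_compression,
        "data_reduction": t.logical / after_compression,
        "inlined_data": after_compression / after_inlining,
        "storage_efficiency": t.logical / t.physical,
        "reduced_data_gb": after_inlining,
    }


totals = Totals(logical=18471, dedupe_saved=624, compression_saved=303,
                inlined_saved=2899, physical=26890)
for name, value in efficiency_ratios(totals).items():
    print(f"{name:>20}: {value:.4f}")
```

The same ordering also fits the ‘Recent Writes’ column of the isi statistics table, where 90.16G minus 5.25G of deduplication and 2.08G of compression savings gives the 82.83G preprotected figure.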

- The ‘isi get’ CLI command can be used to determine whether a file has been inlined. The output reports the file’s logical size, but shows that it consumes zero physical blocks, data blocks, and protection blocks. An ‘INLINED DATA’ attribute further down in the output also confirms that the file is inlined.

    # isi get -DD file1
    * Size:                  2
    * Physical Blocks:       0
    * Phys. Data Blocks:     0
    * Protection Blocks:     0
    * Logical Size:          8192
    PROTECTION GROUPS
    * Dynamic Attributes (6 bytes):
    * ATTRIBUTE              OFFSET SIZE
    Policy Domains           0      6
    INLINED DATA
    0,0,0:8192[DIRTY]#1
    *************************************************
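To script this check across many files, one option is to capture the `isi get -DD` text and test for the two signals described above. The `looks_inlined` function below is a hypothetical heuristic, not a OneFS API: it assumes the ‘Physical Blocks:’ and ‘INLINED DATA’ labels appear as in the sample output, and it only inspects text you pass in rather than running the command itself. The `regular_sample` string is a made-up counter-example, not real CLI output.

```python
import re

# 'Phys. Data Blocks' deliberately does not match this pattern.
PHYS_BLOCKS_RE = re.compile(r"Physical Blocks:\s*(\d+)")


def looks_inlined(isi_get_output: str) -> bool:
    """True if the output shows zero physical blocks and an INLINED DATA attribute."""
    match = PHYS_BLOCKS_RE.search(isi_get_output)
    zero_blocks = match is not None and int(match.group(1)) == 0
    return zero_blocks and "INLINED DATA" in isi_get_output


inlined_sample = ("* Size: 2 * Physical Blocks: 0 * Phys. Data Blocks: 0 "
                  "* Protection Blocks: 0 * Logical Size: 8192 "
                  "INLINED DATA 0,0,0:8192[DIRTY]#1")
regular_sample = "* Size: 131072 * Physical Blocks: 48 * Logical Size: 131072"
print(looks_inlined(inlined_sample), looks_inlined(regular_sample))  # True False
```

Requiring both signals keeps the check conservative: a file with zero physical blocks but no inlined-data attribute is not reported as inlined.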

So, in summary, some considerations and recommended practices for data inlining in OneFS 9.3 include the following:

- Data inlining is opportunistic and is only supported on node pools with 8KB inodes.

- No additional software, hardware, or licenses are required for data inlining.

- There are no CLI or WebUI management controls for data inlining.

- Data inlining is automatically enabled on applicable node pools after an upgrade to OneFS 9.3 is committed.

- However, data inlining only occurs for new writes; OneFS 9.3 does not perform any inlining during the upgrade process. Any applicable small files will instead be inlined upon their first write.

- Since inode inlining is automatically enabled globally on clusters running OneFS 9.3, OneFS will recognize any diskpools with 512 byte inodes and transparently avoid inlining data on them.

- In OneFS 9.3, data inlining will not be performed on regular files during tiering, truncation, upgrade, etc.

- CloudPools Smartlink stubs, sparse files, and writable snapshot files are also not candidates for data inlining in OneFS 9.3.

- OneFS shadow stores will not apply data inlining. As such:

  - Small file packing will be disabled for inlined data files.

  - Cloning will work as expected with inlined data files.

  - Inlined data files will not be deduplicated, and non-inlined data files that have already been deduplicated will not be inlined afterwards.

- Certain operations may cause data inlining to be reversed, such as moving files from an 8KB diskpool to a 512-byte diskpool, forcefully allocating blocks on a file, sparse punching, etc.
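The eligibility rules in this list can be summarized as a simple predicate. This is a hypothetical sketch that only encodes the conditions stated above; the `FileState` fields and the `may_inline` function are illustrative names, and the sketch deliberately omits any size check (whether the data actually fits in the inode), because the exact limit is not covered here. A True result therefore means "not ruled out" rather than "will be inlined", since inlining remains opportunistic.

```python
from dataclasses import dataclass

INLINE_INODE_SIZE = 8192  # inlining requires a disk pool with 8KB inodes


@dataclass
class FileState:
    pool_inode_size: int                  # 8192 or 512, per disk pool
    trigger: str = "write"                # e.g. "write", "tiering", "truncate", "upgrade"
    is_smartlink_stub: bool = False       # CloudPools Smartlink stub
    is_sparse: bool = False
    is_writable_snapshot_file: bool = False
    is_deduplicated: bool = False         # data already held in a shadow store


def may_inline(f: FileState) -> bool:
    """True only if none of the exclusions listed above apply."""
    if f.pool_inode_size != INLINE_INODE_SIZE:
        return False  # pools with 512-byte inodes are skipped
    if f.trigger != "write":
        return False  # no inlining during tiering, truncation, upgrade, etc.
    if f.is_smartlink_stub or f.is_sparse or f.is_writable_snapshot_file:
        return False  # excluded file types
    if f.is_deduplicated:
        return False  # deduplicated files are not inlined afterwards
    return True


print(may_inline(FileState(pool_inode_size=8192)))  # True
print(may_inline(FileState(pool_inode_size=512)))   # False
```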