On the security front, the new OneFS 9.10 release’s payload includes a refinement of the compliance levels for self-encrypting drives within a PowerScale cluster. But before we get into it, first a quick refresher on OneFS Data-at-Rest Encryption, or DARE, and FIPS compliance.

Within the IT industry, compliance with the Federal Information Processing Standards (FIPS) denotes that a product has been certified to meet all the necessary security requirements, as defined by the National Institute of Standards and Technology (NIST).

A FIPS certification is not only mandated by federal agencies and departments, but is also recognized globally as a hallmark of security certification. For organizations that store sensitive data, a FIPS certification may be required by government regulations or industry standards. By opting for drives with a FIPS certification, companies can be assured that the drives meet stringent regulatory requirements. FIPS certification is provided through the Cryptographic Module Validation Program (CMVP), which ensures that products conform to the FIPS 140 security requirements.

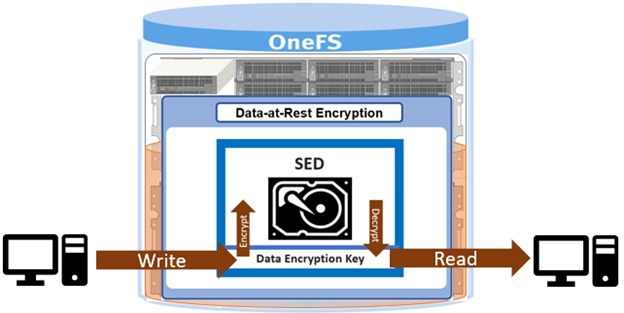

Data-at-Rest Encryption (DARE) is required by many federal and industry regulations to ensure that data is encrypted when it is stored. Dell PowerScale OneFS provides DARE through self-encrypting drives (SEDs) and a key management system. The data on a SED is encrypted, preventing the drive’s data from being accessed if the SED is stolen or removed from the cluster.

Data at rest is inactive data that is physically stored on persistent storage. Encrypting data at rest with cryptography ensures that the data is protected from theft if drives or nodes are removed from a PowerScale cluster. Compared to data in motion, which must be reassembled as it traverses network hops, data at rest is of interest to malicious parties because it is a complete structure: the files have names and require less effort to understand than the smaller, packetized components of a file.

However, because of the way OneFS lays out data across nodes, extracting data from a drive that’s been removed from a PowerScale cluster is not a straightforward process, even without encryption. Each data stripe is composed of data stripe units and protection (parity) units distributed across drives in multiple nodes, and reassembling a stripe requires all of its data units, or enough data and parity units to rebuild any that are missing.
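
To illustrate why a single pulled drive yields only a fragment of a striped file, here is a deliberately simplified sketch. It assumes one XOR parity unit per stripe purely for illustration; OneFS’s actual layout and FEC protection are more involved, and the helper names `stripe` and `rebuild` are hypothetical.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def stripe(data: bytes, n_data: int) -> list[bytes]:
    """Split data into n_data equal-size units (zero-padded) plus one XOR parity unit.

    In this toy model, each returned unit would sit on a drive in a different node.
    """
    unit = -(-len(data) // n_data)  # ceiling division
    padded = data.ljust(unit * n_data, b"\0")
    units = [padded[i * unit:(i + 1) * unit] for i in range(n_data)]
    return units + [reduce(xor_bytes, units)]


def rebuild(units: list[bytes | None]) -> list[bytes]:
    """Recover at most one missing unit (None) by XOR-ing all the surviving units."""
    missing = [i for i, u in enumerate(units) if u is None]
    if len(missing) > 1:
        raise ValueError("single parity can rebuild only one missing unit")
    restored = list(units)
    if missing:
        restored[missing[0]] = reduce(xor_bytes, [u for u in units if u is not None])
    return restored


pieces = stripe(b"quarterly-results.xlsx contents", 4)
stolen = pieces[1]  # a single pulled drive yields one 8-byte fragment
```

The stolen unit holds only a slice of the file, while the cluster itself can still rebuild a lost unit from the survivors.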

PowerScale implements DARE by using self-encrypting drives (SEDs) and AES 256-bit encryption keys. The algorithm and key strength meet the National Institute of Standards and Technology (NIST) standard and FIPS compliance. The OneFS management and system requirements of a DARE cluster are no different from standard clusters.

Note that the recommendation is for a PowerScale DARE cluster to comprise only self-encrypting drive (SED) nodes. However, a cluster mixing SED nodes and non-SED nodes is supported while it transitions to an all-SED cluster.

Once a cluster contains a SED node, only SED nodes can then be added to the cluster. While a cluster contains both SED and non-SED nodes, there is no guarantee that any particular piece of data on the cluster will, or will not, be encrypted. If a non-SED node must be removed from a cluster that contains a mix of SED and non-SED nodes, it should be replaced with an SED node to continue the evolution of the cluster from non-SED to SED. Adding non-SED nodes to an all-SED node cluster is not supported. Mixing SED and non-SED drives in the same node is not supported.
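
The node-mixing rules above can be captured as a small admission check. This is a hypothetical sketch (the `Node` class and the `validate_node` and `can_join` functions are illustrative, not a OneFS API) that encodes only the rules stated here: no mixed drives within a node, and no non-SED nodes once the cluster contains a SED node.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    name: str
    drive_is_sed: list[bool]  # one flag per drive in the node

    @property
    def is_sed(self) -> bool:
        return bool(self.drive_is_sed) and all(self.drive_is_sed)


def validate_node(node: Node) -> None:
    """Reject nodes that mix SED and non-SED drives."""
    if any(node.drive_is_sed) and not all(node.drive_is_sed):
        raise ValueError(f"{node.name}: mixing SED and non-SED drives in one node is not supported")


def can_join(cluster: list[Node], candidate: Node) -> bool:
    """Any node may join a non-SED cluster; once a SED node is present, only SED nodes may."""
    validate_node(candidate)
    cluster_has_sed = any(n.is_sed for n in cluster)
    return candidate.is_sed or not cluster_has_sed
```

Because the check looks at whether *any* SED node is present, a mixed cluster in transition behaves like an all-SED cluster for admission purposes.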

A SED provides full-disk encryption through onboard drive hardware, removing the need for any additional external hardware to encrypt the data on the drive. As data is written to the drive, it is automatically encrypted, and data read from the drive is decrypted. Because a chipset in the drive controls the encryption and decryption processes, encryption is transparent to the host: system performance is not affected, security is enhanced, and there is no dependency on system software.

Controlling access through the drive’s onboard chipset provides security in the event of theft or a software vulnerability, because the data remains accessible only through the drive’s chipset. At initial setup, an SED creates a unique and random key for encrypting data during writes and decrypting data during reads. This data encryption key (DEK) ensures that the data on the drive is always encrypted. Each time data is written to or read from the drive, the DEK is required to encrypt or decrypt the data. If the DEK is not available, all data on the SED is unreadable.
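
To make the DEK’s role concrete, here is a toy drive model. It is a sketch under loud assumptions: `ToySED` is hypothetical, and the SHA-256 keystream stands in for the drive’s AES-256 hardware engine purely for illustration, so it is not secure (among other things, rewriting the same block reuses its keystream). What it does show is the behavior described above: writes are encrypted and reads decrypted transparently with an on-drive DEK, and once the DEK is gone the stored data cannot be read back.

```python
from __future__ import annotations

import hashlib
import secrets


def toy_keystream(key: bytes, length: int) -> bytes:
    """Toy keystream from SHA-256(key || counter). NOT AES-256 and NOT secure."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


class ToySED:
    """Drive model: the controller encrypts on write and decrypts on read with its DEK."""

    def __init__(self) -> None:
        self._dek: bytes | None = secrets.token_bytes(32)  # random DEK created at setup
        self._media: dict[int, bytes] = {}  # ciphertext as it sits on the media

    def _stream(self, lba: int, length: int) -> bytes:
        if self._dek is None:
            raise PermissionError("DEK unavailable: data on this drive is unreadable")
        return toy_keystream(self._dek + lba.to_bytes(8, "big"), length)

    def write(self, lba: int, data: bytes) -> None:
        ks = self._stream(lba, len(data))
        self._media[lba] = bytes(d ^ k for d, k in zip(data, ks))

    def read(self, lba: int) -> bytes:
        stored = self._media[lba]
        ks = self._stream(lba, len(stored))
        return bytes(c ^ k for c, k in zip(stored, ks))

    def discard_dek(self) -> None:
        """Without the DEK, every block on the drive becomes unreadable."""
        self._dek = None
```

For example, after `drive.write(0, b"payroll")` the media holds only ciphertext and `drive.read(0)` returns `b"payroll"`; after `drive.discard_dek()`, the same read raises `PermissionError`.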

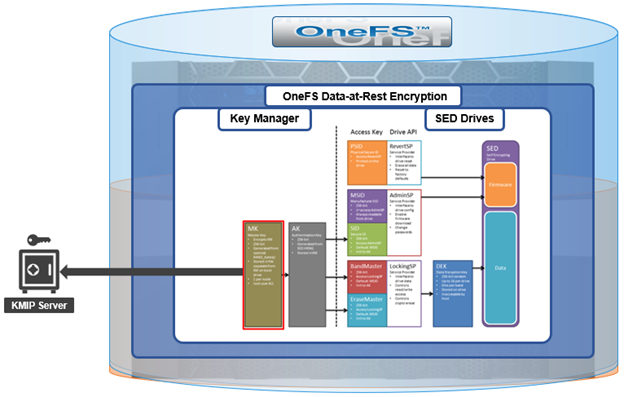

The standard SED encryption is augmented by wrapping the DEK for each SED in an authentication key (AK). The AKs for each drive are held by a key manager (KM), which stores them securely in an encrypted database, the key manager database (KMDB), further preventing unauthorized access. The KMDB is encrypted with a 256-bit universal key (UK).

OneFS also supports an external key manager by using a key management interoperability protocol (KMIP)-compliant key manager server. In this case, the universal key (UK) is stored in a KMIP-compliant server.

Note, however, that PowerScale OneFS releases prior to OneFS 9.2 do not support an external key manager and retain the UK internally on the node.

Further protecting the KMDB, OneFS 9.5 and later releases also provide the ability to rekey the UK – either on-demand or per a configured schedule. This applies to both UKs that are stored on-cluster or on an external KMIP server.

The authentication key (AK) is unique to each SED, and this ensures that OneFS never knows the DEK. If there is a drive theft from a PowerScale node, the data on the SED is useless because the trifecta of the UK, AK, and the DEK, are all required to unlock the drive. If an SED is removed from a node, OneFS automatically deletes the AK. Conversely, when a new SED is added to a node, OneFS automatically assigns a new AK.
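
The layering described above (the UK protecting the KMDB, a per-drive AK stored in the KMDB, and the AK unlocking the DEK inside the drive) can be modeled in a few classes. Everything here is hypothetical: `ToySED`, `ToyKeyManager`, and the `toy_seal`/`toy_open` helpers are illustrative, and the SHA-256/HMAC construction is a stand-in rather than the AES-256 used by the drives or any OneFS code. The sketch also assumes that a UK rekey only re-encrypts the KMDB, leaving AKs and DEKs in place.

```python
from __future__ import annotations

import hashlib
import hmac
import json
import secrets


def _stream(key: bytes, nonce: bytes, length: int) -> bytes:
    blocks = length // 32 + 1
    return b"".join(hashlib.sha256(key + nonce + i.to_bytes(4, "big")).digest()
                    for i in range(blocks))


def toy_seal(key: bytes, plaintext: bytes) -> bytes:
    """Toy authenticated encryption (SHA-256 keystream + HMAC tag). NOT AES; illustration only."""
    nonce = secrets.token_bytes(16)
    body = bytes(p ^ s for p, s in zip(plaintext, _stream(key, nonce, len(plaintext))))
    return nonce + body + hmac.new(key, nonce + body, hashlib.sha256).digest()


def toy_open(key: bytes, sealed: bytes) -> bytes:
    nonce, body, tag = sealed[:16], sealed[16:-32], sealed[-32:]
    expected = hmac.new(key, nonce + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise PermissionError("wrong key")
    return bytes(c ^ s for c, s in zip(body, _stream(key, nonce, len(body))))


class ToySED:
    """The DEK is generated and wrapped inside the drive and never returned to the host."""

    def __init__(self, serial: str) -> None:
        self.serial = serial
        self._wrapped_dek: bytes | None = None

    def take_ownership(self, ak: bytes) -> None:
        dek = secrets.token_bytes(32)          # generated on the drive
        self._wrapped_dek = toy_seal(ak, dek)  # wrapped under the host-supplied AK

    def unlock(self, ak: bytes) -> bool:
        if self._wrapped_dek is None:
            return False
        try:
            toy_open(ak, self._wrapped_dek)    # DEK is recovered only inside the drive
            return True
        except PermissionError:
            return False


class ToyKeyManager:
    """The UK encrypts the KMDB; the KMDB holds one AK per drive serial number."""

    def __init__(self) -> None:
        self._uk = secrets.token_bytes(32)         # on-node, or fetched from a KMIP server
        self._kmdb = toy_seal(self._uk, b"{}")     # encrypted KMDB, initially empty

    def _load(self) -> dict[str, str]:
        return json.loads(toy_open(self._uk, self._kmdb))

    def _store(self, db: dict[str, str]) -> None:
        self._kmdb = toy_seal(self._uk, json.dumps(db).encode())

    def add_drive(self, drive: ToySED) -> None:
        ak = secrets.token_bytes(32)               # a fresh AK for every new SED
        db = self._load()
        db[drive.serial] = ak.hex()
        self._store(db)
        drive.take_ownership(ak)

    def remove_drive(self, drive: ToySED) -> None:
        db = self._load()
        db.pop(drive.serial, None)                 # AK is deleted when the SED leaves
        self._store(db)

    def unlock(self, drive: ToySED) -> bool:
        ak_hex = self._load().get(drive.serial)    # needs the UK to read the KMDB
        return ak_hex is not None and drive.unlock(bytes.fromhex(ak_hex))

    def rekey_uk(self) -> None:
        """Replace the UK and re-encrypt the KMDB; AKs and DEKs are left untouched."""
        db = self._load()
        self._uk = secrets.token_bytes(32)
        self._store(db)
```

The design choice worth noting is that `ToyKeyManager` never sees a DEK: it only presents an AK, and the drive decides whether that AK unwraps its DEK, mirroring the requirement that DEKs never leave the SED boundary.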

With the PowerScale H and A-series chassis-based platforms, the KMDB is stored in the node’s NVRAM, and a copy is also placed in the partner node’s NVRAM. For PowerScale F-series nodes, the KMDB is stored in the trusted platform module (TPM). Using the KM and AKs ensures that the DEKs never leave the SED boundary, as required for FIPS compliance. In contrast, legacy Gen 5 Isilon nodes store the KMDB on both compact flash drives in each node.

The key manager uses FIPS-validated cryptography when the STIG hardening profile is applied to the cluster.

The KM and KMDB are not accessible through any CLI command or script, which protects them from compromise through the cluster’s management interfaces. The KMDB stores only the local drives’ AKs in Gen 5 nodes, and the AKs of both the local and the buddy node’s drives in Gen 6 nodes. On PowerEdge-based nodes, the KMDB stores only the AKs of local drives. The KM also encrypts the AKs so that they are never stored in plain text.

OneFS external key management operates by storing the 256-bit universal key (UK) in a key management interoperability protocol (KMIP)-compliant key manager server.

In order to store the UK on a KMIP server, a PowerScale cluster requires the following:

- OneFS 9.2 (or later) cluster with SEDs
- KMIP-compliant server:
  - KMIP 1.2 or later
  - KMIP Storage Array with SEDs profile 1.0 or later
  - KMIP server host/port information
- X.509 PKI for TLS mutual authentication:
  - Certificate authority bundle
  - Client certificate and private key
- Administrator privilege: ISI_PRIV_KEY_MANAGER
- Network connectivity from each node in the cluster to the KMIP server, using an interface in a statically assigned network pool. For SED drives to be unlocked, each node contacts the KMIP server at bootup to obtain the UK; if it cannot, the node fails to boot.
- Not All Nodes On Network (NANON) and Not All Nodes On All Networks (NANOAN) clusters are not supported
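
The prerequisite list above lends itself to a pre-flight checklist. This is a hypothetical sketch: `KmipPlan` and `missing_prerequisites` are illustrative names, not OneFS commands, and the check only compares the values you describe against the stated requirements; it does not contact a KMIP server or query the cluster.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class KmipPlan:
    """Hypothetical description of a planned external key manager setup."""
    onefs_version: tuple[int, int]
    cluster_has_seds: bool
    kmip_version: tuple[int, int]
    storage_array_sed_profile: tuple[int, int] | None  # None if the server lacks the profile
    server_host: str
    server_port: int
    ca_bundle_path: str
    client_cert_path: str
    client_key_path: str
    has_key_manager_priv: bool  # ISI_PRIV_KEY_MANAGER
    node_reachable: dict[int, bool] = field(default_factory=dict)  # LNN -> reachable via static pool
    nanon_or_nanoan: bool = False


def missing_prerequisites(p: KmipPlan) -> list[str]:
    """Return the unmet items from the prerequisite list; an empty list means all are met."""
    problems = []
    if p.onefs_version < (9, 2) or not p.cluster_has_seds:
        problems.append("requires a OneFS 9.2 (or later) cluster with SEDs")
    if p.kmip_version < (1, 2):
        problems.append("KMIP server must support KMIP 1.2 or later")
    if p.storage_array_sed_profile is None or p.storage_array_sed_profile < (1, 0):
        problems.append("KMIP server needs the storage array with SEDs profile 1.0 or later")
    if not p.server_host or not 0 < p.server_port < 65536:
        problems.append("KMIP server host/port information is missing")
    if not (p.ca_bundle_path and p.client_cert_path and p.client_key_path):
        problems.append("CA bundle, client certificate, and private key are all required")
    if not p.has_key_manager_priv:
        problems.append("administrator needs ISI_PRIV_KEY_MANAGER")
    unreachable = sorted(lnn for lnn, ok in p.node_reachable.items() if not ok)
    if not p.node_reachable or unreachable:
        problems.append(f"every node must reach the KMIP server; unreachable: {unreachable}")
    if p.nanon_or_nanoan:
        problems.append("NANON and NANOAN clusters are not supported")
    return problems
```

Each field maps onto one bullet above, so an empty result simply means nothing on the list is obviously missing.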

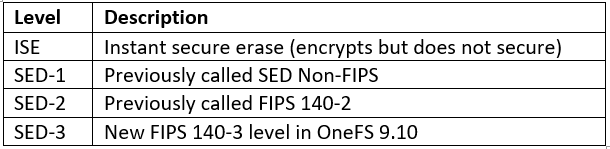

As mentioned earlier, the drive encryption levels are clarified in OneFS 9.10. There are three levels of self-encrypting drives, each now designated with a ‘SED-‘ prefix, in addition to the standard ISE (instant secure erase) drives.

These OneFS 9.10 designations include SED level 1, previously known as SED non-FIPS; SED level 2, formerly FIPS 140-2; and SED level 3, which denotes FIPS 140-3 compliance.
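
Because scripts and reports may carry either the old or the new wording, a small lookup can normalize them. The mapping below is a sketch: `SedLevel` and `normalize_level` are hypothetical, and treating the per-drive label `SED-FIPS-140-2` (which appears in the drive output later in this post) as SED level 2 is an assumption based on its name.

```python
from __future__ import annotations

from enum import IntEnum


class SedLevel(IntEnum):
    SED_1 = 1  # formerly "SED non-FIPS"
    SED_2 = 2  # formerly "FIPS 140-2"
    SED_3 = 3  # FIPS 140-3


# Hypothetical lookup: pre-9.10 wording and 9.10 labels mapped to one level.
_ALIASES = {
    "sed non-fips": SedLevel.SED_1,
    "sed-1": SedLevel.SED_1,
    "fips 140-2": SedLevel.SED_2,
    "sed-fips-140-2": SedLevel.SED_2,  # assumption: label seen in per-drive output
    "sed-2": SedLevel.SED_2,
    "fips 140-3": SedLevel.SED_3,
    "sed-3": SedLevel.SED_3,
}


def normalize_level(label: str) -> SedLevel:
    """Map a legacy or OneFS 9.10 compliance label onto a single SedLevel."""
    try:
        return _ALIASES[label.strip().lower()]
    except KeyError:
        raise ValueError(f"unrecognized SED compliance label: {label!r}") from None
```

Using an `IntEnum` keeps the levels ordered, so normalized labels can be compared directly.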

A node’s SED level can be verified via the ‘isi status’ CLI command output. For example, the following F710 node output indicates full SED level 3 (FIPS 140-3) compliance:

    # isi status --node 1
    Node LNN: 1
    Node ID: 1
    Node Name: tme-f710-1-1
    Node IP Address: 10.1.10.21
    Node Health: OK
    Node Ext Conn: C
    Node SN: DT10004
    SED Compliance Level: SED-3
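
When checking many nodes, the ‘isi status’ output can be scraped programmatically. A minimal sketch, assuming the `Key: Value` layout shown above; `parse_fields` and `node_sed_level` are hypothetical helpers, and the subprocess call only works when run on a cluster node where the `isi` CLI is available.

```python
from __future__ import annotations

import subprocess

SAMPLE = """\
Node LNN: 1
Node ID: 1
Node Name: tme-f710-1-1
Node IP Address: 10.1.10.21
Node Health: OK
Node Ext Conn: C
Node SN: DT10004
SED Compliance Level: SED-3
"""


def parse_fields(text: str) -> dict[str, str]:
    """Turn 'Key: Value' lines into a dict, splitting on the first colon only."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def node_sed_level(lnn: int) -> str:
    """Run 'isi status --node <lnn>' on a cluster node and return its SED compliance level."""
    out = subprocess.run(["isi", "status", "--node", str(lnn)],
                         capture_output=True, text=True, check=True).stdout
    return parse_fields(out)["SED Compliance Level"]


print(parse_fields(SAMPLE)["SED Compliance Level"])  # SED-3
```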

Similarly, the SED compliance level can be queried for individual drives with the following CLI syntax:

    # isi device drive view [drive_bay_number] | grep -i compliance
    SED Compliance Level: SED-FIPS-140-2
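
The per-drive query can be extended into a simple audit that flags drives whose reported level differs from what the node should have. This is a sketch under stated assumptions: `drive_level`, `audit`, and `view_drive` are hypothetical helpers, bays are passed as strings, and the regular expression assumes the `SED Compliance Level: <label>` line shown above.

```python
from __future__ import annotations

import re
import subprocess

LEVEL_RE = re.compile(r"SED Compliance Level:\s*(\S+)", re.IGNORECASE)


def drive_level(view_output: str) -> str | None:
    """Extract the compliance label from 'isi device drive view' text, if present."""
    m = LEVEL_RE.search(view_output)
    return m.group(1) if m else None


def audit(outputs: dict[str, str], expected: str) -> dict[str, str | None]:
    """Return the bays whose reported level differs from the expected label."""
    found = {bay: drive_level(text) for bay, text in outputs.items()}
    return {bay: level for bay, level in found.items() if level != expected}


def view_drive(bay: str) -> str:
    """Fetch one drive's details; only works on a cluster node with the isi CLI."""
    return subprocess.run(["isi", "device", "drive", "view", bay],
                          capture_output=True, text=True, check=True).stdout
```

A drive with no compliance line at all is reported with `None`, so it is flagged rather than silently passed.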

Additionally, the ‘isi_psi_tool’ CLI utility can provide more detail on the required compliance level of the data and journal drives, as well as the node’s platform, memory, and network configuration. For example, for this F710 node with SED-3 SSDs:

    # /usr/bin/isi_hwtools/isi_psi_tool -v
    {
        "DRIVES": [
            "DRIVES_10x3840GB(pcie_ssd_sed3)"
        ],
        "JOURNAL": "JOURNAL_SDPM",
        "MEMORY": "MEMORY_DIMM_16x32GB",
        "NETWORK": [
            "NETWORK_100GBE_PCI_SLOT1",
            "NETWORK_100GBE_PCI_SLOT3",
            "NETWORK_1GBE_PCI_LOM"
        ],
        "PLATFORM": "PLATFORM_PE",
        "PLATFORM_MODEL": "MODEL_F710",
        "PLATFORM_TYPE": "PLATFORM_PER660"
    }
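
The `isi_psi_tool -v` output is JSON, so the drive specification can be decoded with the standard library. The naming pattern in `DRIVE_RE` (`DRIVES_<count>x<size>GB(<media>_sed<level>)`) is an assumption inferred from the single F710 sample above, and `drive_summary` is a hypothetical helper; drive entries that do not match are reported with `sed_level` set to `None` rather than guessed.

```python
from __future__ import annotations

import json
import re

PSI_SAMPLE = """{
  "DRIVES": ["DRIVES_10x3840GB(pcie_ssd_sed3)"],
  "JOURNAL": "JOURNAL_SDPM",
  "MEMORY": "MEMORY_DIMM_16x32GB",
  "NETWORK": ["NETWORK_100GBE_PCI_SLOT1", "NETWORK_100GBE_PCI_SLOT3", "NETWORK_1GBE_PCI_LOM"],
  "PLATFORM": "PLATFORM_PE",
  "PLATFORM_MODEL": "MODEL_F710",
  "PLATFORM_TYPE": "PLATFORM_PER660"
}"""

# Assumed naming pattern, inferred from one sample: DRIVES_<count>x<size>GB(<media>_sed<level>)
DRIVE_RE = re.compile(r"DRIVES_(\d+)x(\d+)GB\((\w+?)_sed(\d)\)")


def drive_summary(psi_json: str) -> list[dict]:
    """Decode each DRIVES entry into count, size, media type, and SED level."""
    info = json.loads(psi_json)
    summary = []
    for spec in info.get("DRIVES", []):
        m = DRIVE_RE.fullmatch(spec)
        if m is None:
            summary.append({"spec": spec, "sed_level": None})  # unrecognized format
            continue
        count, size_gb, media, level = m.groups()
        summary.append({"spec": spec, "count": int(count), "size_gb": int(size_gb),
                        "media": media, "sed_level": int(level)})
    return summary


print(drive_summary(PSI_SAMPLE)[0]["sed_level"])  # 3
```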

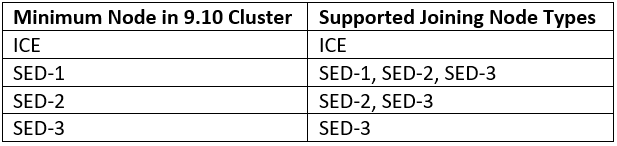

Beyond that, OneFS 9.10 retains the existing node security behavior with regard to drive capability, including the logic that prevents lesser-security nodes from joining higher-security clusters, and the OneFS 9.10 supported SED node matrix reflects this.

So, for example, a OneFS 9.10 cluster comprising SED-3 nodes would prevent any lesser-security nodes (i.e., SED-2 or below) from joining.
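
The join rule in this example reduces to an ordering comparison. This sketch models only that stated rule, not the full OneFS 9.10 matrix; `SedLevel`, `may_join`, and `admit` are hypothetical, and ranking non-SED nodes below SED-1 is an assumption.

```python
from __future__ import annotations

from enum import IntEnum


class SedLevel(IntEnum):
    NON_SED = 0  # assumption: non-SED nodes rank below every SED level
    SED_1 = 1
    SED_2 = 2
    SED_3 = 3


def may_join(cluster_level: SedLevel, candidate: SedLevel) -> bool:
    """A node with a lower SED level than the cluster is refused."""
    return candidate >= cluster_level


def admit(cluster_level: SedLevel, candidates: dict[str, SedLevel]) -> dict[str, bool]:
    """Evaluate several would-be joiners against the same cluster level."""
    return {name: may_join(cluster_level, lvl) for name, lvl in candidates.items()}
```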

In addition to FIPS 140-3 data drives, the OneFS SED-3 designation also requires FIPS 140-3 compliant flash media for the OneFS filesystem journal. The presence of any incorrect drives (data or journal) in a node results in ‘wrong type’ errors, as with pre-OneFS 9.10 behavior. Additionally, FIPS 140-3 (SED-3) requires a node’s drives not only to be secure but also to be actively monitored. OneFS uses its hardware monitor (‘hardware mon’) to track drive state, checking for the correct security state as well as any unexpected state transitions. If the hardware monitor detects either condition, it triggers a CELOG alert and puts the node into a read-only state. This will be covered in more detail in the next blog post in this series.
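
The two checks described above, correct media for both data and journal drives plus ongoing state monitoring, can be sketched as follows. All names here are hypothetical (`Drive`, `wrong_type_drives`, `ToyHardwareMonitor`), as are the state strings; the real hardware monitor and its CELOG integration are not modeled, and recording an alert string stands in for raising a CELOG event.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Drive:
    bay: int
    role: str   # "data" or "journal"
    level: int  # SED level of the media; 3 corresponds to FIPS 140-3


def wrong_type_drives(drives: list[Drive], required_level: int = 3) -> list[int]:
    """Bays whose data or journal media does not match the node's required SED level."""
    return [d.bay for d in drives if d.level != required_level]


@dataclass
class ToyHardwareMonitor:
    """Compares each drive's observed security state with the expected one on every poll."""
    expected_state: dict[int, str]  # bay -> expected security state (hypothetical labels)
    alerts: list[str] = field(default_factory=list)
    read_only: bool = False

    def poll(self, observed: dict[int, str]) -> None:
        for bay, expected in self.expected_state.items():
            state = observed.get(bay)
            if state != expected:
                # Stand-in for a CELOG alert in this sketch.
                self.alerts.append(f"bay {bay}: expected {expected!r}, observed {state!r}")
                self.read_only = True  # node drops to read-only on any violation
```

Treating the journal like any other drive in `wrong_type_drives` reflects the point that SED-3 applies to the journal media as well as the data drives.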