Over the last couple of decades, the ubiquitous network file system (NFS) protocol has become nearly synonymous with network attached storage. Since its debut in 1984, the technology has matured to such an extent that NFS is now deployed by organizations large and small across a broad range of critical production workloads. Currently, NFS is the OneFS file protocol with the most stringent performance requirements, serving key workloads such as EDA, artificial intelligence, 8K media editing and playback, financial services, and other branches of commercial HPC.

At its core, NFS over Remote Direct Memory Access (RDMA), as specified in RFC 8267, enables data to be transferred between storage and clients with better performance and lower resource utilization than standard TCP transport. Network adapters with RDMA support, known as RNICs, allow direct data transfer with minimal CPU involvement, yielding increased throughput and reduced latency. For applications accessing large datasets on remote NFS storage, the benefits of RDMA include:

| Benefit | Detail |
|---|---|
| Low CPU utilization | Leaves more CPU cycles for other applications during data transfer. |
| Increased throughput | Utilizes high-speed networks to transfer large data amounts at line speed. |
| Low latency | Provides fast responses, making remote file storage feel more like directly attached storage. |
| Emerging technologies | Provides support for technologies such as NVIDIA’s GPUDirect, which offloads I/O directly to the client’s GPU. |

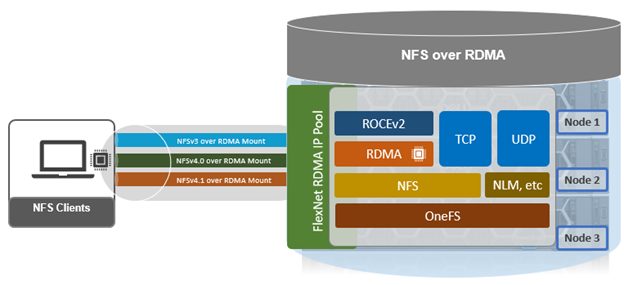

Network file system over remote direct memory access, or NFSoRDMA, provides remote data transfer directly to and from memory, without CPU intervention. PowerScale clusters have supported NFSv3 over RDMA, along with its associated performance benefits, since OneFS 9.2. Enabling this functionality allows the cluster to perform memory-to-memory data transfers over high-speed networks, bypassing the CPU for data movement and helping both reduce latency and improve throughput.

Because OneFS already had support for NFSv3 over RDMA, extending this to NFSv4.x in OneFS 9.8 focused on two primary areas:

- Providing support for NFSv4 compound operations.

- Enabling native handling of the RDMA headers which NFSv4.1 uses.

With OneFS 9.8 and later, clients can therefore connect to PowerScale clusters over RDMA using any NFS version from v3 to v4.2, in addition to TCP for all versions and UDP for NFSv3:

| Protocol | RDMA | TCP | UDP |
|---|---|---|---|
| NFS v3 | Yes | Yes | Yes |
| NFS v4.0 | Yes | Yes | No |
| NFS v4.1 | Yes | Yes | No |
| NFS v4.2 | Yes | Yes | No |
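The version and transport matrix above lends itself to a simple lookup, for instance in a client-side pre-flight script. The following is a minimal sketch; the function name ‘nfs_transport_supported’ is hypothetical and merely encodes the table for OneFS 9.8 and later.

```bash
# Hypothetical helper that mirrors the OneFS 9.8+ support matrix above.
# Usage: nfs_transport_supported <nfs_version> <transport>
# Returns 0 if the combination is listed as supported, 1 otherwise.
nfs_transport_supported() {
    case "$1:$2" in
        3:rdma|3:tcp|3:udp)          return 0 ;;  # NFSv3: RDMA, TCP, and UDP
        4.[012]:rdma|4.[012]:tcp)    return 0 ;;  # NFSv4.x: RDMA and TCP only
        *)                           return 1 ;;  # everything else, e.g. NFSv4.x over UDP
    esac
}

# Example: check a planned mount before attempting it.
if nfs_transport_supported 4.1 rdma; then
    echo "NFSv4.1 over RDMA: supported"
fi
```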

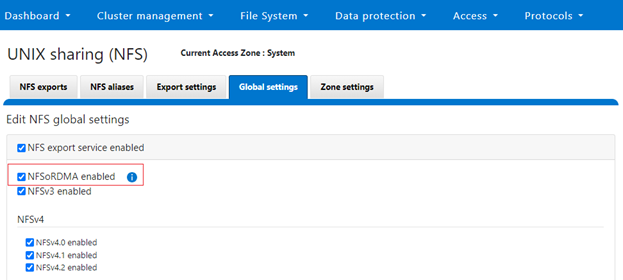

The NFS over RDMA global configuration options in both the WebUI and CLI have also been simplified and made version-agnostic, removing the need to specify a particular NFS version:

And from the CLI:

    # isi nfs settings global modify --nfs-rdma-enabled=true

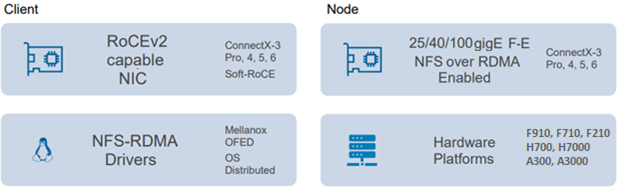

A PowerScale cluster and client must meet certain prerequisite criteria in order to use NFS over RDMA.

Specifically, from the cluster side:

| Requirement | Details |
|---|---|
| Node type | F210, F200, F600, F710, F900, F910, F800, F810, H700, H7000, A300, A3000 |
| Network card (NIC) | NVIDIA Mellanox ConnectX-3 Pro, ConnectX-4, ConnectX-5, and ConnectX-6 network adapters supporting 25/40/100 GigE connectivity. |
| OneFS version | OneFS 9.2 or later for NFSv3 over RDMA, and OneFS 9.8 or later for NFSv4.x over RDMA. |
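As a rough pre-flight check, the node-type and OneFS-version requirements can be expressed as a small shell function. This is an illustrative sketch only: ‘node_supports_nfs_rdma’ and ‘version_at_least’ are hypothetical helpers, the node model and version strings are supplied by the caller, and the NIC requirement is not checked.

```bash
# Illustrative pre-flight check against the cluster-side requirements above.
# Hypothetical helpers; the NIC requirement is NOT checked here.

# Return 0 if dotted version $1 (e.g. "9.8.0.0") is at least major.minor $2 (e.g. "9.8").
version_at_least() {
    local have_maj have_min want_maj want_min
    IFS=. read -r have_maj have_min _ <<< "$1"
    IFS=. read -r want_maj want_min _ <<< "$2"
    have_min=${have_min:-0}
    want_min=${want_min:-0}
    (( have_maj > want_maj || (have_maj == want_maj && have_min >= want_min) ))
}

# Usage: node_supports_nfs_rdma <node_model> <onefs_version> <nfs_major: 3 or 4>
node_supports_nfs_rdma() {
    local model="$1" onefs="$2" nfs="$3" min_onefs
    case "$model" in
        F210|F200|F600|F710|F900|F910|F800|F810|H700|H7000|A300|A3000) ;;
        *) return 1 ;;                 # node type not on the supported list
    esac
    case "$nfs" in
        3) min_onefs=9.2 ;;            # NFSv3 over RDMA: OneFS 9.2 or later
        4) min_onefs=9.8 ;;            # NFSv4.x over RDMA: OneFS 9.8 or later
        *) return 1 ;;
    esac
    version_at_least "$onefs" "$min_onefs"
}
```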

Similarly, the OneFS NFSoRDMA implementation requires any NFS clients using RDMA to support RoCEv2. Clients may be either VMs on a hypervisor with RDMA network interfaces or bare-metal hosts with RDMA NICs. OS-wise, any Linux kernel supporting NFSv4.x and RDMA (kernel version 5.3 or later) can be used, but RDMA-related packages such as ‘rdma-core’ and ‘libibverbs-utils’ also need to be installed. Package installation is handled via the Linux distribution’s native package manager.

| Linux Distribution | Package Manager | Package Utility |
|---|---|---|
| OpenSUSE | RPM | Zypper |
| RHEL | RPM | Yum |
| Ubuntu | Deb | Apt-get / Dpkg |

For example, on an OpenSUSE client:

    # zypper install rdma-core libibverbs-utils
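The distribution-to-utility mapping in the table above can be captured in a small helper. This is a hypothetical sketch (the ‘rdma_install_cmd’ name is illustrative) that only prints the command rather than running it. Package names are passed in because they may differ between distributions; Debian-based distributions, for example, ship the verbs utilities as ‘ibverbs-utils’.

```bash
# Hypothetical helper: print (not run) the install command for the RDMA client
# packages, using the package utility listed for each distribution above.
# Usage: rdma_install_cmd <distro_id> <package>...
rdma_install_cmd() {
    local distro="$1"
    shift
    case "$distro" in
        opensuse*) echo "zypper install $*" ;;
        rhel*)     echo "yum install $*" ;;
        ubuntu*)   echo "apt-get install $*" ;;
        *)         echo "no package utility known for: $distro" >&2; return 1 ;;
    esac
}

rdma_install_cmd opensuse-leap rdma-core libibverbs-utils
```

On most distributions, the ‘ID’ field of /etc/os-release (such as ‘opensuse-leap’, ‘rhel’, or ‘ubuntu’) could supply the first argument.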

Additional client configuration is also required, and this procedure is covered in detail later in this series.
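On the client, the kernel guideline from the requirements above can be checked up front. The sketch below is hypothetical (‘kernel_meets_nfs_rdma_min’ is not a standard tool) and only compares the major and minor numbers of a ‘uname -r’ string against 5.3; it does not verify the RDMA packages or RoCEv2 hardware.

```bash
# Hypothetical client-side check of the "Linux kernel 5.3 or later" guideline.
# Compares only major.minor of a `uname -r` style string; it does not check
# for RDMA packages or RoCEv2-capable hardware.
kernel_meets_nfs_rdma_min() {
    local release="${1:-$(uname -r)}" major minor
    IFS=.- read -r major minor _ <<< "$release"
    (( major > 5 || (major == 5 && minor >= 3) ))
}

if kernel_meets_nfs_rdma_min; then
    echo "Kernel $(uname -r) meets the 5.3+ guideline"
else
    echo "Kernel $(uname -r) is older than 5.3"
fi
```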

In addition to a new ‘nfs-rdma-enabled’ global NFS setting (which deprecates the prior ‘nfsv3-rdma-enabled’ setting), OneFS 9.8 also adds a new ‘nfs-rroce-only’ network pool setting. This allows the creation of an RDMA-only network pool that can contain only RDMA-capable interfaces. For example:

    # isi network pools modify <pool_id> --nfs-rroce-only true

This is ideal for NFS failover purposes because it can ensure that a dynamic pool will only fail over to an RDMA-capable interface.
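Conceptually, the RDMA-only pool acts as a membership filter: because non-RDMA interfaces are never admitted to the pool, any failover target drawn from it is RDMA-capable. The sketch below models that idea only; it is not OneFS code, and the ‘name:rdma’ / ‘name:tcp’ interface notation and the ‘failover_candidates’ function are hypothetical.

```bash
# Conceptual model only (not OneFS code). Interfaces are written as
# hypothetical "name:rdma" or "name:tcp" strings. When the pool is RDMA-only,
# non-RDMA interfaces are never members, so they can never be failover targets.
# Usage: failover_candidates <rroce_only: true|false> <iface>...
failover_candidates() {
    local rroce_only="$1" entry
    shift
    for entry in "$@"; do
        if [ "$rroce_only" = true ] && [ "${entry##*:}" != rdma ]; then
            continue                   # not admitted to an RDMA-only pool
        fi
        echo "${entry%%:*}"
    done
}

failover_candidates true nic1:rdma nic2:tcp nic3:rdma   # prints nic1 and nic3
```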

OneFS 9.8 also introduces a new NFS over RDMA CELOG event:

    # isi event types list | grep -i rdma
    400140003  SW_NFS_CLUSTER_NOT_RDMA_CAPABLE  400000000  To use the NFS-over-RDMA feature, the cluster must have an RDMA-capable front-end Network Interface Card.

This event fires if the cluster transitions from being RDMA-capable to not being RDMA-capable, or if an attempt is made to enable RDMA on a non-capable cluster. The previous ‘SW_NFSV3_CLUSTER_NOT_RDMA_CAPABLE’ event from OneFS 9.7 and earlier is now deprecated.
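The two conditions under which the event fires can be summarized as a simple predicate. This is an illustrative model of the behavior described above, not OneFS's implementation; ‘should_raise_not_rdma_capable’ and its true/false arguments are hypothetical.

```bash
# Conceptual sketch (not OneFS code) of when SW_NFS_CLUSTER_NOT_RDMA_CAPABLE
# would fire, per the description above. Arguments are "true" or "false":
#   $1 cluster was RDMA-capable, $2 cluster is RDMA-capable now,
#   $3 an attempt is being made to enable NFS over RDMA.
should_raise_not_rdma_capable() {
    local was="$1" now="$2" enabling="$3"
    # Case 1: the cluster transitions from RDMA-capable to not capable.
    if [ "$was" = true ] && [ "$now" = false ]; then return 0; fi
    # Case 2: enabling RDMA is attempted on a cluster that is not capable.
    if [ "$enabling" = true ] && [ "$now" = false ]; then return 0; fi
    return 1
}
```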

When it comes to TCP/UDP port requirements for NFS over RDMA, any environment with firewalls and/or packet filtering deployed should ensure that the following ports are open between the PowerScale cluster and its NFS client(s):

| Port | Description |
|---|---|
| 4791 | RoCEv2 (UDP) for RDMA payload encapsulation. |
| 300 | Used by NFSv3 mount service. |
| 302 | Used by NFSv3 network status monitor (NSM). |
| 304 | Used by NFSv3 network lock manager (NLM). |
| 111 | RPC portmapper for locating services like NFS and mountd. |
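When auditing a firewall, one way to spot gaps is to compare its allow-list against the ports above. The sketch below is hypothetical: how the allow-list is obtained depends on the firewall in use, so it is simply passed in as arguments, and the TCP/UDP distinction (port 4791 is UDP) is not modeled.

```bash
# Hypothetical audit helper: print each port from the table above that is
# missing from the allow-list given as arguments. Protocol (TCP vs UDP) is
# not modeled; remember that 4791 is RoCEv2 over UDP.
REQUIRED_NFS_RDMA_PORTS="4791 300 302 304 111"

missing_nfs_rdma_ports() {
    local port allowed found
    for port in $REQUIRED_NFS_RDMA_PORTS; do
        found=no
        for allowed in "$@"; do
            if [ "$allowed" = "$port" ]; then found=yes; break; fi
        done
        if [ "$found" = no ]; then echo "$port"; fi
    done
}

missing_nfs_rdma_ports 111 300 2049   # prints 4791, 302, and 304
```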

NFSv4 over RDMA does not add any new ports, external interfaces, or outside interactions to OneFS, and RDMA should be considered neither more nor less secure than any other transport type. For maximum security, NFSv4 over RDMA can be configured to use centralized authentication such as Kerberos.

Telemetry-wise, the ‘isi statistics’ configuration in OneFS 9.8 includes a new ‘nfs4rdma’ protocol option for NFSv4, in addition to the legacy ‘nfsrdma’ option, where ‘nfs4rdma’ covers all of the NFSv4.0, 4.1, and 4.2 statistics.

The new NFSv4 over RDMA CLI statistics options in OneFS 9.8 include:

| Command Syntax | Description |
|---|---|
| isi statistics client list --protocols=nfs4rdma | Display NFSv4oRDMA cluster usage statistics organized by cluster hosts and users. |
| isi statistics protocol list --protocols=nfs4rdma | Display cluster usage statistics for NFSv4oRDMA. |
| isi statistics pstat list --protocol=nfs4rdma | Generate detailed NFSv4oRDMA statistics along with CPU, OneFS, network, and disk statistics. |
| isi statistics workload list --dataset= --protocols=nfs4rdma | Display NFSv4oRDMA workload statistics for the specified dataset(s). |

For example:

    # isi statistics client list --protocols nfs4rdma
       Ops      In    Out  TimeAvg  Node  Proto     Class           UserName  LocalName   RemoteName
    ------------------------------------------------------------------------------------------------------------------
     629.8   13.4k  62.1k    711.0     8  nfs4rdma  namespace_read  user_55   10.2.50.65  10.2.50.165
     605.6   16.9k  59.5k    594.9     4  nfs4rdma  namespace_read  user_254  10.2.50.66  10.2.50.166
     451.0    3.7M  41.5k   1948.5     1  nfs4rdma  write           user_74   10.2.50.72  10.2.50.172
     240.7  662.8k  18.1k    279.4     8  nfs4rdma  create          user_55   10.2.50.65  10.2.50.165
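Output like this can be post-processed with standard tools. The following is a minimal sketch that totals the Ops column per node; it assumes the column layout shown above (Ops in the first field, Node in the fifth) and that Ops is a plain number, and the ‘ops_per_node’ name is hypothetical.

```bash
# Minimal sketch: total the Ops column per node from
# `isi statistics client list --protocols nfs4rdma` output on stdin.
# Assumes the layout shown above (Ops = field 1, Node = field 5) and plain
# numeric Ops values; header, separator, or suffixed rows are skipped.
ops_per_node() {
    awk '$1 ~ /^[0-9.]+$/ && $5 ~ /^[0-9]+$/ { ops[$5] += $1 }
         END { for (n in ops) printf "node %s: %.1f ops\n", n, ops[n] }' |
        sort -k2,2n
}

# On a cluster node:
#   isi statistics client list --protocols nfs4rdma | ops_per_node
```

For the sample output above, this would report 451.0 ops for node 1, 605.6 for node 4, and 870.5 for node 8.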

Additionally, session-level visibility and further metrics are available from the ‘isi_nfs4mgmt’ utility, which provides insight into a client’s cache state. From the server’s perspective, the command output shows which clients are connected via RDMA or TCP, along with their NFS version. For example:

    # isi_nfs4mgmt
    ID                   Vers  Conn  SessionId  Client Address  Port   O-Owners  Opens  Handles  L-Owners
    1196363351478045825  4.0   tcp   -          10.1.100.110    856    1         7      10       0
    1196363351478045826  4.0   tcp   -          10.1.100.112    872    0         0      0        0
    2940493934191674019  4.2   rdma  3          10.2.50.227     40908  0         0      0        0
    2940493934191674022  4.1   rdma  5          10.2.50.224     60152  0         0      0        0

The output above indicates two NFSv4.0 clients over TCP (NFSv4.0 predates sessions, hence no SessionId), plus one NFSv4.2 RDMA session and one NFSv4.1 RDMA session.
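To summarize longer client lists, the output can be tallied by NFS version and transport. This minimal sketch assumes the column order shown above (ID, Vers, Conn, SessionId, Client Address, and so on); the ‘summarize_nfs4_clients’ function is hypothetical.

```bash
# Minimal sketch: tally `isi_nfs4mgmt` output (on stdin) by NFS version and
# transport. Assumes the column order shown above (ID, Vers, Conn, SessionId,
# Client Address, ...); the header is skipped because its ID field is not numeric.
summarize_nfs4_clients() {
    awk '$1 ~ /^[0-9]+$/ { count["NFSv" $2 " over " $3]++ }
         END { for (k in count) print count[k], k }' |
        sort -k2
}

# On a cluster node:
#   isi_nfs4mgmt | summarize_nfs4_clients
```

For the sample output above, it would report two NFSv4.0 clients over TCP and one RDMA client each for NFSv4.1 and NFSv4.2.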

Used with the ‘--dump’ flag and a client ID, ‘isi_nfs4mgmt’ provides detailed information for a particular session:

    # isi_nfs4mgmt --dump 2940493934191674019
    Dump of client 2940493934191674019
    Open Owners (0):
    Session ID: 3
    Forward Channel Connections:
    Remote: 10.2.50.227.40908 Local: 10.2.50.98.20049
    ....

Note that the ‘isi_nfs4mgmt’ tool is specific to stateful NFSv4 sessions and will not list any stateless NFSv3 activity.

In the next article in this series, we’ll explore the procedure for enabling NFS over RDMA on a PowerScale cluster.