A number of scientific computing and commercial HPC customers use Infiniband as their principal network transport and, to date, have relied on Infiniband bridges and gateways to connect their IB-attached compute server fleets to their PowerScale clusters. To remove the need for these intermediary devices, OneFS 9.10 introduces support for low-latency HDR Infiniband front-end network connectivity on the F710 and F910 all-flash platforms, providing up to 200Gb/s of bandwidth with sub-microsecond latency. This can directly benefit generative AI and machine learning environments, plus other workloads, particularly those involving highly concurrent streaming reads and writes.

In conjunction with the OneFS multipath driver, plus GPUDirect support, the choice of either HDR Infiniband or 200Gig Ethernet can satisfy the networking and data requirements of demanding technical workloads, such as autonomous driving model training, seismic analysis, complex transformer-based AI workloads, and deep learning systems.

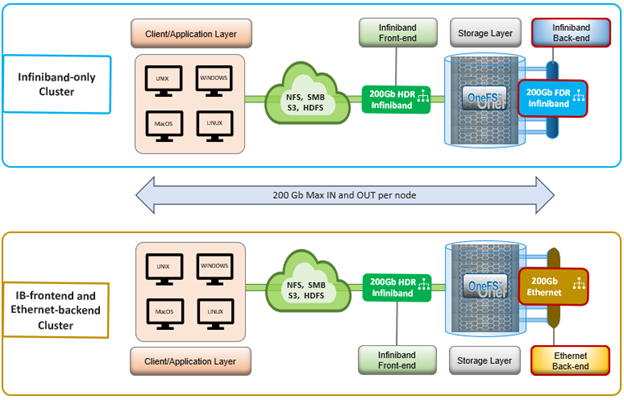

Specifically, this new functionality expands cluster front-end connectivity to include Infiniband, in addition to Ethernet, and enables the use of IP over IB and NFSoRDMA over IB.

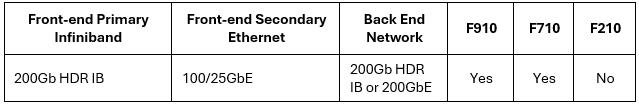

With its debut in OneFS 9.10, front-end IB support is currently offered on both the PowerScale F710 and F910 all-flash nodes.

These two platforms can now host a Mellanox CX-6 VPI NIC configured for HDR Infiniband in the primary front-end PCI slot, plus a CX-6 DX NIC configured for Ethernet in the secondary front-end PCI slot. This is worth noting, since in an Ethernet-only node, the primary slot is typically used for Gb Ethernet, while the secondary slot is unpopulated. For performance reasons, this configuration ensures that the primary interface makes use of the full bandwidth of the Gen5 PCIe slot, while the secondary slot offers PCIe Gen4 connectivity, which limits it to 100Gb. Additionally, in OneFS 9.10, the node’s backend network, used for intra-cluster communication, can now also be configured for either HDR Infiniband or 200Gb Ethernet.
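
The slot bandwidth reasoning above can be sanity-checked with some rough arithmetic. The following is a minimal sketch, assuming the standard PCIe per-lane signaling rates (16 GT/s for Gen4, 32 GT/s for Gen5) and 128b/130b line encoding. The x8 lane width is purely an assumption for illustration, since the slot widths are not stated here, and the calculation ignores packet and protocol overhead, so real usable throughput will be somewhat lower.

```python
# Raw per-lane signaling rate in GT/s for each PCIe generation.
GT_PER_LANE = {4: 16.0, 5: 32.0}

# Gen3 and later use 128b/130b encoding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130

# ASSUMPTION: lane width chosen for illustration only; the actual slot
# widths on the F710 and F910 are not given in this article.
ASSUMED_LANES = 8


def pcie_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction slot bandwidth in Gb/s, before protocol overhead."""
    return GT_PER_LANE[gen] * ENCODING_EFFICIENCY * lanes


def slot_can_carry(gen: int, lanes: int, nic_gbps: float) -> bool:
    """True if the slot's approximate bandwidth covers the NIC line rate."""
    return pcie_gbps(gen, lanes) >= nic_gbps


for gen in (4, 5):
    bandwidth = pcie_gbps(gen, ASSUMED_LANES)
    print(
        f"Gen{gen} x{ASSUMED_LANES}: ~{bandwidth:.0f} Gb/s, "
        f"200Gb capable: {slot_can_carry(gen, ASSUMED_LANES, 200)}, "
        f"100Gb capable: {slot_can_carry(gen, ASSUMED_LANES, 100)}"
    )
```

Under that assumed width, a Gen4 slot has headroom for a 100Gb link but not a 200Gb one, while a Gen5 slot of the same width can carry the full 200Gb rate, which is consistent with the primary and secondary slot roles described above.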

Prior to OneFS 9.10, several components within OneFS assumed that Infiniband was synonymous with the backend network. These included the platform support interface (PSI), which is used to get information on the running platform, and which contained a single ‘network.interfaces.infiniband’ key for Infiniband in OneFS 9.9 and earlier releases. To rectify this, the psi.conf in OneFS 9.10 now includes a pair of ‘network.interfaces.infiniband.frontend’ and ‘network.interfaces.infiniband.backend’ keys, mirroring the corresponding key pair for the Ethernet front-end and backend networks.

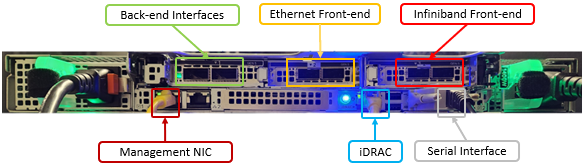

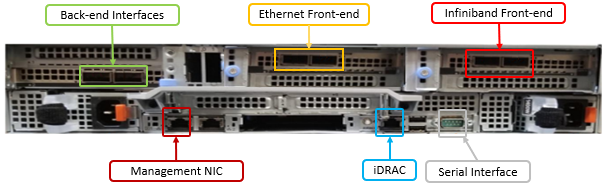

Here’s the rear view of both the F710 and F910 platforms, showing the slot locations of the front and back-end NICs in the Infiniband configuration. Note that both F710 and F910 nodes configured with Infiniband front-end support automatically ship with both an HDR IB card in the primary front-end slot (red) and a 100Gb Ethernet NIC in the secondary front-end slot.

F710

F910

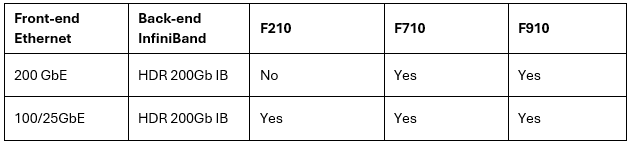

Note that the PowerScale F210 does not currently support either front-end Infiniband or 200Gig Ethernet. This is a limitation of qualification effort rather than a platform constraint.

That said, OneFS 9.10 does provide support for either backend HDR Infiniband or 200Gb Ethernet on all the current F-series platforms, including the F210 node.

Backend HDR Infiniband support again uses the venerable CX-6 VPI 200Gb NIC, paired with the Quantum QM8790 IB switch and the supplied 200Gb HDR cables.

Note, though, that the QM8790 switch only provides 40 HDR ports, and breakout cables are not currently supported. So, for now, an F-series cluster with an IB backend running OneFS 9.10 will be limited to a maximum of forty nodes. It’s also worth mentioning that the QM8790 switch does support lower IB data rates such as QDR and FDR, and has also been qualified with the legacy CX3 VPI NICs and IB cables for legacy IB cluster compatibility and migration purposes.

From the CLI, the isi_hw_status command can be used to report on a node’s front and back-end networking types. For example, the following output from an F710 shows the front-end network ‘FEType’ parameter as ‘Infiniband’.

    # isi_hw_status | grep Type
    BEType: 200GigE
    FEType: Infiniband

However, the back-end network on this F710 is 200Gb Ethernet, as reported by the ‘BEType’ parameter.
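
For scripted checks across a number of nodes, the filtered output can be turned into a small lookup. The following is a minimal sketch, assuming only the ‘BEType’ and ‘FEType’ field names shown above. The function name and the sample text are hypothetical, and the pattern is written to cope with the fields arriving either one per line or run together on a single line.

```python
import re

# Matches "BEType: <value>" or "FEType: <value>" pairs, whether the fields
# appear on separate lines or are run together on one line.
_TYPE_FIELD = re.compile(r"\b(BEType|FEType):\s*(\S+)")


def parse_network_types(text: str) -> dict[str, str]:
    """Map each network type field name to its reported value."""
    return {name: value for name, value in _TYPE_FIELD.findall(text)}


# Illustrative sample text only (not captured from a live cluster),
# mirroring the node described above.
sample = "BEType: 200GigE\nFEType: Infiniband\n"

types = parse_network_types(sample)
print("front-end:", types.get("FEType"))
print("back-end:", types.get("BEType"))
```

A missing field simply yields `None` from `types.get(...)`, so a caller can tell an unreported network type apart from an unexpected value.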

In the next article in this series, we’ll look at the configuration and management of front-end Infiniband in OneFS 9.10.