In this final article in the Dell Technologies Connectivity Services (DTCS) for OneFS series, we turn our attention to management and troubleshooting.

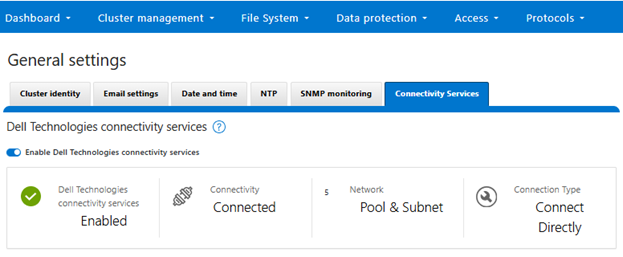

Once DTCS provisioning is complete, the ‘isi connectivity settings view’ CLI command reports the status and health of DTCS operations on the cluster.

```
# isi connectivity settings view
        Service enabled: Yes
       Connection State: enabled
      OneFS Software ID: xxxxxxxxxx
          Network Pools: subnet0:pool0
        Connection mode: direct
           Gateway host: -
           Gateway port: -
    Backup Gateway host: -
    Backup Gateway port: -
  Enable Remote Support: Yes
Automatic Case Creation: Yes
       Download enabled: Yes
```
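For scripted health checks, the ‘Field: value’ layout of this output is easy to parse. The following is a minimal Python sketch, assuming the output keeps the one-pair-per-line format shown above. The ‘healthy’ rules in EXPECTED are illustrative choices, not an official Dell check, and the run_on_cluster() helper only works when executed on a OneFS node.

```python
import subprocess

# Illustrative expectations for a provisioned, connected cluster (assumption).
EXPECTED = {
    "Service enabled": "Yes",
    "Connection State": "enabled",
}


def parse_view(text: str) -> dict[str, str]:
    """Split each 'Field: value' line on its first colon."""
    fields = {}
    for line in text.splitlines():
        if ":" not in line or line.lstrip().startswith("#"):
            continue  # skip the command prompt line and blank lines
        key, _, value = line.partition(":")
        fields[key.strip()] = value.strip()  # e.g. 'subnet0:pool0' stays intact
    return fields


def problems(fields: dict[str, str]) -> list[str]:
    """Return one message per expected field that is missing or different."""
    return [
        f"{key}: expected {want!r}, got {fields.get(key)!r}"
        for key, want in EXPECTED.items()
        if fields.get(key) != want
    ]


def run_on_cluster() -> list[str]:
    """Run the real CLI command (only meaningful on a OneFS node)."""
    out = subprocess.run(
        ["isi", "connectivity", "settings", "view"],
        capture_output=True, text=True, check=True,
    ).stdout
    return problems(parse_view(out))
```

An empty list from problems() means both fields match; anything else names the field that needs attention.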

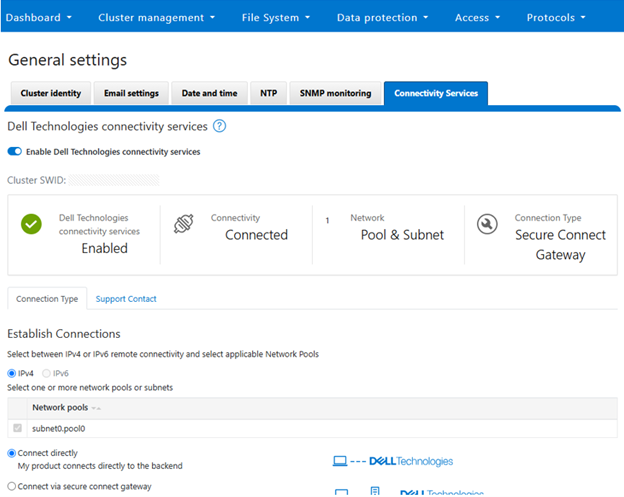

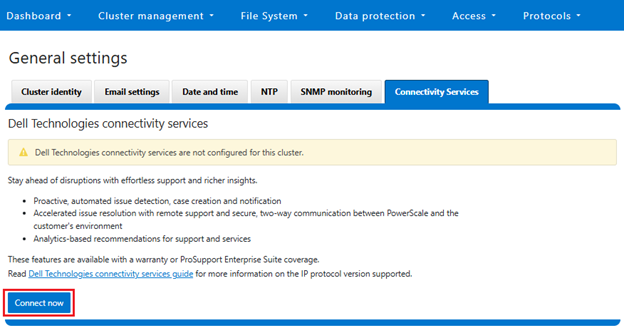

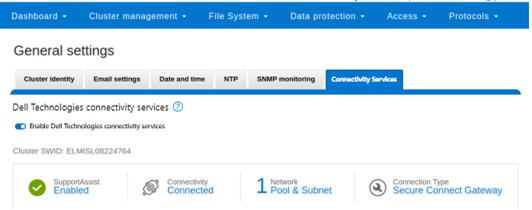

This can also be obtained from the WebUI by navigating to Cluster management > General settings > Connectivity services:

There are some caveats and considerations to keep in mind when upgrading to OneFS 9.10 or later and enabling DTCS, including:

- DTCS is disabled when STIG hardening is applied to the cluster

- Using DTCS on a hardened cluster is not supported

- Clusters with the OneFS network firewall enabled (‘isi network firewall settings’) may need to allow outbound traffic on port 9443.

- DTCS is supported on a cluster that’s running in Compliance mode

- Secure keys are held in the Key Manager under the RICE domain
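These caveats can be folded into a simple pre-flight check before enabling DTCS. The sketch below is hypothetical: the ClusterState flags are values an administrator would fill in by hand (for example, after reviewing ‘isi network firewall settings’), and the messages simply restate the list above. Compliance mode is supported, so it is deliberately not checked.

```python
from dataclasses import dataclass


@dataclass
class ClusterState:
    stig_hardened: bool       # has STIG hardening been applied? (hypothetical input)
    firewall_enabled: bool    # is the OneFS network firewall enabled?
    port_9443_allowed: bool   # does the firewall allow outbound 9443?


def preflight(state: ClusterState) -> list[str]:
    """Return blockers and warnings derived from the DTCS caveats."""
    issues = []
    if state.stig_hardened:
        issues.append("BLOCKER: DTCS is disabled and unsupported on a STIG-hardened cluster")
    if state.firewall_enabled and not state.port_9443_allowed:
        issues.append("WARNING: the firewall may need to allow outbound traffic on port 9443")
    # Compliance mode is supported by DTCS, so it never produces an issue here.
    return issues
```

For example, preflight(ClusterState(False, True, False)) returns only the port 9443 warning.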

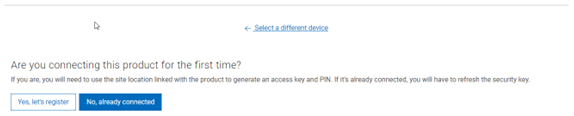

Also, note that ESRS can no longer be used after DTCS has been provisioned on a cluster.

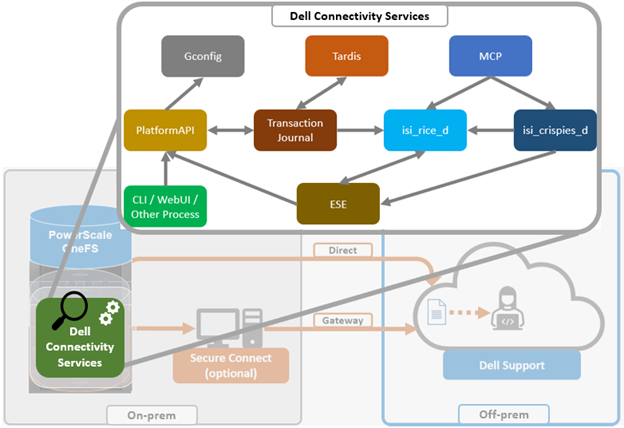

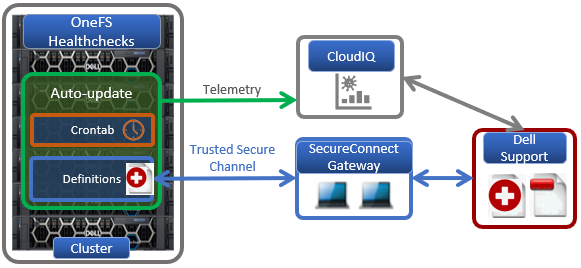

DTCS has a variety of components that gather and transmit OneFS data and telemetry to Dell Support and backend services through the Embedded Service Enabler (ESE). These workflows include CELOG events, In-product activation (IPA) information, CloudIQ telemetry data, isi_gather_info (IGI) logsets, and the provisioning, configuration, and authentication data exchanged with ESE and the various backend services.

| Activity | Information |

| Events and alerts | DTCS can be configured to send CELOG events.. |

| Diagnostics | The OneFS isi diagnostics gather and isi_gather_info logfile collation and transmission commands have a DTCS option. |

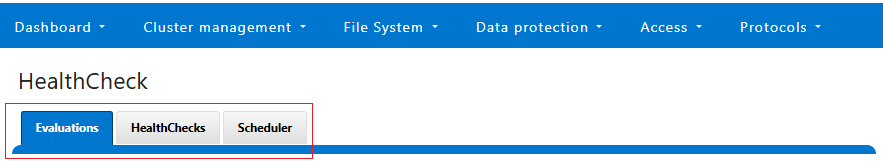

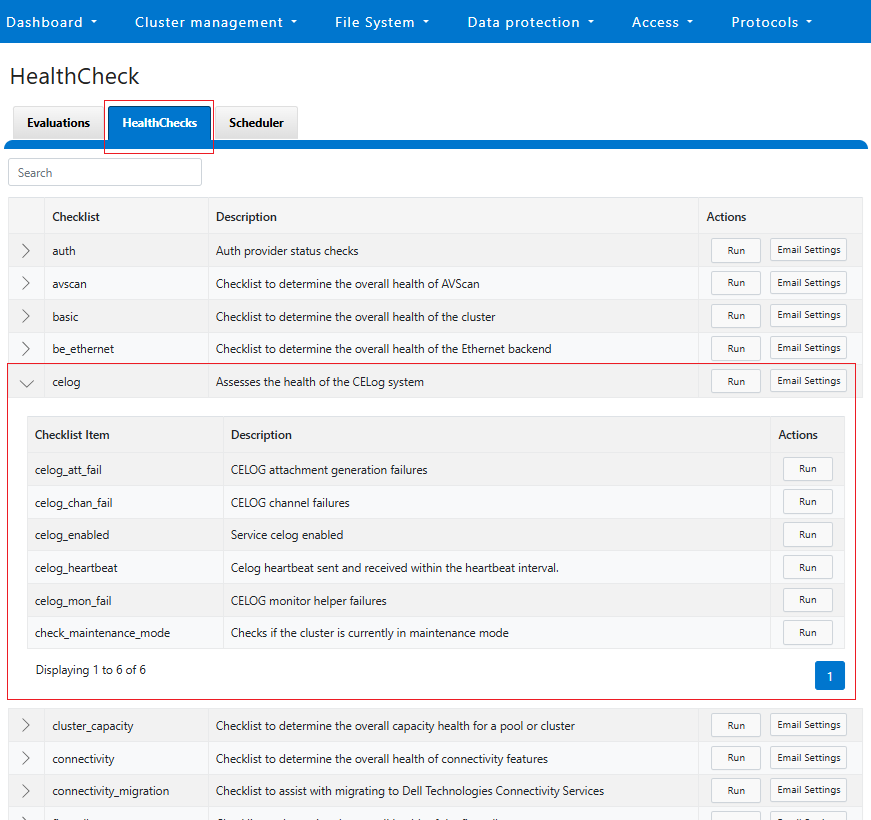

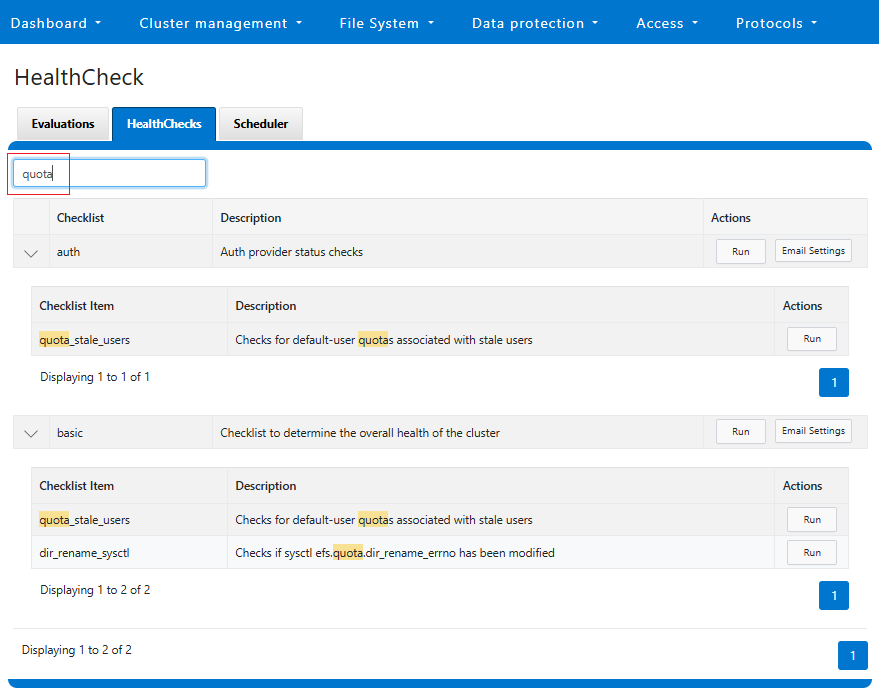

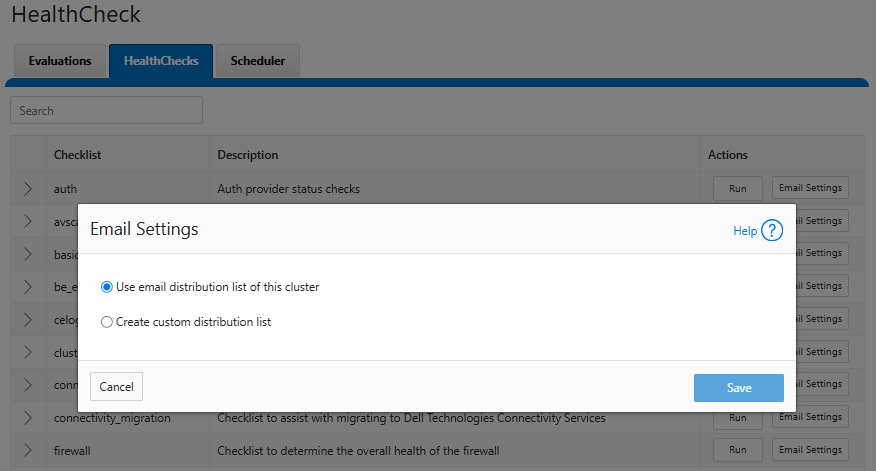

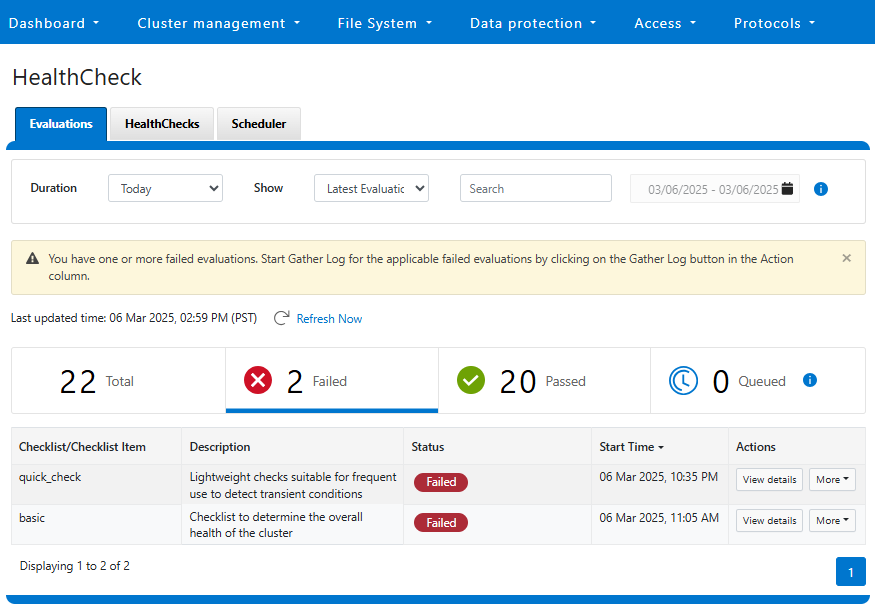

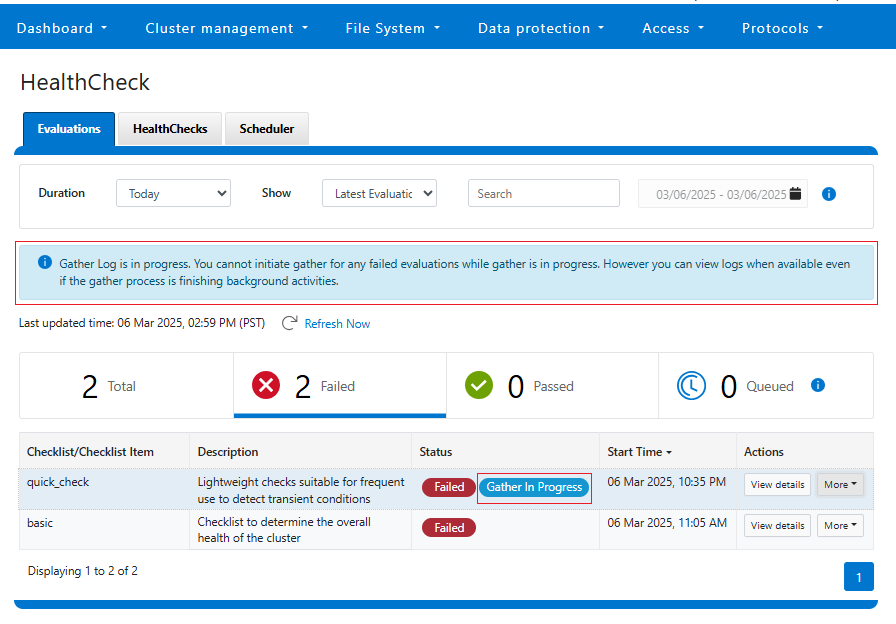

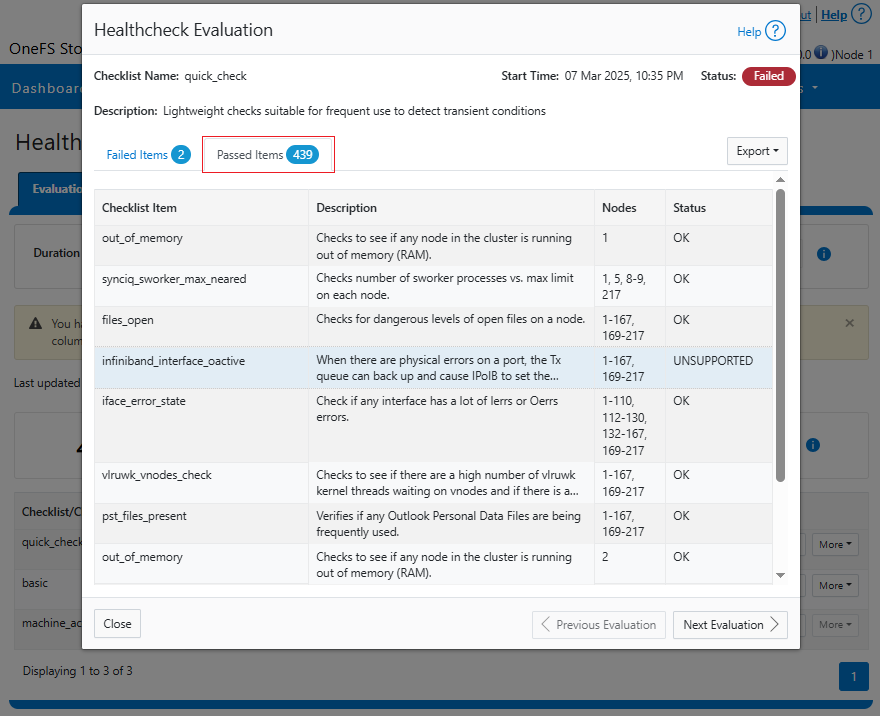

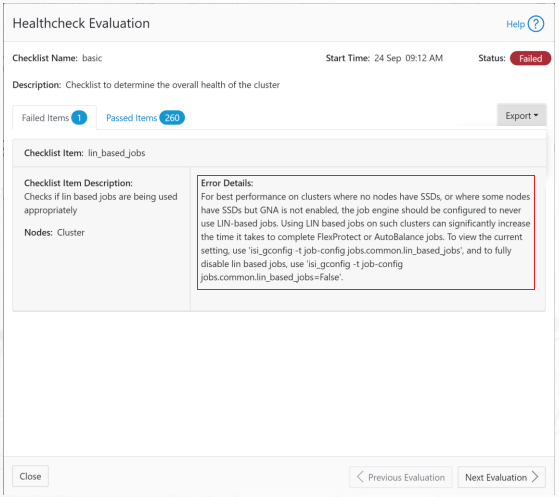

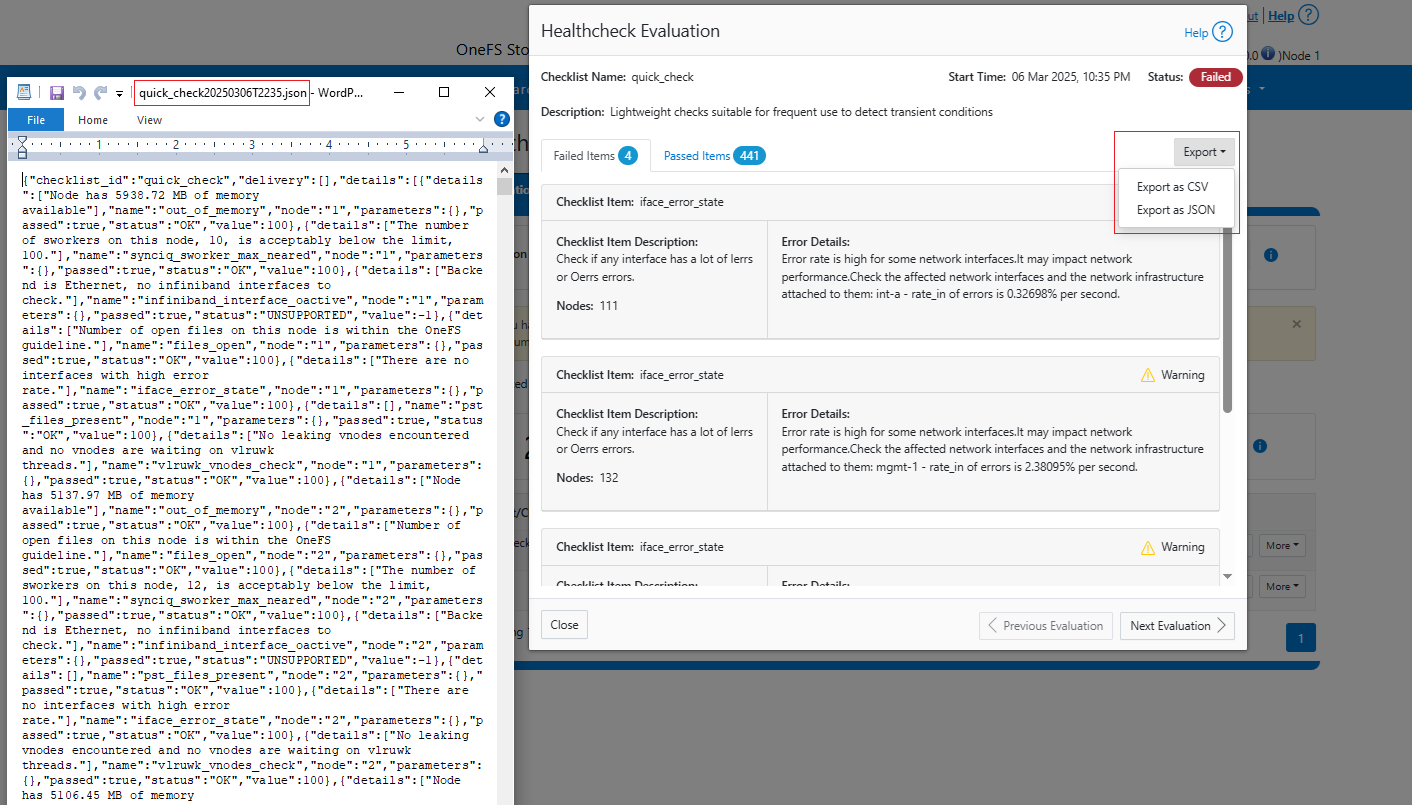

| Healthchecks | HealthCheck definitions are updated using DTCS . |

| License Activation | The isi license activation start command uses DTCS to connect. |

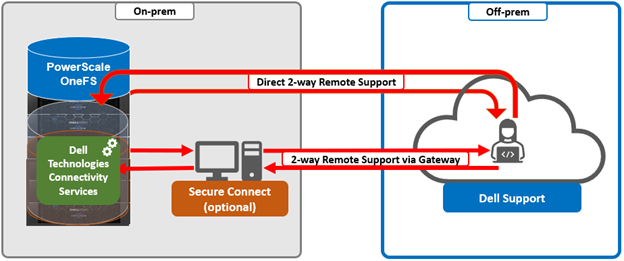

| Remote Support | Remote Support uses DTCS and the Connectivity Hub to assist customers with their clusters. |

| Telemetry | CloudIQ telemetry data is sent using DTCS. |

CELOG

Once DTCS is up and running, it can be configured to send CELOG events and attachments via ESE to CLM. This is managed with the ‘isi event channels’ CLI command set. For example:

```
# isi event channels list
ID   Name                                     Type          Enabled
------------------------------------------------------------------
2    Heartbeat Self-Test                      heartbeat     Yes
3    Dell Technologies connectivity services  connectivity  No
------------------------------------------------------------------
Total: 2

# isi event channels view "Dell Technologies connectivity services"
     ID: 3
   Name: Dell Technologies connectivity services
   Type: connectivity
Enabled: No
```
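To confirm from a script whether the DTCS event channel is enabled, the channel list can be parsed row by row. This is a minimal Python sketch, assuming the table keeps the ID, Name, Type, Enabled column order shown above; because channel names can contain spaces, the regular expression anchors on the numeric ID at the start and the Type and Yes/No columns at the end.

```python
import re

# ID, then a (possibly multi-word) name, then a single-word type, then Yes/No.
ROW = re.compile(r"^\s*(\d+)\s+(.+?)\s+(\S+)\s+(Yes|No)\s*$")


def parse_channels(text: str) -> list[dict]:
    """Return one dict per data row; header, rule and 'Total' lines never match."""
    rows = []
    for line in text.splitlines():
        m = ROW.match(line)
        if m:
            cid, name, ctype, enabled = m.groups()
            rows.append({"id": int(cid), "name": name, "type": ctype,
                         "enabled": enabled == "Yes"})
    return rows


def dtcs_channel_enabled(text: str) -> bool:
    """True if any channel of type 'connectivity' is enabled."""
    return any(r["type"] == "connectivity" and r["enabled"]
               for r in parse_channels(text))
```

Against the sample list above, dtcs_channel_enabled() returns False, matching the ‘Enabled: No’ shown by ‘isi event channels view’.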

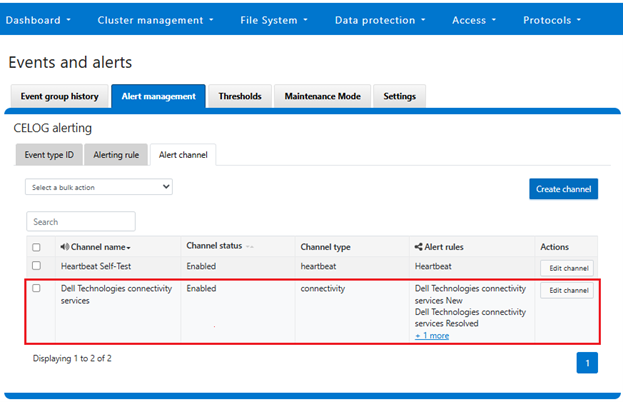

Or from the WebUI:

CloudIQ Telemetry

DTCS provides an option to send telemetry data to CloudIQ. This can be enabled from the CLI as follows:

```
# isi connectivity telemetry modify --telemetry-enabled 1 --telemetry-persist 0
# isi connectivity telemetry view
        Telemetry Enabled: Yes
        Telemetry Persist: No
        Telemetry Threads: 8
Offline Collection Period: 7200
```
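When telemetry settings are managed from automation, it can help to build the command from booleans rather than hand-typing 1/0 values. The sketch below uses only the two flags from the example above; the helper names and the dry-run default are illustrative assumptions, and the command only executes when dry_run=False on a OneFS node.

```python
import shlex
import subprocess


def telemetry_modify_cmd(enabled: bool, persist: bool) -> list[str]:
    """Map Python booleans onto the 1/0 values used in the CLI example."""
    return [
        "isi", "connectivity", "telemetry", "modify",
        "--telemetry-enabled", str(int(enabled)),
        "--telemetry-persist", str(int(persist)),
    ]


def telemetry_modify(enabled: bool, persist: bool, dry_run: bool = True) -> str:
    """Return the command line; run it only when dry_run is False."""
    cmd = telemetry_modify_cmd(enabled, persist)
    if not dry_run:
        subprocess.run(cmd, check=True)
    return shlex.join(cmd)
```

Calling telemetry_modify(True, False) reproduces the command shown above without running it.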

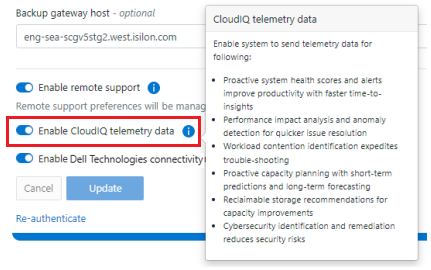

Or via the DTCS WebUI:

Diagnostics Gather

Also, the ‘isi diagnostics gather’ and isi_gather_info CLI commands both now include a ‘--connectivity’ upload option for log gathers, and a new ‘Emergency mode’ allows them to continue to function when the cluster is unhealthy. For example, to start a gather from the CLI that will be uploaded via DTCS:

```
# isi diagnostics gather start --connectivity 1
```

Similarly, for isi_gather_info:

```
# isi_gather_info --connectivity
```

Or, to explicitly prevent isi_gather_info from uploading its log gather via DTCS:

```
# isi_gather_info --noconnectivity
```
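The choice of upload flag can be captured in a small helper for runbooks. This is a hypothetical Python sketch that uses only the flags shown above. For ‘isi diagnostics gather start’ the article only shows the opt-in form, so when DTCS is not wanted the sketch simply omits the flag; the default upload behavior in that case is not covered here.

```python
def gather_cmd(tool: str, use_dtcs: bool) -> list[str]:
    """Build a log-gather command line with or without DTCS upload."""
    if tool == "isi diagnostics":
        base = ["isi", "diagnostics", "gather", "start"]
        # Only '--connectivity 1' is documented above; otherwise leave it off.
        return base + ["--connectivity", "1"] if use_dtcs else base
    if tool == "isi_gather_info":
        # isi_gather_info has explicit opt-in and opt-out flags.
        return ["isi_gather_info", "--connectivity" if use_dtcs else "--noconnectivity"]
    raise ValueError(f"unknown gather tool: {tool}")
```

For example, gather_cmd("isi_gather_info", False) yields the ‘--noconnectivity’ form.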

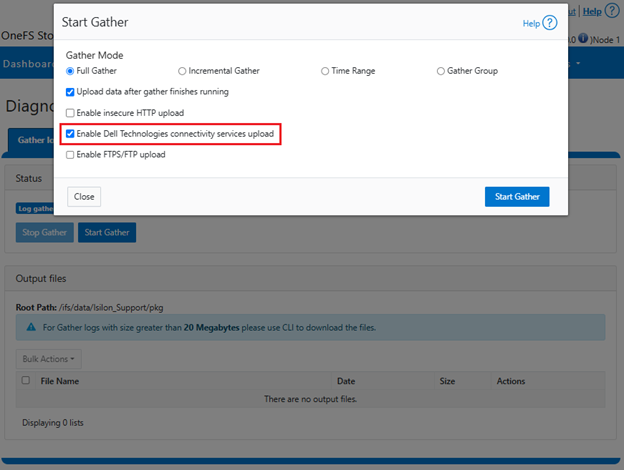

This can also be configured from the WebUI via Cluster management > General configuration > Diagnostics > Gather:

License Activation through DTCS

PowerScale License Activation (previously known as In-Product Activation) facilitates the management of the cluster’s entitlements and licenses by communicating directly with Software Licensing Central via DTCS. Licenses can either be activated automatically or manually.

The procedure for automatic activation includes:

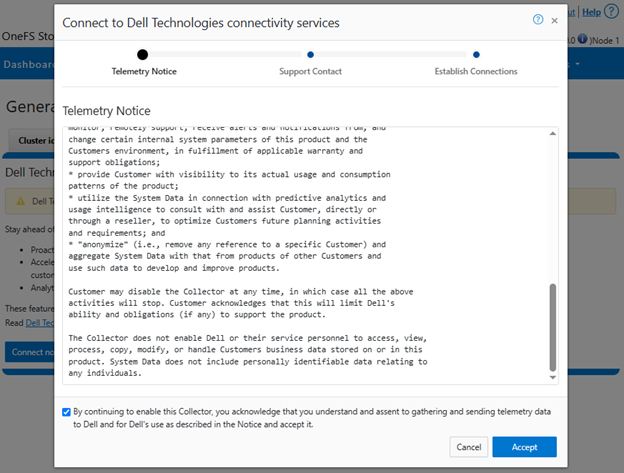

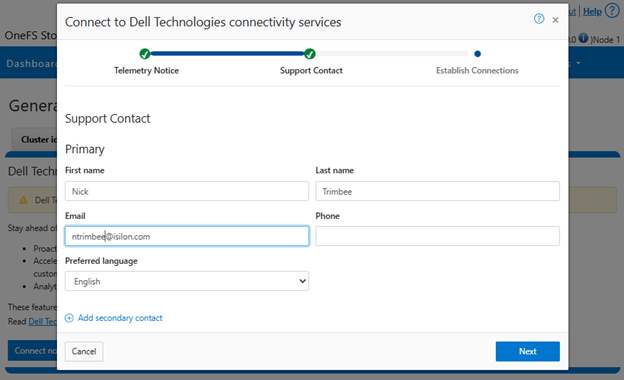

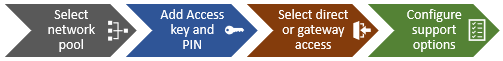

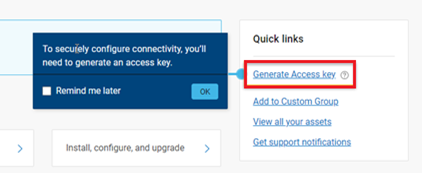

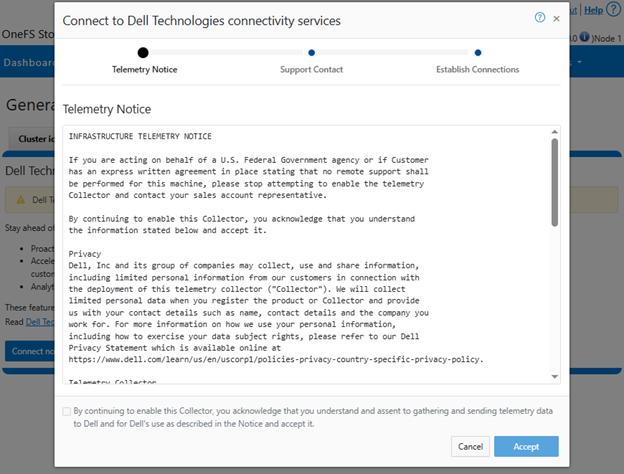

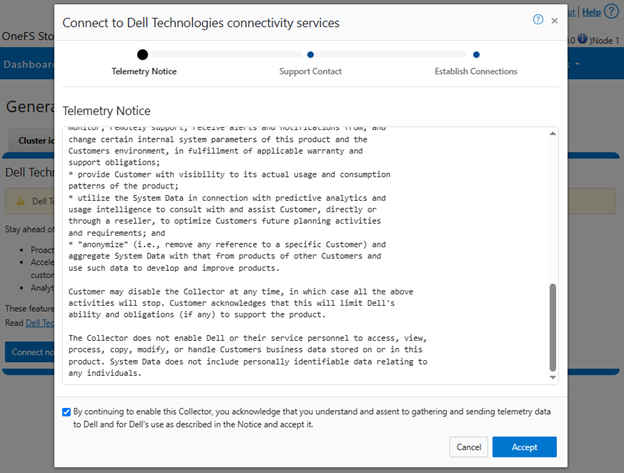

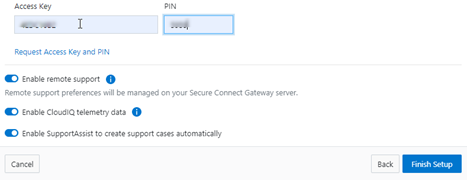

Step 1: Connect to Dell Technologies Connectivity Services

Step 2: Get a License Activation Code

Step 3: Select modules and activate

Similarly, for manual activation:

Step 1: Download the Activation file

Step 2: Get Signed License from Dell Software Licensing Central

Step 3: Upload Signed License

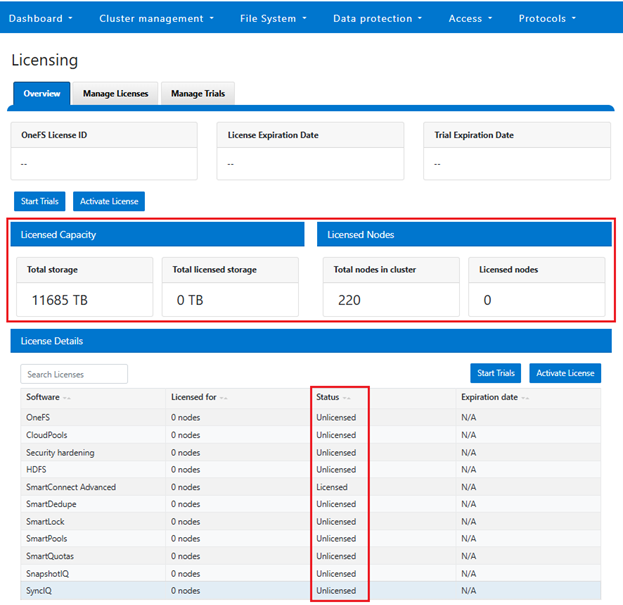

To activate OneFS product licenses through the DTCS WebUI, navigate to Cluster management > Licensing. For example, on a new cluster without any signed licenses:

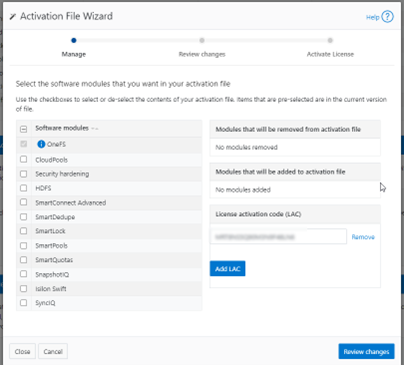

Click the ‘Update & Refresh’ button in the License Activation section. In the ‘Activation File Wizard’, select the desired software modules.

Next, select ‘Review changes’, review the selections, click ‘Proceed’, and finally click ‘Activate’.

Note that it can take up to 24 hours for the activation to occur.

Alternatively, cluster license activation codes (LACs) can be added manually.

Troubleshooting

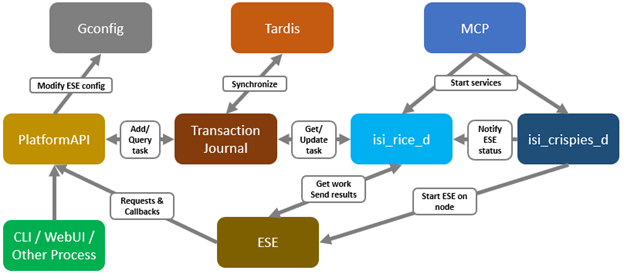

When it comes to troubleshooting DTCS, the basic process flow is as follows:

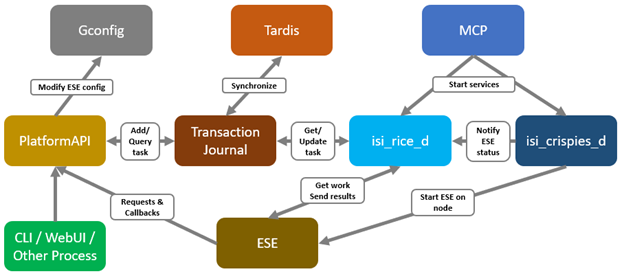

The OneFS components and services above are:

| Component | Info |
|---|---|
| ESE | Embedded Service Enabler. |
| isi_rice_d | Remote Information Connectivity Engine (RICE). |
| isi_crispies_d | Coordinator for RICE Incidental Service Peripherals, including ESE Start. |
| Gconfig | OneFS centralized configuration infrastructure. |
| MCP | Master Control Program: starts, monitors, and restarts OneFS services. |
| Tardis | Configuration service and database. |
| Transaction journal | Task manager for RICE. |

Of these, ESE, isi_crispies_d, isi_rice_d, and the Transaction Journal are exclusive to DTCS and its predecessor, SupportAssist. In contrast, Gconfig, MCP, and Tardis are all legacy services that are used by multiple other OneFS components.

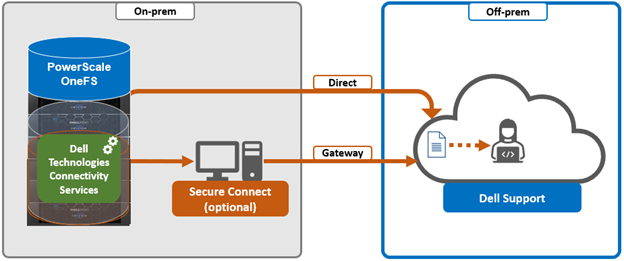

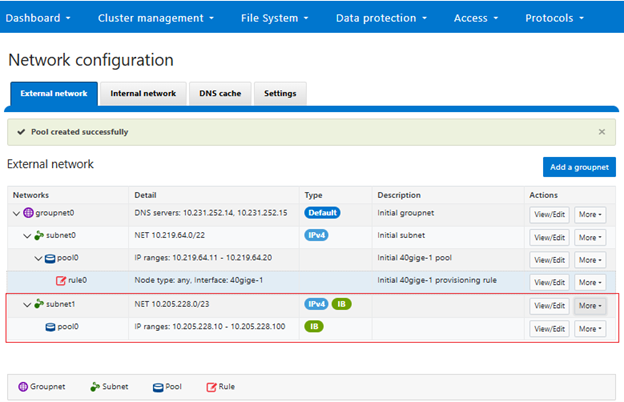

For its connectivity, DTCS elects a single leader node within the subnet pool, automatically avoiding NANON (not all nodes on network) nodes. Ports 443 and 8443 must be open for bi-directional communication between the cluster and the Connectivity Hub, and port 9443 is used for communicating with a gateway. The DTCS ESE component communicates with a number of Dell backend services:

- SRS

- Connectivity Hub

- CLM

- ELMS/Licensing

- SDR

- Lightning

- Log Processor

- CloudIQ

- ESE
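A quick way to rule out basic network blocking is to test TCP connectivity on the ports described above. The following Python sketch is an assumption-laden starting point: the host you pass in is up to you, since the backend endpoint names are not listed here, and port 9443 only matters when a gateway is in use. A successful connect proves only that the port is reachable, not that DTCS itself is healthy.

```python
import socket

DTCS_PORTS = (443, 8443, 9443)  # Connectivity Hub (443, 8443) and gateway (9443)


def probe(host: str, ports=DTCS_PORTS, timeout: float = 3.0) -> dict[int, bool]:
    """Return {port: reachable} using a plain TCP connect per port."""
    results = {}
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:  # covers refused, unreachable, and timed-out connections
            results[port] = False
    return results
```

For example, probe("gateway.example.com", (9443,)) checks only the gateway port; the host name here is a placeholder.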

Debugging backend issues may involve one or more services, and Dell Support can assist with this process.

The main log files for investigating and troubleshooting DTCS issues and idiosyncrasies are isi_rice_d.log and isi_crispies_d.log. There is also an ESE log, which can be useful, too. These can be found at:

| Component | Logfile Location | Info |
|---|---|---|
| Rice | /var/log/isi_rice_d.log | Per node |
| Crispies | /var/log/isi_crispies_d.log | Per node |
| ESE | /ifs/.ifsvar/ese/var/log/ESE.log | Cluster-wide (single ESE instance) |
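When skimming these logs, it can help to pull out just the most recent error lines. The Python sketch below takes its paths from the table above; matching on the word ‘error’ (case-insensitive) is an assumption about the log contents rather than a documented log field, so adjust the keyword to what your logs actually contain. Remember that the Rice and Crispies logs are per node.

```python
from collections import deque
from pathlib import Path

DTCS_LOGS = {
    "rice": "/var/log/isi_rice_d.log",          # per node
    "crispies": "/var/log/isi_crispies_d.log",  # per node
    "ese": "/ifs/.ifsvar/ese/var/log/ESE.log",  # cluster-wide
}


def recent_errors(path: str, keyword: str = "error", limit: int = 20) -> list[str]:
    """Return up to `limit` of the newest lines containing `keyword`."""
    hits = deque(maxlen=limit)  # older matches fall off the front
    with Path(path).open(errors="replace") as fh:
        for line in fh:
            if keyword.lower() in line.lower():
                hits.append(line.rstrip("\n"))
    return list(hits)
```

For example, recent_errors(DTCS_LOGS["rice"]) scans the local node's Rice log.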

Debug level logging can be configured from the CLI as follows:

```
# isi_for_array isi_ilog -a isi_crispies_d --level=debug+
# isi_for_array isi_ilog -a isi_rice_d --level=debug+
```
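If these commands are part of a troubleshooting runbook, they can be generated rather than retyped. This Python sketch only reproduces the ‘isi_for_array isi_ilog -a <daemon> --level=<level>’ pattern from the example above; the function name is hypothetical, and no other log levels are implied beyond whatever value you pass in.

```python
import shlex

DTCS_DAEMONS = ("isi_crispies_d", "isi_rice_d")


def ilog_commands(level: str = "debug+", daemons=DTCS_DAEMONS) -> list[str]:
    """One cluster-wide isi_ilog command per DTCS daemon, safely quoted."""
    return [
        shlex.join(["isi_for_array", "isi_ilog", "-a", d, f"--level={level}"])
        for d in daemons
    ]
```

Printing ilog_commands() yields the two command lines shown above.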

Note that the OneFS log gathers (such as the output from the isi_gather_info utility) will capture all the above log files, plus the pertinent DTCS Gconfig contexts and Tardis namespaces, for later analysis.

If needed, the Rice and ESE configurations can also be viewed as follows:

```
# isi_gconfig -t ese
[root] {version:1}
ese.mode (char*) = direct
ese.connection_state (char*) = disabled
ese.enable_remote_support (bool) = true
ese.automatic_case_creation (bool) = true
ese.event_muted (bool) = false
ese.primary_contact.first_name (char*) =
ese.primary_contact.last_name (char*) =
ese.primary_contact.email (char*) =
ese.primary_contact.phone (char*) =
ese.primary_contact.language (char*) =
ese.secondary_contact.first_name (char*) =
ese.secondary_contact.last_name (char*) =
ese.secondary_contact.email (char*) =
ese.secondary_contact.phone (char*) =
ese.secondary_contact.language (char*) =
(empty dir ese.gateway_endpoints)
ese.defaultBackendType (char*) = srs
ese.ipAddress (char*) = 127.0.0.1
ese.useSSL (bool) = true
ese.srsPrefix (char*) = /esrs/{version}/devices
ese.directEndpointsUseProxy (bool) = false
ese.enableDataItemApi (bool) = true
ese.usingBuiltinConfig (bool) = false
ese.productFrontendPrefix (char*) = platform/16/connectivity
ese.productFrontendType (char*) = webrest
ese.contractVersion (char*) = 1.0
ese.systemMode (char*) = normal
ese.srsTransferType (char*) = ISILON-GW
ese.targetEnvironment (char*) = PROD
```

And for the ‘rice’ context:

```
# isi_gconfig -t rice
[root] {version:1}
rice.enabled (bool) = false
rice.ese_provisioned (bool) = false
rice.hardware_key_present (bool) = false
rice.connectivity_dismissed (bool) = false
rice.eligible_lnns (char*) = []
rice.instance_swid (char*) =
rice.task_prune_interval (int) = 86400
rice.last_task_prune_time (uint) = 0
rice.event_prune_max_items (int) = 100
rice.event_prune_days_to_keep (int) = 30
rice.jnl_tasks_prune_max_items (int) = 100
rice.jnl_tasks_prune_days_to_keep (int) = 30
rice.config_reserved_workers (int) = 1
rice.event_reserved_workers (int) = 1
rice.telemetry_reserved_workers (int) = 1
rice.license_reserved_workers (int) = 1
rice.log_reserved_workers (int) = 1
rice.download_reserved_workers (int) = 1
rice.misc_task_workers (int) = 3
rice.accepted_terms (bool) = false
(empty dir rice.network_pools)
rice.telemetry_enabled (bool) = true
rice.telemetry_persist (bool) = false
rice.telemetry_threads (uint) = 8
rice.enable_download (bool) = true
rice.init_performed (bool) = false
rice.ese_disconnect_alert_timeout (int) = 14400
rice.offline_collection_period (uint) = 7200
```

The ‘-q’ flag can also be used in conjunction with the isi_gconfig command to identify any values that are not at their default settings. For example, the stock (default) Rice gconfig context will not report any configuration entries:

```
# isi_gconfig -q -t rice
[root] {version:1}
```
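Beyond ‘-q’, it can be useful to compare two full dumps, for example one saved before and one after a configuration change. The Python sketch below parses the ‘key (type) = value’ lines from the listings above into typed values and reports what differs from a saved baseline. It approximates the idea behind ‘-q’ rather than reproducing it, and its type mapping covers only the bool, int, uint, and char* types seen above.

```python
import re

# Matches e.g. 'rice.telemetry_threads (uint) = 8' and 'ese.primary_contact.email (char*) ='
LINE = re.compile(r"^(?P<key>[\w.]+) \((?P<type>[^)]+)\) = ?(?P<value>.*)$")


def convert(ctype: str, raw: str):
    """Turn the textual value into a Python value based on its gconfig type."""
    if ctype == "bool":
        return raw == "true"
    if ctype in ("int", "uint"):
        return int(raw)
    return raw  # char* (and anything unrecognised) stays a string


def parse_gconfig(text: str) -> dict:
    """Map key -> typed value; '[root]' and '(empty dir ...)' lines are skipped."""
    out = {}
    for line in text.splitlines():
        m = LINE.match(line.strip())
        if m:
            out[m["key"]] = convert(m["type"], m["value"].strip())
    return out


def changed(current: dict, baseline: dict) -> dict:
    """Keys whose value differs from, or is missing in, the baseline dump."""
    return {k: v for k, v in current.items() if baseline.get(k) != v}
```

For instance, diffing a dump taken after enabling DTCS against one taken before would surface keys such as rice.enabled.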

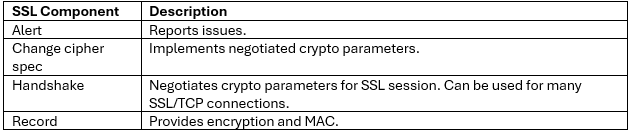

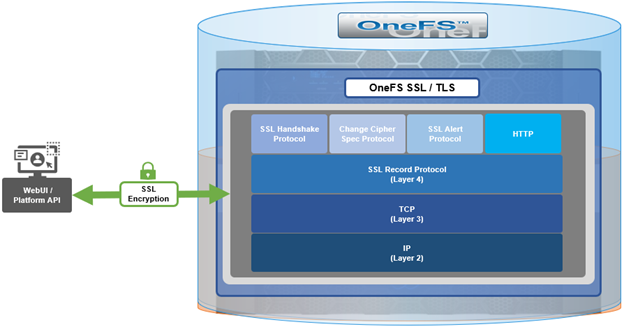

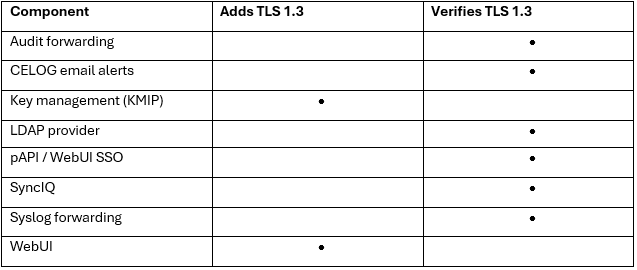

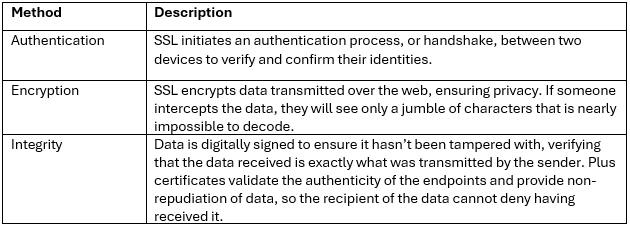

When using either the OneFS WebUI or platform API (pAPI), all communication sessions are encrypted using SSL and the related Transport Layer Security (TLS). As such, SSL and TLS play a critical role in PowerScale’s Zero Trust architecture by enhancing security via encryption, validation, and digital signing.
